Description

When I convert a very complex ONNX model to a TensorRT engine with FP32, the outputs of the two models are the same. The command is as follows:

trtexec --onnx=encoder2.onnx --saveEngine=encoderfp32.trt --useCudaGraph --verbose --tacticSources=-cublasLt,+cublas --workspace=10240M --minShapes=src_tokens:1x1000 --optShapes=src_tokens:1x100000 --maxShapes=src_tokens:1x700000 --preview=+fasterDynamicShapes0805

However, when I convert the same ONNX model to a TensorRT engine with FP16:

trtexec --onnx=encoder2.onnx --fp16 --saveEngine=encoderfp16.trt --useCudaGraph --verbose --tacticSources=-cublasLt,+cublas --workspace=10240M --minShapes=src_tokens:1x1000 --optShapes=src_tokens:1x100000 --maxShapes=src_tokens:1x700000 --preview=+fasterDynamicShapes0805 >log.en

the outputs of the ONNX model and the TensorRT FP16 engine are very different, and some problems are reported:

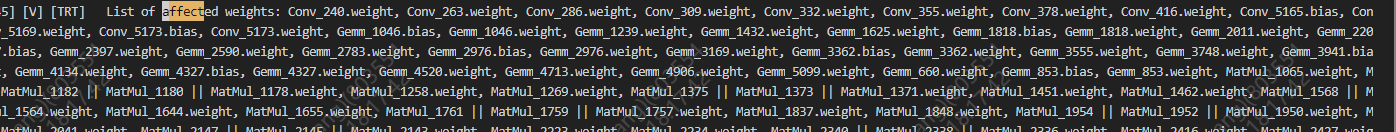

The log shows the affected weights. The full log is in log.en:

log.en (33.7 MB)
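
To put a number on "very different", the two outputs can be compared element by element. The following is only a minimal sketch: it assumes both outputs have already been dumped as flat lists of floats, and the function name `compare_outputs`, the tolerances, and the sample values are hypothetical, not taken from the actual model.

```python
import math


def compare_outputs(reference, candidate, rel_tol=1e-2, abs_tol=1e-3):
    """Return (max_abs_diff, mismatches) for two equal-length float sequences.

    `reference` would be the ONNX output and `candidate` the FP16 engine
    output. The tolerances are illustrative assumptions, not recommended values.
    """
    if len(reference) != len(candidate):
        raise ValueError("outputs must have the same number of elements")

    max_abs_diff = 0.0
    mismatches = 0
    for ref, cand in zip(reference, candidate):
        # NaN or Inf in the FP16 output always counts as a mismatch.
        if math.isnan(cand) or math.isinf(cand):
            mismatches += 1
            continue
        max_abs_diff = max(max_abs_diff, abs(ref - cand))
        if not math.isclose(ref, cand, rel_tol=rel_tol, abs_tol=abs_tol):
            mismatches += 1
    return max_abs_diff, mismatches


# Hypothetical sample values, only to show the call.
onnx_out = [0.5, -1.25, 3.0]
fp16_out = [0.5, -1.25, 7.0]
max_diff, bad = compare_outputs(onnx_out, fp16_out)
print(f"max abs diff = {max_diff}, mismatching elements = {bad}")
```

Reporting the maximum absolute difference and the mismatch count for both the FP32 and FP16 engines makes it easier to see whether the FP16 drift is a few outliers or spread across the whole output.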

How should I use the --precisionConstraints and --layerPrecisions flags to avoid this problem? Is the following command correct?

trtexec --onnx=encoder2.onnx --fp16 --saveEngine=encoderfp16.trt --useCudaGraph --verbose --tacticSources=-cublasLt,+cublas --workspace=10240M --minShapes=src_tokens:1x1000 --optShapes=src_tokens:1x100000 --maxShapes=src_tokens:1x700000 --preview=+fasterDynamicShapes0805 --precisionConstraints=obey --layerPrecisions=Conv_240.weight:fp32,Conv_263.weight:fp32,Conv_286.weight:fp32

But it doesn't work.

The full log is in log.en1, and it is the same as log.en:

log.en1 (33.9 MB)
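
One thing worth checking in the command above: the trtexec help text describes the `--layerPrecisions` spec as `layerName:precision`, whereas the entries used here end in `.weight`, which looks like a weight name. The sketch below builds the spec string under the assumption that each layer name is the weight name with the `.weight` suffix removed. That mapping is a guess and has not been confirmed against the verbose log, and the helper name `layer_precision_spec` is hypothetical.

```python
def layer_precision_spec(weight_names, precision="fp32"):
    """Build a value for trtexec's --layerPrecisions flag from weight names.

    Assumption (not verified against the log): the layer name is the weight
    name without its ".weight" suffix, e.g. "Conv_240.weight" -> "Conv_240".
    """
    allowed = {"fp32", "fp16", "int32", "int8"}
    if precision not in allowed:
        raise ValueError(f"unsupported precision: {precision}")

    suffix = ".weight"
    layers = []
    for name in weight_names:
        layer = name[: -len(suffix)] if name.endswith(suffix) else name
        if layer not in layers:  # keep order, drop duplicates
            layers.append(layer)
    return ",".join(f"{layer}:{precision}" for layer in layers)


affected = ["Conv_240.weight", "Conv_263.weight", "Conv_286.weight"]
spec = layer_precision_spec(affected)
print(f"--precisionConstraints=obey --layerPrecisions={spec}")
```

The actual layer names should be taken from the `--verbose` output, since the names TensorRT assigns after parsing the ONNX file may differ from the names of the ONNX initializers.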

Environment

The system configuration is as follows:

cuda: cuda-11.6

tensorrt: TensorRT-8.5.2.2.Linux.x86_64-gnu.cuda-11.8.cudnn8.6.tar.gz

cudnn: cudnn-linux-x86_64-8.6.0.163_cuda11-archive

onnx: onnx1.12.0

pytorch: 1.12.0