We were experiencing lower-than-expected performance on our DriveAGX Pegasus running DriveOS 10. When analyzing our application with “nvprof”, we identified calls to ‘cudaMalloc’, which allocate memory for the iGPU, as one of the problems. I wrote a small test program:

#include <chrono>
#include <cstddef>
#include <iostream>
#include <cuda_runtime.h>  // runtime API: cudaSetDevice, cudaMalloc, cudaMemcpy, cudaFree

int main() {
    using Clock = std::chrono::high_resolution_clock;
    // Explicit millisecond duration, independent of the clock's tick period.
    using Millis = std::chrono::duration<double, std::milli>;

    const int n = 1000;  // number of timed iterations
    const unsigned sizeX = 1920u;
    const unsigned sizeY = 1208u;
    const std::size_t bytes = static_cast<std::size_t>(sizeX) * sizeY * sizeof(float);

    cudaSetDevice(1);  // select the iGPU (device 1 on this setup)

    // Source buffer for the device-to-device copy (contents are irrelevant here).
    float* in = nullptr;
    cudaMalloc(&in, bytes);

    double tMalloc = 0.0, tCopy = 0.0, tFree = 0.0;  // accumulated times in ms

    for (int i = 0; i < n; ++i) {
        float* tmp = nullptr;
        const auto t0 = Clock::now();
        cudaMalloc(&tmp, bytes);
        const auto t1 = Clock::now();
        // A device-to-device cudaMemcpy may return before the copy has finished,
        // so part of the copy time can end up attributed to cudaFree below.
        cudaMemcpy(tmp, in, bytes, cudaMemcpyDeviceToDevice);
        const auto t2 = Clock::now();
        cudaFree(tmp);
        const auto t3 = Clock::now();

        tMalloc += Millis(t1 - t0).count();
        tCopy += Millis(t2 - t1).count();
        tFree += Millis(t3 - t2).count();
    }

    // Report the mean time per call.
    std::cout << " cudaMalloc " << tMalloc / n << " ms, "
              << " cudaMemcpy " << tCopy / n << " ms, "
              << " cudaFree " << tFree / n << " ms"
              << std::endl;

    cudaFree(in);
    return 0;
}
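The per-call timing pattern in the loop above could also be factored into a small helper. The following is only a sketch: `meanMillis` is a hypothetical name that is not part of the test program, and it uses `std::chrono::steady_clock` with an explicit millisecond duration so the result does not depend on the clock's tick period.

```cpp
#include <cassert>
#include <chrono>

// Hypothetical helper (not part of the original test program): calls `fn`
// `iterations` times and returns the mean wall-clock time per call in ms.
template <typename Fn>
double meanMillis(int iterations, Fn&& fn) {
    assert(iterations > 0);
    using Clock = std::chrono::steady_clock;
    // Floating-point milliseconds; clock ticks convert to this implicitly.
    std::chrono::duration<double, std::milli> total{0.0};
    for (int i = 0; i < iterations; ++i) {
        const auto t0 = Clock::now();
        fn();
        total += Clock::now() - t0;
    }
    return total.count() / iterations;
}
```

With this, something like `meanMillis(n, [&] { cudaMalloc(&tmp, bytes); cudaFree(tmp); })` would time an allocate/free pair. Timing the three calls separately, as the program above does, still needs the inline timestamps, because `tmp` has to live across the calls.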

When I run this on the Xavier A, I get the following output:

cudaMalloc 11.9218 ms, cudaMemcpy 0.0491907 ms, cudaFree 0.974387 ms

When running on the Xavier B, the output looks as follows:

cudaMalloc 6.02418 ms, cudaMemcpy 0.0661738 ms, cudaFree 0.953712 ms

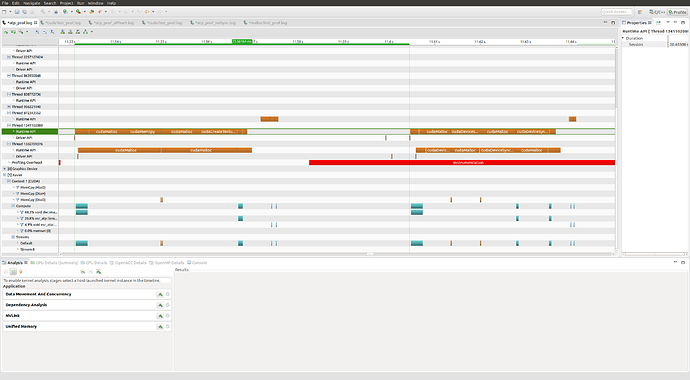

So “cudaMalloc” takes much longer than it should, and it is also about twice as slow on the Xavier A as on the Xavier B. Before running this test, I enabled all 6 CPU cores as described here and stopped all services I don’t need as described here.

For comparison, I also ran my code on a DrivePX2, with the following results:

On TegraA:

cudaMalloc 0.926063 ms, cudaMemcpy 0.084942 ms, cudaFree 1.32754 ms

On TegraB:

cudaMalloc 0.638675 ms, cudaMemcpy 0.0383388 ms, cudaFree 1.34566 ms

So I am wondering whether there is something wrong with my setup or whether others see the same results.

Furthermore, does anybody have an idea how memory for the iGPU could be allocated faster on the DriveAGX?
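One general way to sidestep the allocation cost, regardless of why it is slow, is to keep freed buffers around and reuse them instead of calling the allocator in the hot path. Below is a minimal sketch of that idea, not a tested fix for the DriveAGX. `CachingPool` and its methods are hypothetical names (not an NVIDIA API), and the allocator is passed in as a pair of callables so the same code works with `std::malloc`/`std::free` or with thin wrappers around `cudaMalloc`/`cudaFree`.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdlib>
#include <functional>
#include <map>
#include <utility>

// Hypothetical caching allocator (illustration only, not an NVIDIA API).
// Recycled blocks are kept in a size-keyed free list and handed out again,
// so the underlying allocator is only called on a cache miss.
class CachingPool {
public:
    using AllocFn = std::function<void*(std::size_t)>;
    using FreeFn = std::function<void(void*)>;

    CachingPool(AllocFn alloc, FreeFn release)
        : alloc_(std::move(alloc)), release_(std::move(release)) {}

    // Returns all cached blocks to the underlying allocator.
    ~CachingPool() {
        for (auto& entry : cache_) release_(entry.second);
    }

    CachingPool(const CachingPool&) = delete;
    CachingPool& operator=(const CachingPool&) = delete;

    // Returns a cached block of exactly `bytes` if one exists,
    // otherwise falls back to the underlying allocator.
    void* acquire(std::size_t bytes) {
        auto it = cache_.find(bytes);
        if (it != cache_.end()) {
            void* p = it->second;
            cache_.erase(it);
            return p;
        }
        return alloc_(bytes);
    }

    // Puts the block back into the cache instead of freeing it.
    void recycle(void* p, std::size_t bytes) {
        assert(p != nullptr);
        cache_.emplace(bytes, p);
    }

private:
    AllocFn alloc_;
    FreeFn release_;
    std::multimap<std::size_t, void*> cache_;  // size -> free block
};
```

In the test program above, `acquire` and `recycle` would replace the `cudaMalloc` and `cudaFree` calls inside the loop, so only the first iteration pays the allocation cost. The trade-off is that cached memory stays reserved until the pool is destroyed, and the sketch is not thread-safe.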