Hello!

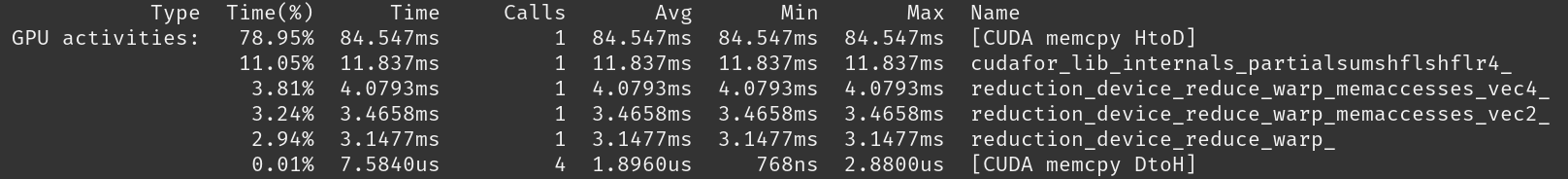

Context: As a deep-dive learning exercise, I’ve been continually optimizing a reduction_sum kernel for arrays of floats. My fastest implementation uses register shuffles and atomicAdds, and is about 3.5x faster than the GPU-overloaded sum(devArr) intrinsic function:

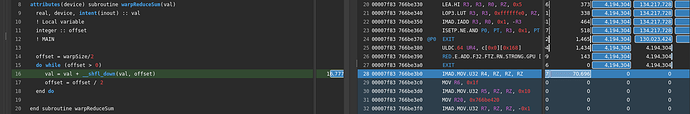

Nsight-Compute profiling tells me that the current bottleneck in my kernel is waiting for data to be fetched from global memory; I’ve identified the exact source line responsible. The only thing left I can think of (other than hand-writing PTX) is implementing vectorized float2/float4 loads, which are easy in CUDA C++.
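For reference, here is a minimal sketch of the CUDA C++ pattern meant by "vectorized loads", combined with the shuffle-plus-atomicAdd reduction described above. The names reduce_sum_vec4, warp_reduce_sum, and device_sum are hypothetical, as are the launch dimensions; this is an illustration of the technique, not the Fortran kernel being profiled. It assumes the input pointer is 16-byte aligned (which holds for memory returned by cudaMalloc) and a block size that is a multiple of 32. Error checking is omitted for brevity.

```cuda
#include <cuda_runtime.h>
#include <vector>

// Sum the values held by the 32 lanes of a warp using register shuffles.
__inline__ __device__ float warp_reduce_sum(float v) {
    for (int offset = warpSize / 2; offset > 0; offset /= 2)
        v += __shfl_down_sync(0xffffffffu, v, offset);
    return v;  // lane 0 holds the warp total
}

// Hypothetical kernel: grid-stride sum that reads four floats per load.
// Assumes `in` is 16-byte aligned and blockDim.x is a multiple of 32.
__global__ void reduce_sum_vec4(const float* __restrict__ in, float* out, size_t n) {
    const size_t tid    = blockIdx.x * (size_t)blockDim.x + threadIdx.x;
    const size_t stride = (size_t)gridDim.x * blockDim.x;
    const size_t n4     = n / 4;  // number of complete float4 groups
    const float4* in4   = reinterpret_cast<const float4*>(in);

    float acc = 0.0f;
    for (size_t i = tid; i < n4; i += stride) {
        float4 v = in4[i];  // a single 128-bit load request per thread
        acc += (v.x + v.y) + (v.z + v.w);
    }
    // Scalar loop for the 0 to 3 elements left after the float4 groups.
    for (size_t i = 4 * n4 + tid; i < n; i += stride)
        acc += in[i];

    acc = warp_reduce_sum(acc);
    if ((threadIdx.x & (warpSize - 1)) == 0)
        atomicAdd(out, acc);  // one atomic per warp
}

// Hypothetical host wrapper: copies the data over, launches, returns the sum.
float device_sum(const std::vector<float>& h) {
    float* d_in  = nullptr;
    float* d_out = nullptr;
    cudaMalloc(&d_in, h.size() * sizeof(float));
    cudaMalloc(&d_out, sizeof(float));
    cudaMemcpy(d_in, h.data(), h.size() * sizeof(float), cudaMemcpyHostToDevice);
    cudaMemset(d_out, 0, sizeof(float));

    reduce_sum_vec4<<<64, 256>>>(d_in, d_out, h.size());

    float result = 0.0f;
    cudaMemcpy(&result, d_out, sizeof(float), cudaMemcpyDeviceToHost);
    cudaFree(d_in);
    cudaFree(d_out);
    return result;
}
```

Adjacent threads read adjacent float4 elements here, so each warp still touches one contiguous span of memory. That is the access pattern I would like to reproduce from Fortran.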

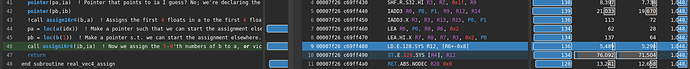

Fortran doesn’t have the int2, int4, float2, or float4 data types that CUDA C++ does. Regardless, I would like to get the benefits of vectorized loads. My hope was that if I declared a two-element array twoint(2), the compiler would generate a vectorized load for an assignment like

twoint(:) = global_arr(idx:idx+1)

However, doing this doesn’t seem to yield a speedup on my GTX 1650 (which, to be fair, might not support vectorized loads; I’m not sure where to look for that info). According to Nsight-Compute, I actually seem to ruin the coalesced access to the global array data, and I get a slight performance hit.

So my question is: how would one go about ensuring the compiler performs vectorized loads (if that is possible)? Would I have to write the PTX for the vectorized loads manually? If so, what would that look like? An example in a CUDA Fortran kernel would be nice.

Something that may be helpful is that Fortran does have the transfer() function, which plays the role of reinterpret_cast<>. But I’m not sure how to use it in this regard.