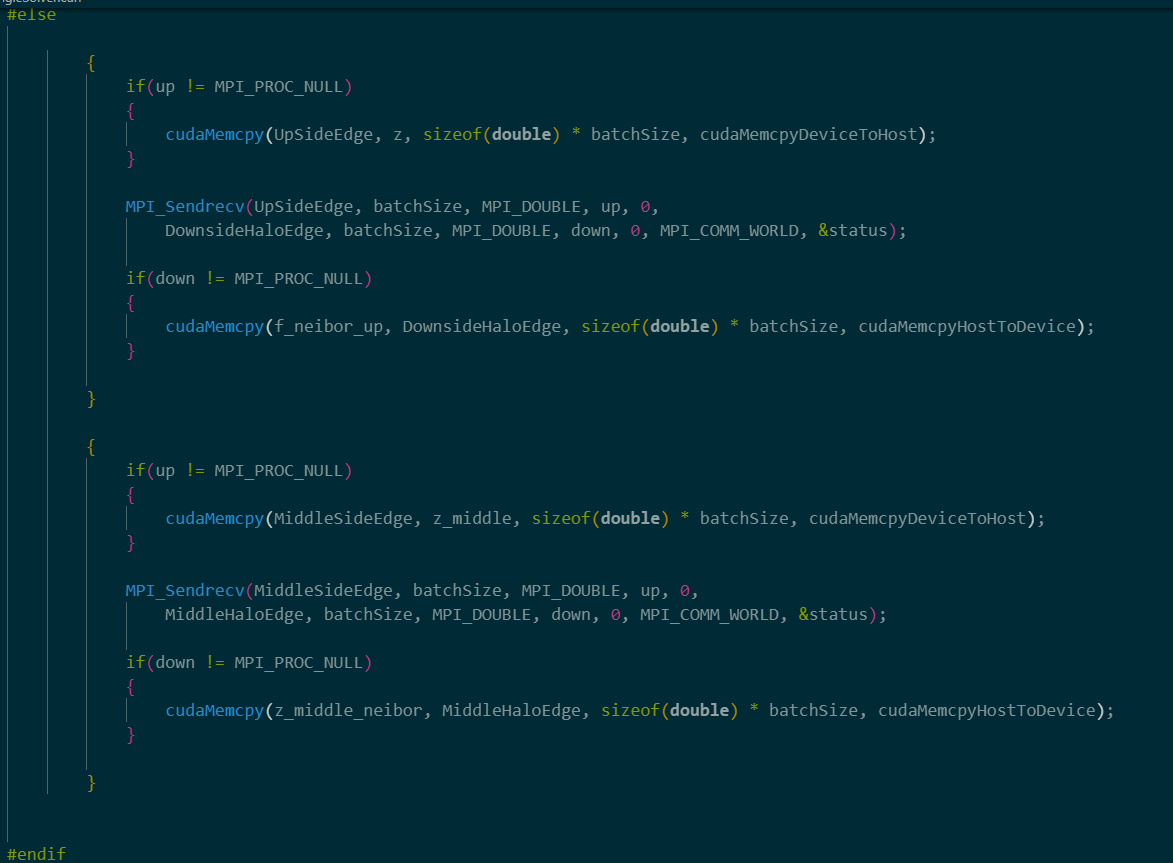

Why is MPI multi-card communication much slower when I call it from inside a function than when I call it directly in the main function? The code for the MPI multi-card communication is in the attached image.

Please don’t post text as pictures on this forum.