I have been running some kernel tests on the Jetson Xavier platform and comparing the results with the Jetson TX2 and a GTX 1050. Across a large number of kernels, the Xavier's performance is far closer to the GTX 1050 than to the TX2. However, I have found that for one particular configuration, one of the kernels hits a memory throttle and its throughput falls dramatically compared with the other platforms.

The kernel is proprietary, so I cannot post it, but I'll try to explain the access pattern and also write some equivalent code that exhibits the same behaviour and that I can post.

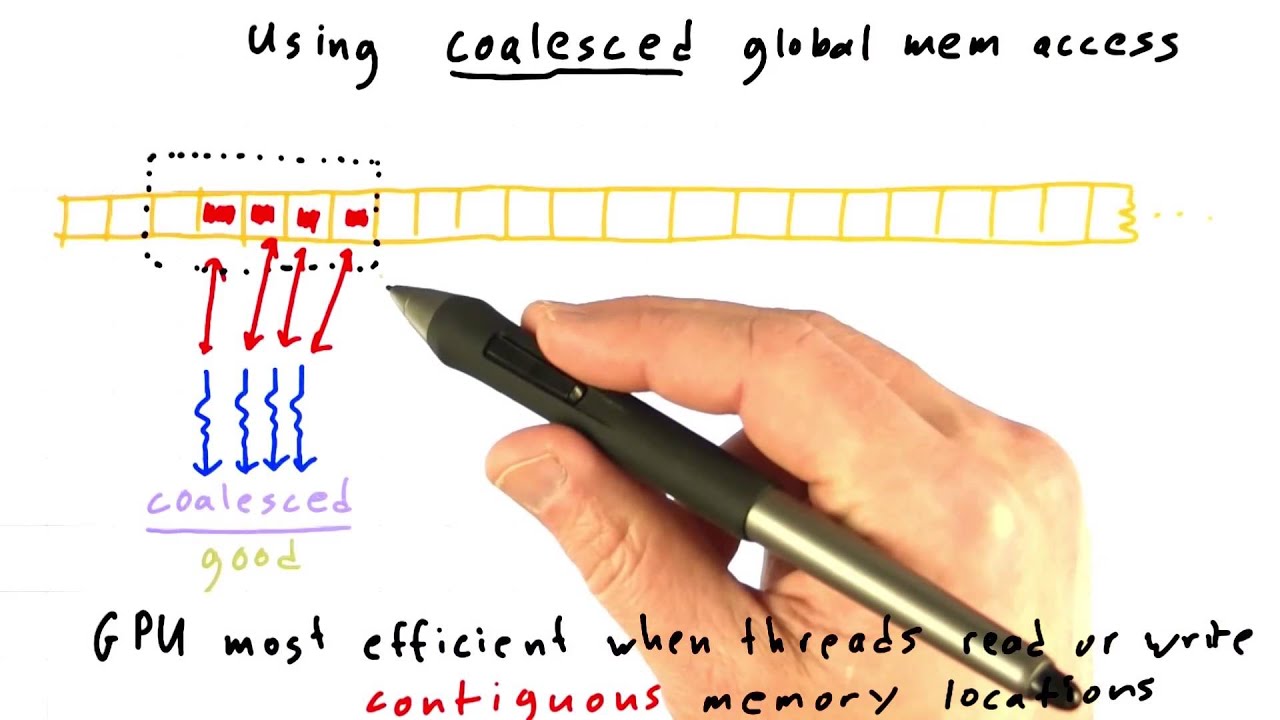

The problem arises when the threads of a warp are split into groups, where each group reads aligned, coalesced memory but every group reads from a different location.

One example uses groups of 8 threads, but it also occurs with groups of 16 threads:

| Threads | Bytes read per thread | Memory address |
|---------|-----------------------|----------------|
| 0-7     | 8                     | addr_a         |
| 8-15    | 8                     | addr_b         |
| 16-23   | 8                     | addr_c         |
| 24-31   | 8                     | addr_d         |

Each addr_x is 64-byte aligned, and on each iteration of the kernel's inner loop every group moves forward through its own address range. So in this example, each group of 8 threads performs a coalesced 64-byte read.
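A minimal sketch of a kernel with this access pattern might look like the following. It is not the proprietary kernel: `groupedRead`, `elementIndex`, `launchGroupedRead`, `GROUP_SIZE` and `streamLen` are hypothetical names, and the sketch assumes 8-byte (`uint64_t`) reads, with each group of lanes owning one independent, contiguous stream that it walks through 64 bytes per iteration.

```cuda
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cuda_runtime.h>

// Hypothetical parameters: GROUP_SIZE consecutive lanes share one stream.
constexpr int WARP_SIZE = 32;
constexpr int GROUP_SIZE = 8;                            // 8 threads x 8 bytes = 64 bytes per group
constexpr int GROUPS_PER_WARP = WARP_SIZE / GROUP_SIZE;  // 4 streams (addr_a..addr_d) per warp

// Index (in uint64_t elements) read by `lane` of global warp `warp` on
// inner-loop iteration `iter`. Each group owns a contiguous stream of
// `streamLen` elements; within a group the lanes read consecutive elements.
__host__ __device__ inline size_t elementIndex(int warp, int lane, int iter, size_t streamLen) {
    const int group = lane / GROUP_SIZE;        // which of addr_a..addr_d
    const int laneInGroup = lane % GROUP_SIZE;  // position inside the group's segment
    const size_t stream = static_cast<size_t>(warp) * GROUPS_PER_WARP + group;
    return stream * streamLen + static_cast<size_t>(iter) * GROUP_SIZE + laneInGroup;
}

__global__ void groupedRead(const uint64_t* __restrict__ in, uint64_t* __restrict__ out,
                            int iters, size_t streamLen) {
    const int tid = blockIdx.x * blockDim.x + threadIdx.x;
    const int lane = threadIdx.x % WARP_SIZE;
    const int warp = tid / WARP_SIZE;
    uint64_t acc = 0;
    for (int i = 0; i < iters; ++i) {
        // Each group of lanes issues one aligned, coalesced read, but the
        // groups within the warp hit unrelated addresses.
        acc += in[elementIndex(warp, lane, i, streamLen)];
    }
    out[tid] = acc;  // store the sum so the loads are not optimised away
}

// Hypothetical launch helper. `in` must hold (totalThreads / GROUP_SIZE) * streamLen
// elements and `out` must hold totalThreads elements. streamLen must be a multiple
// of GROUP_SIZE so every stream starts on a 64-byte boundary (cudaMalloc memory is
// at least 256-byte aligned). dynSmemBytes is optional dynamic shared memory.
cudaError_t launchGroupedRead(const uint64_t* in, uint64_t* out, int blocks, int threadsPerBlock,
                              int iters, size_t streamLen, size_t dynSmemBytes = 0) {
    assert(threadsPerBlock % WARP_SIZE == 0);
    assert(streamLen % GROUP_SIZE == 0);
    assert(streamLen >= static_cast<size_t>(iters) * GROUP_SIZE);
    groupedRead<<<blocks, threadsPerBlock, dynSmemBytes>>>(in, out, iters, streamLen);
    return cudaGetLastError();
}
```

Setting `GROUP_SIZE` to 16 gives the 16-thread variant, where each group reads 128 bytes per iteration. Keeping the address arithmetic in a `__host__ __device__` helper makes it easy to check the mapping on the CPU before timing anything on the device.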

The problem arises specifically when 8 blocks are running concurrently on each SM. I can also reproduce it with 4-byte reads per thread, but then it requires 16 blocks running concurrently on each SM.
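To pin the number of resident blocks per SM to exactly 8 (or 16), one common approach is to pad each block with unused dynamic shared memory until the occupancy calculator reports the desired count. The sketch below assumes that approach; `smemForBlocksPerSM` and `residentBlocksPerSM` are hypothetical helpers, and only `cudaOccupancyMaxActiveBlocksPerMultiprocessor` is a real CUDA runtime call. Register usage, block size or shared-memory allocation granularity may make a given target unreachable, in which case the search returns -1.

```cuda
#include <cassert>
#include <cstddef>
#include <cuda_runtime.h>

// Search dynamic shared-memory sizes 0, step, 2*step, ... up to maxSmem and return
// the smallest one for which `query(smem)` reports exactly `targetBlocks` resident
// blocks per SM. Returns -1 if occupancy is already below the target or a step jumps
// over it. `query` is any callable size_t -> int, so the search can be tested on the host.
template <typename Query>
long smemForBlocksPerSM(Query query, int targetBlocks, size_t maxSmem, size_t step) {
    assert(step > 0);  // a zero step would never terminate
    for (size_t smem = 0; smem <= maxSmem; smem += step) {
        const int blocks = query(smem);
        if (blocks == targetBlocks) return static_cast<long>(smem);
        if (blocks < targetBlocks) return -1;  // overshot the target (or the query failed)
    }
    return -1;
}

// Occupancy query backed by the CUDA runtime's occupancy calculator.
// Returns -1 if the runtime call fails.
template <typename Kernel>
int residentBlocksPerSM(Kernel kernel, int blockSize, size_t dynSmemBytes) {
    int blocks = 0;
    if (cudaOccupancyMaxActiveBlocksPerMultiprocessor(&blocks, kernel, blockSize, dynSmemBytes) !=
        cudaSuccess)
        return -1;
    return blocks;
}
```

For a hypothetical kernel `myKernel` with 256-thread blocks, the query could be passed as `[&](size_t s) { return residentBlocksPerSM(myKernel, 256, s); }` with `maxSmem = 48 * 1024`, and the kernel then launched with that much dynamic shared memory and a grid of (SM count × target) blocks. The 48 KB bound is the default per-block dynamic shared-memory limit; going beyond it requires `cudaFuncSetAttribute` with `cudaFuncAttributeMaxDynamicSharedMemorySize`.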

Are there any known memory issues on Xavier that cause a memory throttle? Any help would be much appreciated.