As follows… my evaluation results show high precision.

How did you “extrapolate later”? Can you share the command and full log?

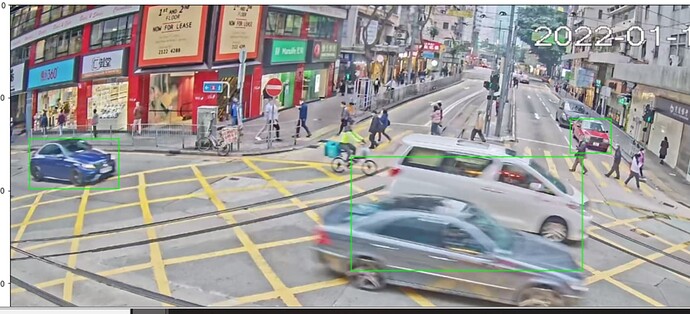

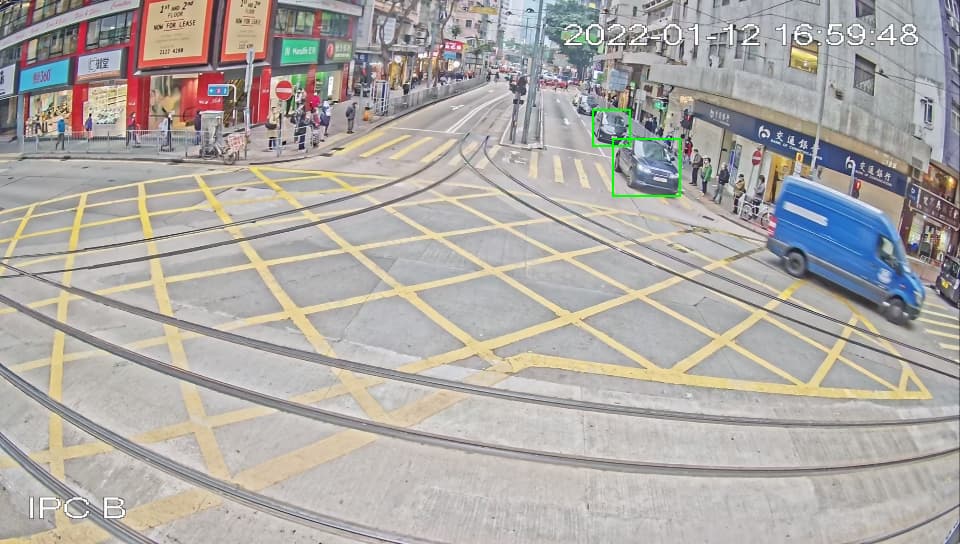

My friend told me that after deploying the model and actually running it, and from what I saw in the Visualize inferences results, it does not detect cars with high accuracy…

detectnet_v2_car.ipynb (1.2 MB)

From your .ipynb file, the mAP is above 92%.

When you run below

Can you share your $SPECS_DIR/detectnet_v2_inference_kitti_tlt.txt?

detectnet_v2_car.ipynb (1.2 MB)

This… and my friend told me that after deploying the model and actually running it, it also cannot reach a 90% result…

Your latest .ipynb shows 93% mAP.

I am afraid there is something wrong in your $SPECS_DIR/detectnet_v2_inference_kitti_tlt.txt.

Please share it with us, along with one inferenced image. Thanks.

Can you share an inferenced image?

Please resize to 960x544 and retry.

Oh, the camera has a resolution of 1080p. Do I need to modify the training profile, or the inference file (like detectnet_v2_inference_kitti_tlt.txt)?

You have trained a 960x544 model. As mentioned above, try running tao inference after resizing the test images.

Or you can run it with DeepStream. You do not need to resize the images yourself when running in DeepStream.
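If you resize 1080p camera frames to 960x544 before inference, the predicted boxes are in the resized coordinates. Below is a minimal sketch of mapping a box back to the original frame. The helper name `scale_box` and the `(x1, y1, x2, y2)` box layout are assumptions for illustration, not part of TAO. Note that 1920x1080 and 960x544 have slightly different aspect ratios, so the horizontal and vertical scale factors differ a little.

```python
# Hypothetical helper (not a TAO API): map a box predicted on the resized
# 960x544 image back to the original 1920x1080 camera frame.
MODEL_W, MODEL_H = 960, 544      # resolution the model was trained at
FRAME_W, FRAME_H = 1920, 1080    # camera resolution (1080p)


def scale_box(box, src=(MODEL_W, MODEL_H), dst=(FRAME_W, FRAME_H)):
    """Scale an (x1, y1, x2, y2) box from src resolution to dst resolution."""
    sx = dst[0] / src[0]  # horizontal scale factor
    sy = dst[1] / src[1]  # vertical scale factor (differs slightly from sx here)
    x1, y1, x2, y2 = box
    return (x1 * sx, y1 * sy, x2 * sx, y2 * sy)
```

For example, `scale_box((0, 0, 960, 544))` covers the whole 1080p frame.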

Thanks a lot! But I resized the images and they seem to show a similar result, which is not good… Is my model overfitting?

Firstly, you can run inference against some training images to double check.

Then, please check the difference between training images and test images.
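To make the first check easy to repeat, you could copy a small random subset of the training images into a separate folder and point inference at it. This is only a sketch: the function name `sample_training_images`, the folder arguments, and the list of image extensions are assumptions, so adapt them to your dataset layout.

```python
import random
import shutil
from pathlib import Path


def sample_training_images(train_dir, out_dir, n=20, seed=0):
    """Copy up to n randomly chosen images from train_dir into out_dir.

    Returns the file names that were copied.
    """
    exts = {".jpg", ".jpeg", ".png"}  # assumed image extensions
    images = sorted(
        p for p in Path(train_dir).iterdir() if p.suffix.lower() in exts
    )
    rng = random.Random(seed)  # fixed seed so the sample is reproducible
    chosen = rng.sample(images, min(n, len(images)))
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    for p in chosen:
        shutil.copy2(p, out / p.name)
    return [p.name for p in chosen]
```

If detections look good on this subset but poor on the camera images, the gap is more likely a difference between training and test data than a problem with the inference setup.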

Previously, the log showed “Found 761 samples in training set”.

Try adding more training data. It is better to train on images that are similar to the test images.
