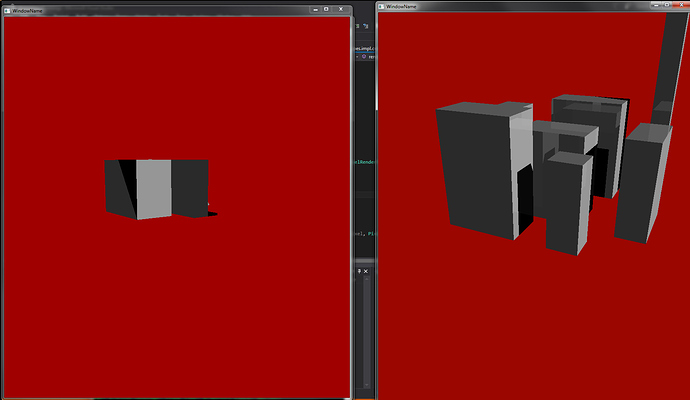

I'm writing a simple ray tracer and ran into a strange issue while testing. For some reason, I decided to switch to Debug mode (in VS 2015), and the output picture became really glitchy and nothing like what I get in Release mode. The Release build gives me a half-decent image, while the Debug build generates something that barely resembles the shapes it is supposed to show, with a lot of missing parts and no reflections. Is there any difference in floating-point arithmetic, or anything like that, between the two modes?

The part of the code that is probably "misbehaving" is this:

    template<typename HitType>
    struct PixelRenderFrame {
        Photon photon;
        typename ShadedOctree<HitType>::RaycastHit hit;
        ColorRGB color;
        ShaderBounce bounce;
        int bounceId;
    };

    template<typename HitType, unsigned int MaxStackSize>
    struct PixelRenderProcess {
        SceneDataHandles<HitType> world;
        PixelRenderFrame<HitType> stack[MaxStackSize];
        PixelRenderFrame<HitType> *end;
        PixelRenderFrame<HitType> *ptr;
        Pixel::PixelColor output;
        bool renderComplete;

        bool shadeStarted;
        bool midShade;
        Photon savedPhoton;
        int lightId;
        int castsLeft;

        __device__ __host__ inline bool shade() {
            PixelRenderFrame<HitType> &frame = (*ptr);
            if (!shadeStarted) {
                if (castsLeft <= 0) {
                    midShade = true;
                    return true;
                }
                else {
                    midShade = false;
                    shadeStarted = true;
                    castsLeft--;
                }
                if (frame.photon.dead()) return false;
                if (!world.world->cast(frame.photon.ray, frame.hit, false)) return false;
                frame.color(0.0f, 0.0f, 0.0f);

                // BOUNCE FROM INDIRECT ILLUMINATION:
                if ((ptr + 1) != end) {
                    ShaderBounceInfo<HitType> bounceInfo = { frame.hit.object.object, frame.photon, frame.hit.hitPoint };
                    frame.hit.object.material->bounce(bounceInfo, &frame.bounce);
                }
                else frame.bounce.count = 0;
                frame.bounceId = 0;
            }

            // COLOR FROM LIGHT SOURCES:
            const Stacktor<Light> &lights = (*world.lights);
            for (int i = lightId; i < lights.size(); i++) {
                bool noShadows;
                Photon p;
                if (midShade) {
                    noShadows = false;
                    p = savedPhoton;
                }
                else {
                    noShadows = false;
                    p = lights[i].getPhoton(frame.hit.hitPoint, &noShadows);
                    if (p.dead()) continue;
                }
                if (!noShadows) {
                    if (castsLeft > 0) {
                        typename ShadedOctree<HitType>::RaycastHit lightHit;
                        if (world.world->cast(p.ray, lightHit, false)) {
                            if ((frame.hit.hitPoint - lightHit.hitPoint).sqrMagnitude() <= 128.0f * VECTOR_EPSILON)
                                noShadows = true;
                        }
                        else noShadows = true;
                        midShade = false;
                        castsLeft--;
                    }
                    else {
                        midShade = true;
                        savedPhoton = p;
                        lightId = i;
                        return true;
                    }
                }
                if (noShadows) {
                    ShaderHitInfo<HitType> castInfo = { frame.hit.object.object, p, frame.hit.hitPoint, frame.photon.ray.origin };
                    frame.color += frame.hit.object.material->illuminate(castInfo).color;
                }
            }
            frame.color *= frame.photon.color;
            shadeStarted = false;
            midShade = false;
            lightId = 0;
            return true;
        }

        __device__ __host__ inline bool iterate() {
            while (true) {
                if (midShade || (ptr->bounceId < ptr->bounce.count)) {
                    if (!midShade) {
                        Photon sample = ptr->bounce.samples[ptr->bounceId];
                        sample.ray.origin += sample.ray.direction * (128.0f * VECTOR_EPSILON);
                        ptr->bounceId++;
                        ptr++;
                        ptr->photon = sample;
                    }
                    if (!shade()) {
                        if (ptr != stack) ptr--;
                        else {
                            output.depth = -1;
                            renderComplete = true;
                            return true;
                        }
                    }
                    else if (midShade) return false;
                }
                else if (ptr == stack) {
                    output.color = ptr->color;
                    output.depth = ptr->hit.hitDistance;
                    renderComplete = true;
                    return true;
                }
                else {
                    ColorRGB col = ptr->color;
                    ptr--;
                    ptr->color += col;
                }
            }
        }

        __device__ __host__ inline void setup(const Photon &photon, const SceneDataHandles<HitType> &world) {
            this->world = world;
            end = (stack + MaxStackSize);
            ptr = stack;
            ptr->photon = photon;
            shadeStarted = false;
            midShade = true;
            lightId = 0;
            renderComplete = false;
            castsLeft = 0;
        }
    };

    // Code below is temporary and will be changed in the future
    template<typename HitType, unsigned int MaxStackSize>
    __device__ __host__ inline static Pixel::PixelColor renderPixel(const Photon &photon, const SceneDataHandles<HitType> &world, PixelRenderProcess<HitType, MaxStackSize> *stack) {
        PixelRenderProcess<HitType, MaxStackSize> pixel;
        pixel.setup(photon, world);
        while (!pixel.renderComplete) {
            pixel.castsLeft = 1; // this variable will be used to limit raycasts per kernel in the future, to prevent crashes.
            pixel.iterate();
        }
        return pixel.output;
    }
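
To make the `castsLeft` mechanism easier to follow, here is a minimal sketch of the same resumable, budget-limited pattern in plain host-side C++. It is not the ray tracer itself: `BudgetedSum` and `runBudgeted` are hypothetical names made up for illustration, and each addition stands in for one raycast. The idea it tries to show is that all progress lives in member variables, so `iterate()` can stop when the budget runs out and pick up where it left off on the next call.

```cpp
// Hypothetical toy stand-in for PixelRenderProcess: sums the integers 1..n,
// where each addition plays the role of one "raycast".
struct BudgetedSum {
    int next = 1;          // resume point (plays the role of lightId / ptr)
    int limit = 0;         // last value to add
    long total = 0;        // accumulated result (plays the role of the color)
    int castsLeft = 0;     // work units still allowed in the current call
    bool complete = false; // set once all work is done (like renderComplete)

    void setup(int n) {
        next = 1;
        limit = n;
        total = 0;
        castsLeft = 0;
        complete = false;
    }

    // Does as much work as the budget allows.
    // Returns true when finished, false when it ran out of budget.
    bool iterate() {
        while (next <= limit) {
            if (castsLeft <= 0) return false; // pause; state stays in the members
            total += next;
            ++next;
            --castsLeft;
        }
        complete = true;
        return true;
    }
};

// Driver mirroring renderPixel: refill the budget, then resume until done.
// callsOut (optional) receives the number of iterate() calls that were needed.
inline long runBudgeted(int n, int budgetPerCall, int *callsOut) {
    BudgetedSum job;
    job.setup(n);
    int calls = 0;
    while (!job.complete) {
        job.castsLeft = budgetPerCall;
        job.iterate();
        ++calls;
    }
    if (callsOut) *callsOut = calls;
    return job.total;
}
```

Calling `runBudgeted(10, 1, &calls)` takes one unit of work per call, the same way `renderPixel` sets `castsLeft = 1` before each `iterate()`. A larger budget only reduces the number of calls; the result should not change.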