• Hardware Platform (Jetson / GPU) : NVIDIA Jetson AGX Orin

• DeepStream Version : 7.1

• JetPack Version (valid for Jetson only) : 6.1

• TensorRT Version : 8.6.2.3

• Issue Type (questions, new requirements, bugs) : question

Hello,

I have a DeepStream pipeline structured as follows (refer to the attached diagram):

Brief overview:

• Source: A camera feed.

• File Recording Pipelines (Top 2 Pipelines):

- First pipeline: Saves video files in H.264 format.

- Second pipeline: Saves video files in H.265 format.

• Inference Pipelines (Bottom 2 Pipelines):

- These pipelines perform real-time inference on the camera stream.

Issues Encountered:

1. Delayed File Splitting in the H.264 and H.265 Pipelines

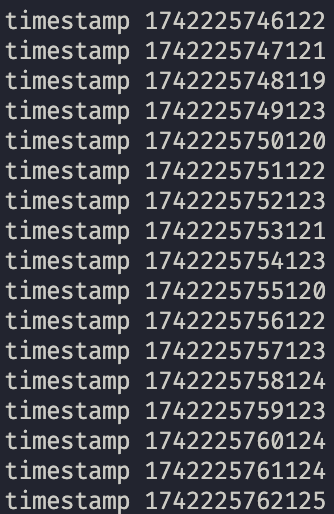

The pipeline is split into four branches using a tee element. The H.265 branch is connected to an appsink, where a Python function starts a new file every 1 second (similar to splitmuxsink, but with custom logic). However, when the inference pipelines are active, the expected 1-second splitting interval is disrupted and the splits are delayed. If I disable both inference pipelines, file splitting works correctly without any delay. Here are the time logs, in milliseconds:

No delay (no inference pipelines):

Delay with inference pipelines connected:
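For reference, here is a simplified, hypothetical sketch of the kind of splitting decision such an appsink callback can make (not my exact code). It assumes the decision is based on buffer PTS and that new files start only on keyframes; `SegmentSplitter` and `should_split` are illustrative names. Because it measures media time rather than wall-clock time, the split points stay at least 1 second apart in the recorded stream (rounded up to the next keyframe) even if buffers reach the appsink late, whereas a wall-clock-based splitter would drift whenever delivery is delayed.

```python
from __future__ import annotations

NS_PER_SECOND = 1_000_000_000  # GStreamer timestamps are in nanoseconds


class SegmentSplitter:
    """Hypothetical helper: decides when a new output file should start, based on buffer PTS."""

    def __init__(self, segment_ns: int = NS_PER_SECOND) -> None:
        self.segment_ns = segment_ns
        self.segment_start: int | None = None  # PTS of the first buffer in the current file

    def should_split(self, pts_ns: int, is_keyframe: bool) -> bool:
        """Return True if this buffer should be the first one of a new file.

        In an appsink "new-sample" callback the inputs could come from
        buffer = sample.get_buffer(): buffer.pts for pts_ns, and
        not buffer.has_flags(Gst.BufferFlags.DELTA_UNIT) for is_keyframe.
        """
        if self.segment_start is None:
            # First buffer ever: it opens the first file, so there is nothing to split.
            self.segment_start = pts_ns
            return False
        # Split only on keyframes so that every file starts with a decodable frame.
        if is_keyframe and pts_ns - self.segment_start >= self.segment_ns:
            self.segment_start = pts_ns
            return True
        return False


if __name__ == "__main__":
    splitter = SegmentSplitter()
    frames = [(0, True), (500_000_000, False), (1_000_000_000, True), (1_500_000_000, True)]
    print([splitter.should_split(pts, kf) for pts, kf in frames])  # [False, False, True, False]
```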

Questions:

- Pipeline Synchronization: Does DeepStream require all branches of a tee element to reach their respective sinks before proceeding to the next frame, or does the tee distribute frames independently to each branch? I would like each branch leaving the tee to run independently of any other branch I add to the pipeline.

- Avoiding Bottlenecks: How can I prevent the H.264/H.265 pipelines from waiting for the inference pipelines to finish before continuing with the next frame?
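As background for these questions: as far as I understand, GStreamer's tee pushes each buffer to its source pads one after another on the upstream streaming thread, so a branch that does not start with a queue can hold up the others. Below is a minimal sketch of the layout usually recommended for that, assuming every branch starts with its own queue and the inference branches use leaky queues. The function name, the branch names, the element chains and the `max-size-buffers=4` value are hypothetical placeholders, not my actual pipeline.

```python
from __future__ import annotations


def build_tee_pipeline(source: str, branches: list[tuple[str, str, bool]]) -> str:
    """Build a gst-launch style description in which every tee branch starts
    with its own queue, so each branch gets its own streaming thread.

    branches: (name, element_chain, leaky) tuples. A leaky queue drops its
    oldest buffers when full instead of blocking the tee.
    """
    parts = [f"{source} ! tee name=t"]
    for name, chain, leaky in branches:
        queue = f"queue name=q_{name}"
        if leaky:
            # Limit by buffer count only, and drop old buffers when the limit is hit.
            queue += " leaky=downstream max-size-buffers=4 max-size-bytes=0 max-size-time=0"
        parts.append(f"t. ! {queue} ! {chain}")
    return " ".join(parts)


if __name__ == "__main__":
    # Placeholder chains: a real DeepStream inference branch would also need
    # nvstreammux, nvinfer, etc. The string could be passed to Gst.parse_launch()
    # (requires PyGObject).
    print(build_tee_pipeline(
        "v4l2src ! nvvideoconvert",
        [
            ("h264", "nvv4l2h264enc ! h264parse ! appsink name=h264_sink", False),
            ("h265", "nvv4l2h265enc ! h265parse ! appsink name=h265_sink", False),
            ("infer", "fakesink name=infer_sink", True),
            ("arcing", "fakesink name=arcing_sink", True),
        ],
    ))
```

The recording branches use non-leaky queues so that no encoded frames are lost, while the inference branches are allowed to drop frames instead of back-pressuring the tee.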

2. Dynamically Enabling/Disabling Inference Pipelines

I need to dynamically enable or disable one of the inference pipelines at runtime. For example, at certain times only the bottom inference pipeline should run, and the other inference pipeline (called “arcing”) should be turned off, meaning no data flows through it. To achieve this, I added a valve element at the start of each inference pipeline.

Problem: When drop=true is set on either valve, it unexpectedly disrupts the file-saving logic in the H.264/H.265 pipelines as well as the other inference pipeline. It appears that the drop setting affects not only the elements downstream of the valve but possibly the upstream ones as well.

Questions:

- Why does the valve element seem to affect upstream elements? Does it actually affect them or not?

- Is there an alternative element or method I can use at the beginning of each inference pipeline to enable or disable it dynamically without interfering with other parts of the pipeline?

Example use case: the first inference pipeline runs for 10 minutes while the second is disabled; for the next 10 minutes the roles are swapped, and the cycle repeats.
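The switching schedule from this use case can be sketched as a pure function that maps elapsed time to the `drop` value of each valve. The valve names `valve_infer_1` and `valve_infer_2` and the function name are hypothetical. In the application, a periodic GLib timer (for example `GLib.timeout_add_seconds`) could read these values and apply them with `valve.set_property("drop", value)` on elements looked up via `pipeline.get_by_name(...)`. This only covers the scheduling; it does not explain why drop=true disturbs the other branches.

```python
from __future__ import annotations

PERIOD_S = 10 * 60  # length of each slot in the use case (10 minutes)


def valve_drop_states(elapsed_s: float, period_s: int = PERIOD_S) -> dict[str, bool]:
    """Return the desired 'drop' value for each (hypothetical) inference valve.

    Even slots: the first inference branch runs and the second is dropped.
    Odd slots: the roles are swapped.
    """
    if elapsed_s < 0:
        raise ValueError("elapsed_s must be non-negative")
    slot = int(elapsed_s // period_s)
    first_active = slot % 2 == 0
    return {
        "valve_infer_1": not first_active,  # drop=True means no data flows through
        "valve_infer_2": first_active,
    }


if __name__ == "__main__":
    for t in (0, 599, 600, 1200):
        print(t, valve_drop_states(t))
```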