I'm just starting to learn on the Jetson Nano 2GB, and I'm having issues with the “Hello AI World” tutorial on collecting a custom dataset and re-training ssd-mobilenet on it. Any help would be appreciated!

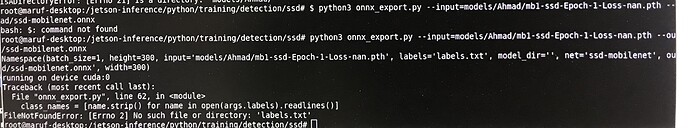

First, I get an Errno 21 (IsADirectoryError) when I try to convert the model to ONNX:

running on device cuda:0

found best checkpoint with loss 10000.000000 ()

creating network: ssd-mobilenet

num classes: 6

loading checkpoint: models/stuff3/

Traceback (most recent call last):

File "onnx_export.py", line 86, in <module>

net.load(args.input)

File "/jetson-inference/python/training/detection/ssd/vision/ssd/ssd.py", line 135, in load

self.load_state_dict(torch.load(model, map_location=lambda storage, loc: storage))

File "/usr/local/lib/python3.6/dist-packages/torch/serialization.py", line 571, in load

with _open_file_like(f, 'rb') as opened_file:

File "/usr/local/lib/python3.6/dist-packages/torch/serialization.py", line 229, in _open_file_like

return _open_file(name_or_buffer, mode)

File "/usr/local/lib/python3.6/dist-packages/torch/serialization.py", line 210, in __init__

super(_open_file, self).__init__(open(name, mode))

IsADirectoryError: [Errno 21] Is a directory: 'models/stuff3/'
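For context, `torch.load` expects a checkpoint file (or file-like object), so handing it the `models/stuff3/` directory itself raises this error. The empty parentheses in the "found best checkpoint" line suggest that no `.pth` file was actually selected, possibly because the only checkpoints in the folder have `nan` in their names. Below is a minimal sketch of how one might pick a usable checkpoint by hand. The helper `find_best_checkpoint` is hypothetical and not part of jetson-inference, and it assumes filenames of the form `mb1-ssd-Epoch-1-Loss-nan.pth` as seen in the training log.

```python
import math
import os
import re

# Hypothetical helper (not part of jetson-inference): choose the checkpoint
# whose filename encodes the lowest finite loss.
# Assumes names like "mb1-ssd-Epoch-1-Loss-nan.pth".
LOSS_PATTERN = re.compile(r"Loss-(?P<loss>[^/]+)\.pth$")


def find_best_checkpoint(model_dir):
    """Return the path of the lowest-loss checkpoint, or None if none is usable."""
    best_path, best_loss = None, math.inf
    for name in sorted(os.listdir(model_dir)):
        match = LOSS_PATTERN.search(name)
        if match is None:
            continue  # not a checkpoint file (e.g. labels.txt)
        try:
            loss = float(match.group("loss"))
        except ValueError:
            continue  # loss field is not a number at all
        if math.isnan(loss):
            continue  # "Loss-nan" checkpoints come from a diverged run
        if loss < best_loss:
            best_path, best_loss = os.path.join(model_dir, name), loss
    return best_path


if __name__ == "__main__":
    model_dir = "models/stuff3"  # directory name taken from the log above
    if os.path.isdir(model_dir):
        print(find_best_checkpoint(model_dir))
```

If this returns `None`, every checkpoint in the folder has a `nan` (or unparseable) loss, which would point to the training run rather than the export step as the thing to fix.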

I can see the directory on the desktop, so I know it exists. I also noticed that when I train on my dataset, the epochs report “nan” losses toward the end of training:

2021-02-21 15:47:45 - Epoch: 1, Validation Loss: nan, Validation Regression Loss nan, Validation Classification Loss: 2.0592

2021-02-21 15:47:45 - Saved model models/stuff3/mb1-ssd-Epoch-1-Loss-nan.pth

2021-02-21 15:47:45 - Task done, exiting program.

Could this be part of the problem? I captured my images with camera-capture and followed the “Training Object Detection Models” video tutorial to make the custom dataset. I had no issues with the earlier exercise of downloading the fruit images from Open Images Dataset V6 and retraining ssd-mobilenet. However, I also notice that the “fruit” data folder contains more files and folders than my custom dataset folder does.
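Since the classification loss is finite while the regression loss is `nan`, one thing that may be worth ruling out is a problem with the bounding boxes themselves. Here is a minimal sketch of a sanity check, assuming the custom dataset stores one Pascal VOC style XML file per image in an `Annotations` folder. The function names and the folder path are hypothetical, and this is only one possible cause among several.

```python
import glob
import os
import xml.etree.ElementTree as ET


def suspect_boxes(xml_source):
    """Return (label, reason) pairs for annotations that look degenerate."""
    root = ET.fromstring(xml_source)
    problems = []
    objects = root.findall("object")
    if not objects:
        problems.append((None, "no objects annotated"))
    for obj in objects:
        label = obj.findtext("name", default="?")
        box = obj.find("bndbox")
        if box is None:
            problems.append((label, "missing bndbox"))
            continue
        try:
            xmin, ymin, xmax, ymax = (
                float(box.findtext(tag)) for tag in ("xmin", "ymin", "xmax", "ymax")
            )
        except (TypeError, ValueError):
            # TypeError: tag missing (findtext returned None); ValueError: not a number
            problems.append((label, "non-numeric coordinate"))
            continue
        if xmax <= xmin or ymax <= ymin:
            problems.append((label, "zero or negative size"))
    return problems


def scan_annotations(annotations_dir):
    """Map each XML file with suspect boxes to its list of problems."""
    report = {}
    for path in sorted(glob.glob(os.path.join(annotations_dir, "*.xml"))):
        with open(path, "r", encoding="utf-8") as handle:
            problems = suspect_boxes(handle.read())
        if problems:
            report[path] = problems
    return report
```

Calling `scan_annotations` on the dataset's `Annotations` folder returns an empty dictionary when every box has positive width and height. Any file it lists would be worth opening and re-labeling before training again.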

I’ve tried disabling the GUI while running both training and the ONNX conversion, and I increased the swap size as recommended. I still get the error when converting to ONNX, and I still get “nan” for the validation loss and regression loss. Can someone point me to what I’m doing wrong?

Thank you!

Paul