Hi Sir,

- Can ./imagenet (imagenet.cpp) be used with /dev/video0 for live detection when it is built outside the jetson-inference directory, with the same contents as the imagenet.cpp file plus a CMakeLists.txt file?

  P.S.: My idea is to run the my-recognition code or imagenet.cpp on the live camera feed, instead of only loading single images such as polar.jpg.

- Is it possible to implement image detection and object detection in C, instead of C++ or Python?
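To make the second question concrete, this is roughly the kind of C-callable layer I have in mind: a C++ class hidden behind extern "C" functions and an opaque handle. It is only a sketch. FakeClassifier and the classifier_* functions are placeholder names made up for illustration; they are not part of jetson-inference, and I have not tried this with the real imageNet class.

```cpp
// Stand-in for a C++ classifier class.
// ASSUMPTION: this is a toy, NOT jetson-inference's imageNet.
class FakeClassifier
{
public:
    // Toy rule: class 1 if the mean pixel value is >= 128, otherwise class 0.
    // The mean scaled to [0, 1] is written to *confidence when it is non-null.
    int Classify(const unsigned char* pixels, int count, float* confidence) const
    {
        long sum = 0;
        for (int i = 0; i < count; i++)
            sum += pixels[i];

        const float mean = (count > 0) ? (float)sum / (float)count : 0.0f;

        if (confidence)
            *confidence = mean / 255.0f;

        return (mean >= 128.0f) ? 1 : 0;
    }
};

// C-callable interface: an opaque handle plus free functions with C linkage.
// All names below are hypothetical.
extern "C" {

typedef struct classifier_handle classifier_handle;

// Allocate the C++ object and hand it out as an opaque pointer.
classifier_handle* classifier_create(void)
{
    return reinterpret_cast<classifier_handle*>(new FakeClassifier());
}

// Returns the class index, or -1 on invalid arguments.
int classifier_classify(classifier_handle* h, const unsigned char* pixels,
                        int count, float* confidence)
{
    if (!h || !pixels)
        return -1;

    return reinterpret_cast<FakeClassifier*>(h)->Classify(pixels, count, confidence);
}

// Free the C++ object behind the handle (passing NULL is harmless).
void classifier_destroy(classifier_handle* h)
{
    delete reinterpret_cast<FakeClassifier*>(h);
}

} // extern "C"
```

A C file would then only need declarations of the three classifier_* functions in a header and would link against the compiled C++ object; the wrapper itself still has to be compiled as C++.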

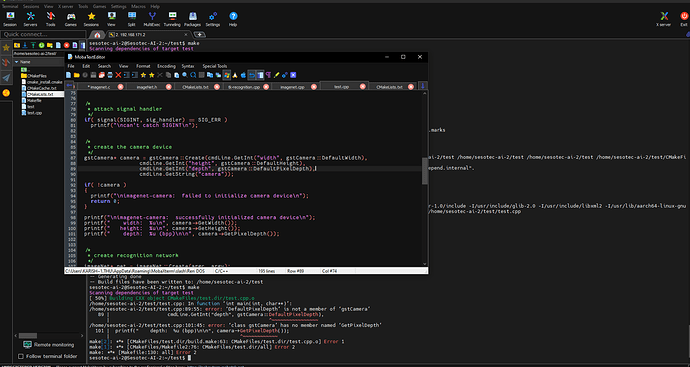

When I follow the steps and the code from this thread, Jetson Nano Object Detection C/C++ Example - #7 by dusty_nv, I run into multiple issues. Could you please help me figure them out?

I ran:

cmake .

make

make VERBOSE=1

At this point the test file contained the code from this thread: Jetson Nano Object Detection C/C++ Example - #9 by dusty_nv

so I removed the line: printf(" depth: %u (bpp)\n \n", camera->GetPixelDepth());

and then, from ~/test, ran:

$ ./test --width=640 --height=480

This is the error I am facing.

Later I changed the test file to this:

    #include "jetson-utils/gstCamera.h"
    #include "jetson-utils/glDisplay.h"

    #include "cudaFont.h"
    #include "jetson-inference/imageNet.h"

    #include "jetson-utils/commandLine.h"
    #include <signal.h>

    #include <jetson-inference/detectNet.h>

    bool signal_recieved = false;

    void sig_handler(int signo)
    {
        if( signo == SIGINT )
        {
            LogVerbose("received SIGINT\n");
            signal_recieved = true;
        }
    }

    int usage()
    {
        printf("usage: imagenet [--help] [--network=NETWORK] ...\n");
        printf(" input_URI [output_URI]\n\n");
        printf("Classify a video/image stream using an image recognition DNN.\n");
        printf("See below for additional arguments that may not be shown above.\n\n");
        printf("positional arguments:\n");
        printf(" input_URI resource URI of input stream (see videoSource below)\n");
        printf(" output_URI resource URI of output stream (see videoOutput below)\n\n");

        printf("%s", imageNet::Usage());
        printf("%s", videoSource::Usage());
        printf("%s", videoOutput::Usage());
        printf("%s", Log::Usage());

        return 0;
    }

    int main( int argc, char** argv )
    {
        /*
         * parse command line
         */
        commandLine cmdLine(argc, argv);

        if( cmdLine.GetFlag("help") )
            return usage();

        /*
         * attach signal handler
         */
        if( signal(SIGINT, sig_handler) == SIG_ERR )
            LogError("can't catch SIGINT\n");

        /*
         * create input stream
         */
        videoSource* input = videoSource::Create(cmdLine, ARG_POSITION(0));

        if( !input )
        {
            LogError("imagenet: failed to create input stream\n");
            return 1;
        }

        /*
         * create output stream
         */
        videoOutput* output = videoOutput::Create(cmdLine, ARG_POSITION(1));

        if( !output )
            LogError("imagenet: failed to create output stream\n");

        /*
         * create font for image overlay
         */
        cudaFont* font = cudaFont::Create();

        if( !font )
        {
            LogError("imagenet: failed to load font for overlay\n");
            return 1;
        }

        /*
         * create recognition network
         */
        imageNet* net = imageNet::Create(cmdLine);

        if( !net )
        {
            LogError("imagenet: failed to initialize imageNet\n");
            return 1;
        }

        /*
         * processing loop
         */
        while( !signal_recieved )
        {
            // capture next image image
            uchar3* image = NULL;

            if( !input->Capture(&image, 1000) )
            {
                // check for EOS
                if( !input->IsStreaming() )
                    break;

                LogError("imagenet: failed to capture next frame\n");
                continue;
            }

            // classify image
            float confidence = 0.0f;
            const int img_class = net->Classify(image, input->GetWidth(), input->GetHeight(), &confidence);

            if( img_class >= 0 )
            {
                LogVerbose("imagenet: %2.5f%% class #%i (%s)\n", confidence * 100.0f, img_class, net->GetClassDesc(img_class));

                // overlay class label onto original image
                char str[256];
                sprintf(str, "%05.2f%% %s", confidence * 100.0f, net->GetClassDesc(img_class));
                font->OverlayText(image, input->GetWidth(), input->GetHeight(),
                                  str, 5, 5, make_float4(255, 255, 255, 255), make_float4(0, 0, 0, 100));
            }

            // render outputs
            if( output != NULL )
            {
                output->Render(image, input->GetWidth(), input->GetHeight());

                // update status bar
                char str[256];
                sprintf(str, "TensorRT %i.%i.%i | %s | Network %.0f FPS", NV_TENSORRT_MAJOR, NV_TENSORRT_MINOR, NV_TENSORRT_PATCH, net->GetNetworkName(), net->GetNetworkFPS());
                output->SetStatus(str);

                // check if the user quit
                if( !output->IsStreaming() )
                    signal_recieved = true;
            }

            // print out timing info
            net->PrintProfilerTimes();
        }

        /*
         * destroy resources
         */
        LogVerbose("imagenet: shutting down...\n");

        SAFE_DELETE(input);
        SAFE_DELETE(output);
        SAFE_DELETE(net);

        LogVerbose("imagenet: shutdown complete.\n");
        return 0;
    }
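For reference, the Ctrl+C shutdown logic in the listing above boils down to something like the following standalone sketch, with no jetson-inference calls. It is my own simplification: the flag is a volatile std::sig_atomic_t instead of a plain bool, and on_sigint, run_loop and the raise_at parameter are hypothetical names used only to simulate Ctrl+C without a keyboard.

```cpp
#include <csignal>

// Flag set by the handler and polled by the loop.
// volatile std::sig_atomic_t is the type the standard guarantees can be
// written safely from inside a signal handler.
static volatile std::sig_atomic_t g_stop = 0;

// Handler only sets the flag; it does no logging or other work.
extern "C" void on_sigint(int signo)
{
    if (signo == SIGINT)
        g_stop = 1;
}

// Polls the flag for at most max_iters iterations and returns how many
// iterations completed, or -1 if the handler could not be installed.
// raise_at (hypothetical test hook) sends SIGINT to this process during
// that iteration; pass -1 to never send it.
int run_loop(int max_iters, int raise_at)
{
    g_stop = 0;

    if (std::signal(SIGINT, on_sigint) == SIG_ERR)
        return -1;

    int done = 0;

    while (!g_stop && done < max_iters)
    {
        // stand-in for capture / classify / render
        if (done == raise_at)
            std::raise(SIGINT);   // handler runs before raise() returns

        done++;
    }

    return done;
}
```

The iteration in which the signal arrives still finishes, and the loop stops at the next check of the flag, which matches how the while( !signal_recieved ) loop in the listing behaves.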

and the CMakeLists.txt file contains the following:

    cmake_minimum_required(VERSION 2.8)

    include_directories(${PROJECT_INCLUDE_DIR} ${PROJECT_INCLUDE_DIR}/jetson-inference ${PROJECT_INCLUDE_DIR}/jetson-utils)
    include_directories(/usr/include/gstreamer-1.0 /usr/lib/aarch64-linux-gnu/gstreamer-1.0/include /usr/include/glib-2.0 /usr/include/libxml2 /usr/lib/aarch64-linux-gnu/glib-2.0/include/ /usr/local/include/jetson-utils)

    # declare my-recognition project
    project(test)

    file(GLOB imagenetCameraSources *.cpp)
    file(GLOB imagenetCameraIncludes *.h )

    find_package(jetson-utils)
    find_package(jetson-inference)
    find_package(CUDA)

    # add directory for libnvbuf-utils to program
    link_directories(/usr/lib/aarch64-linux-gnu/tegra)

    cuda_add_executable(test ${imagenetCameraSources})
    target_link_libraries(test jetson-inference)
    install(TARGETS test DESTINATION bin)

This is what I run through a remote SSH session:

$ ./test --width=640 --height=480 --threshold=0.2

I am totally lost. Kindly excuse my lack of knowledge in this area.

Regards,

Karishma