Hello NVIDIA team,

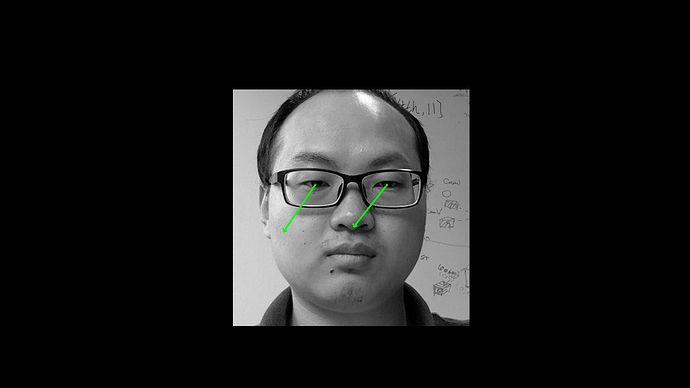

I’m hoping you can help. I would like support testing the deployable model, because the trainable model did not give great results out of the box (I imagine this is because it is the trainable model and requires further training). The results I am referring to are from the images provided by the notebook itself, not my own images. Has anyone else tried this? As a new user I can only attach one image, but 3 of the 5 images were as far off as the attached one.

I can easily choose the deployable model before download, but because the notebook is written specifically for further training, it is not clear to me how to run inference on a specific image (say, one I upload to Jupyter) using the deployable model without further training. I would rather not train, because I want the model to identify a broad range of faces and situations, even if that means lower accuracy.

I am using an NVIDIA image for an Azure VM (ngc_azure_17_11).

Many thanks for your help in advance!

Warmly,

Ale