Hi,

I have read several topics in the forum and used some of the repos from the GitHub. I can’t seem to get anywhere close with the +5 TB/s L2 cache bandwidth values.

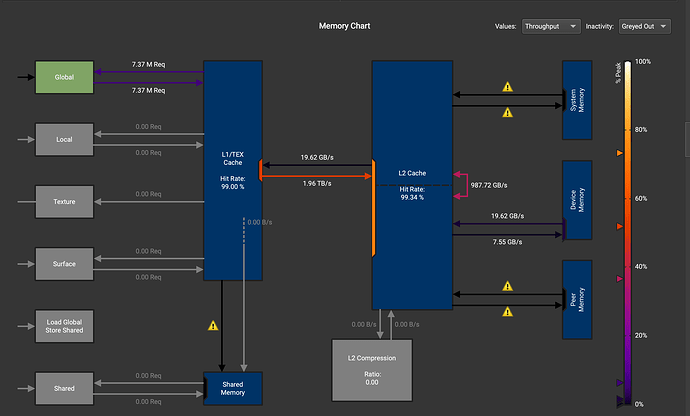

Currently I am using a modified version of the code provided topic here and it was achieving 2.5 TB/s and now in my measurements it is achieving ~3.9 TB/s. Which is still quite low and the size change to 150 MB still provides 3.9 TB/s which shouldn’t be the case since it is greater than L2 cache size for Nvidia A100 ( 40 MB) and it should be DRAM bandwidths around 1.3 TB/s I would expect.

Modified Code:

#include <iostream>

#include <cuda_runtime.h>

using T = double;

size_t num_times = 100;

__global__ void k(T *d, T *d2, int len, int lps){

for (int l = 0; l < lps; l++)

for (int i = threadIdx.x+blockDim.x*blockIdx.x; i<len; i+=gridDim.x*blockDim.x)

d[i] = __ldg(d2+i);

}

int main(int argc, char** argv) {

cudaDeviceProp prop;

cudaGetDeviceProperties(&prop, 0);

const int nTPSM = prop.maxThreadsPerMultiProcessor; // 2048

const int nSM = prop.multiProcessorCount * 16; // multiplying 16 to make all SMs busy enough

const unsigned l2size = prop.l2CacheSize; // 40 MB

unsigned int array_size = 0.45*l2size/(sizeof(T)); // This makes sure that it is 20 MB

std::cout << "Array size: " << array_size*sizeof(T)*1.0E-6 << " MB" << " (=" << array_size*sizeof(T)*1.0E-9 << " GB)" << std::endl;

const size_t lws = 1024;

const size_t gws = ((nSM + lws - 1) / lws) * lws; // global work size

T *d, *d2;

cudaMalloc(&d, array_size * sizeof(T));

cudaMalloc(&d2, array_size * sizeof(T));

k<<<gws, lws>>>(d, d2, array_size,1); // warm-up

cudaEvent_t start, stop;

cudaEventCreate(&start);

cudaEventCreate(&stop);

cudaEventRecord(start);

k<<<gws, lws>>>(d, d2, array_size,num_times);

cudaEventRecord(stop);

cudaDeviceSynchronize();

float milliseconds = 0;

cudaEventElapsedTime(&milliseconds, start, stop);

double dt = milliseconds / num_times;

double blockDV = array_size * sizeof(T); // Data processed per thread block

double bw = (blockDV * gws) / (dt * 1.0e9); // GB/s

std::cout << "bw: " << (array_size * 2 * sizeof(T)) / (dt * 1e3 * 1e6) << " TB/s" << std::endl;

std::cout << "bw: " << (bw) << " GB/s" << std::endl;

cudaFree(d);

cudaFree(d2);

return 0;

}

Cross checked with this repository and their results :

data set exec time spread Eff. bw

512 kB 1536 kB 25ms 0.1% 4149.3 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 2048 kB 24ms 0.2% 4291.3 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 2560 kB 25ms 0.2% 4206.6 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 3072 kB 25ms 0.6% 4231.9 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 3584 kB 25ms 0.6% 4231.2 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 4096 kB 25ms 0.5% 4262.9 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 4608 kB 25ms 0.1% 4215.9 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 5120 kB 25ms 0.2% 4194.6 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 5632 kB 25ms 0.2% 4227.1 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 6144 kB 25ms 0.2% 4221.7 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 6656 kB 25ms 0.1% 4180.4 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 7168 kB 25ms 0.2% 4169.6 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 7680 kB 25ms 0.1% 4182.6 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 8192 kB 25ms 0.1% 4223.5 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 8704 kB 25ms 0.1% 4194.5 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 9216 kB 25ms 0.3% 4187.5 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 9728 kB 25ms 0.3% 4158.2 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 10240 kB 26ms 0.3% 4101.6 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 11264 kB 25ms 0.3% 4213.9 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 12288 kB 25ms 0.2% 4226.9 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 13312 kB 25ms 0.2% 4213.0 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 14336 kB 25ms 0.3% 4213.0 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 15360 kB 25ms 0.3% 4201.1 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 16896 kB 25ms 0.2% 4235.0 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 18432 kB 25ms 0.4% 4231.5 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 19968 kB 25ms 0.3% 4134.1 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 21504 kB 25ms 0.1% 4181.9 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 23552 kB 25ms 0.1% 4218.8 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 25600 kB 25ms 0.2% 4175.3 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 28160 kB 26ms 7.7% 4046.4 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 30720 kB 34ms 1.2% 3079.8 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 33792 kB 38ms 1.4% 2790.0 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 36864 kB 45ms 0.8% 2334.4 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 40448 kB 55ms 0.8% 1905.5 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 44032 kB 62ms 1.2% 1696.8 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 48128 kB 67ms 1.0% 1573.6 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 52736 kB 69ms 0.3% 1521.7 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 57856 kB 69ms 0.2% 1512.7 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 63488 kB 70ms 0.3% 1508.3 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 69632 kB 70ms 0.2% 1506.3 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 76288 kB 70ms 0.2% 1501.8 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 83456 kB 70ms 0.3% 1504.6 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 91648 kB 70ms 0.3% 1502.4 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 100352 kB 70ms 0.3% 1503.1 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 110080 kB 70ms 0.3% 1501.6 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 120832 kB 70ms 0.3% 1500.2 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 132608 kB 70ms 0.3% 1499.1 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 145408 kB 70ms 0.4% 1496.0 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 159744 kB 70ms 0.3% 1496.4 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 175616 kB 70ms 0.3% 1495.2 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 193024 kB 70ms 0.3% 1492.4 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 211968 kB 70ms 0.3% 1489.8 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 232960 kB 70ms 0.3% 1488.9 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 256000 kB 70ms 0.4% 1487.7 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 281600 kB 71ms 0.4% 1483.3 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 309760 kB 71ms 0.4% 1481.5 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 340480 kB 71ms 0.4% 1480.8 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 374272 kB 70ms 1.6% 1491.9 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 411648 kB 70ms 2.6% 1504.1 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 452608 kB 71ms 0.5% 1467.6 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 497664 kB 72ms 0.5% 1463.6 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 547328 kB 72ms 0.6% 1459.5 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 601600 kB 72ms 0.6% 1454.0 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 661504 kB 72ms 0.6% 1448.7 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 727552 kB 73ms 0.7% 1442.3 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 800256 kB 73ms 0.9% 1438.6 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 880128 kB 73ms 0.9% 1430.8 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 967680 kB 74ms 0.8% 1421.6 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 1064448 kB 74ms 0.8% 1411.6 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 1170432 kB 75ms 0.7% 1403.2 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 1287168 kB 75ms 0.0% 1394.0 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 1415680 kB 75ms 0.1% 1394.0 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 1556992 kB 75ms 0.0% 1393.6 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 1712640 kB 75ms 0.1% 1393.4 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 1883648 kB 75ms 0.1% 1393.7 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 2071552 kB 75ms 0.2% 1396.0 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 2278400 kB 75ms 0.3% 1398.1 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 2506240 kB 75ms 0.1% 1394.3 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 2756608 kB 75ms 0.1% 1394.0 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

512 kB 3032064 kB 75ms 0.1% 1395.9 GB/s 0 GB/s 0 GB/s 0 GB/s 0 GB/s

Which is still a bit off and not perfect but it shows the drop at around 40 MB which makes sense.

What could be the solution here ?