Hello,

I am allocating a few arrays with cudaMalloc. In device code, some of the arrays show only “???” instead of a pointer value, and the Nsight debug session fails with an access violation on load (it cannot read from that pointer).

    __device__ unsigned int* D_Inputs;
    __device__ unsigned int* D_Offsets;
    __device__ bool* D_NodeTypes;
    __device__ short* D_ItemsCount;
    __device__ unsigned int* D_NodeIndices;
    __device__ bool* D_ResultVector;
    __device__ int* D_ItemOrders;

    void Init()
    {
        unsigned int freeMemBefore = GetFreeMemory(0);
        unsigned int mMaxBlocks = 2000;

        cudaMalloc((void**)&D_Offsets, sizeof(unsigned int) * mMaxBlocks);
        cudaMalloc((void**)&D_NodeTypes, sizeof(bool) * mMaxBlocks);
        cudaMalloc((void**)&D_ItemsCount, sizeof(short) * mMaxBlocks);
        cudaMalloc((void**)&D_NodeIndices, sizeof(unsigned int) * mMaxBlocks);
        cudaMalloc((void**)&D_ResultVector, sizeof(bool) * mMaxBlocks);
        cudaMalloc((void**)&D_ItemOrders, sizeof(unsigned int) * mMaxBlocks);
        cudaMalloc((void**)&D_Inputs, 2000 * mMaxBlocks);

        unsigned int freeMemAfter = GetFreeMemory(0);
    }

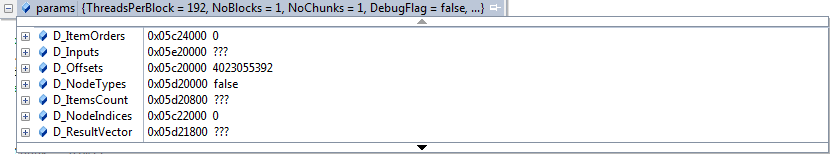

Altogether this is not much memory, and I have 800 MB of free memory. Interestingly, D_ItemsCount shows “???”, but D_NodeIndices is correct even though it is allocated later.
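To put a number on how little memory is being requested, the sizes passed to cudaMalloc can be summed on the host. This is only a back-of-the-envelope sketch: the helper name total_requested_bytes is made up for illustration and is not part of my code, and the figure quoted below assumes the usual type sizes (4-byte unsigned int, 2-byte short, 1-byte bool).

```cpp
#include <cstddef>

// Hypothetical helper (not in the original code): sums the byte counts
// passed to the seven cudaMalloc calls in Init() for a given mMaxBlocks.
constexpr std::size_t total_requested_bytes(std::size_t maxBlocks)
{
    return sizeof(unsigned int) * maxBlocks   // D_Offsets
         + sizeof(bool)         * maxBlocks   // D_NodeTypes
         + sizeof(short)        * maxBlocks   // D_ItemsCount
         + sizeof(unsigned int) * maxBlocks   // D_NodeIndices
         + sizeof(bool)         * maxBlocks   // D_ResultVector
         + sizeof(unsigned int) * maxBlocks   // D_ItemOrders (declared int*, sized as unsigned int)
         + 2000                 * maxBlocks;  // D_Inputs
}
```

With mMaxBlocks = 2000 and the assumed type sizes, this comes to 4,032,000 bytes, roughly 4 MB, which is tiny compared with the 800 MB reported as free.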

The screenshot above shows the arrays as seen in the Nsight kernel debugger.

Nsight output:

    Memory Checker detected 192 access violations.
    error = access violation on load (global memory)
    gridid = 12
    blockIdx = {0,0,0}
    threadIdx = {0,0,0}
    address = 0x05d20800
    accessSize = 2

It somehow depends on the amount of memory being allocated. If mMaxBlocks is set to 10, all arrays are allocated correctly.

I tested this on a GTX 550, which is the primary display adapter, and also on a GTX 690, on both of its GPUs, with a similar problem.

Can somebody please tell me what I am doing wrong?