[11/01/2021-13:17:13] [V] [TRT] ModelImporter.cpp:125: StatefulPartitionedCall/functional_3/tf_op_layer_Tile_6/Tile_6 [Tile] inputs: [StatefulPartitionedCall/functional_3/tf_op_layer_ExpandDims/ExpandDims:0 → (-1, -1, -1, -1)], [const_fold_opt__444 → (4)],

[11/01/2021-13:17:13] [V] [TRT] ImporterContext.hpp:141: Registering layer: StatefulPartitionedCall/functional_3/tf_op_layer_Tile_6/Tile_6 for ONNX node: StatefulPartitionedCall/functional_3/tf_op_layer_Tile_6/Tile_6

[11/01/2021-13:17:13] [V] [TRT] ImporterContext.hpp:116: Registering tensor: tf_op_layer_Tile_6 for ONNX tensor: tf_op_layer_Tile_6

[11/01/2021-13:17:13] [V] [TRT] ModelImporter.cpp:179: StatefulPartitionedCall/functional_3/tf_op_layer_Tile_6/Tile_6 [Tile] outputs: [tf_op_layer_Tile_6 → (-1, -1, -1, -1)],

[11/01/2021-13:17:13] [V] [TRT] ModelImporter.cpp:103: Parsing node: [BatchedNMS_TRT]

[11/01/2021-13:17:13] [V] [TRT] ModelImporter.cpp:119: Searching for input: tf_op_layer_Tile_6

[11/01/2021-13:17:13] [V] [TRT] ModelImporter.cpp:119: Searching for input: tf_op_layer_strided_slice_73

[11/01/2021-13:17:13] [V] [TRT] ModelImporter.cpp:125: [BatchedNMS_TRT] inputs: [tf_op_layer_Tile_6 → (-1, -1, -1, -1)], [tf_op_layer_strided_slice_73 → (-1, -1, -1)],

[11/01/2021-13:17:13] [I] [TRT] ModelImporter.cpp:135: No importer registered for op: BatchedNMS_TRT. Attempting to import as plugin.

[11/01/2021-13:17:13] [I] [TRT] builtin_op_importers.cpp:3659: Searching for plugin: BatchedNMS_TRT, plugin_version: 1, plugin_namespace:

[11/01/2021-13:17:13] [I] [TRT] builtin_op_importers.cpp:3676: Successfully created plugin: BatchedNMS_TRT

[11/01/2021-13:17:13] [V] [TRT] ImporterContext.hpp:141: Registering layer: (Unnamed Layer* 1307) [PluginV2Ext] for ONNX node:

[11/01/2021-13:17:13] [E] [TRT] (Unnamed Layer* 1307) [PluginV2Ext]: PluginV2Layer must be V2DynamicExt when there are runtime input dimensions.

[11/01/2021-13:17:13] [V] [TRT] ImporterContext.hpp:116: Registering tensor: num_detections_1 for ONNX tensor: num_detections

[11/01/2021-13:17:13] [E] [TRT] (Unnamed Layer* 1307) [PluginV2Ext]: PluginV2Layer must be V2DynamicExt when there are runtime input dimensions.

[11/01/2021-13:17:13] [V] [TRT] ImporterContext.hpp:116: Registering tensor: nmsed_boxes_1 for ONNX tensor: nmsed_boxes

[11/01/2021-13:17:13] [E] [TRT] (Unnamed Layer* 1307) [PluginV2Ext]: PluginV2Layer must be V2DynamicExt when there are runtime input dimensions.

[11/01/2021-13:17:13] [V] [TRT] ImporterContext.hpp:116: Registering tensor: nmsed_scores_1 for ONNX tensor: nmsed_scores

[11/01/2021-13:17:13] [E] [TRT] (Unnamed Layer* 1307) [PluginV2Ext]: PluginV2Layer must be V2DynamicExt when there are runtime input dimensions.

[11/01/2021-13:17:13] [V] [TRT] ImporterContext.hpp:116: Registering tensor: nmsed_classes_1 for ONNX tensor: nmsed_classes

[11/01/2021-13:17:13] [V] [TRT] ModelImporter.cpp:179: [BatchedNMS_TRT] outputs: [num_detections → ()], [nmsed_boxes → ()], [nmsed_scores → ()], [nmsed_classes → ()],

[11/01/2021-13:17:13] [V] [TRT] ModelImporter.cpp:507: Marking num_detections_1 as output: num_detections

[11/01/2021-13:17:13] [V] [TRT] ModelImporter.cpp:507: Marking nmsed_boxes_1 as output: nmsed_boxes

[11/01/2021-13:17:13] [V] [TRT] ModelImporter.cpp:507: Marking nmsed_scores_1 as output: nmsed_scores

[11/01/2021-13:17:13] [V] [TRT] ModelImporter.cpp:507: Marking nmsed_classes_1 as output: nmsed_classes

----- Parsing of ONNX model www3_fix.onnx.nms_test.onnx is Done ----

[11/01/2021-13:17:13] [E] [TRT] (Unnamed Layer* 1307) [PluginV2Ext]: PluginV2Layer must be V2DynamicExt when there are runtime input dimensions.

[11/01/2021-13:17:13] [E] [TRT] (Unnamed Layer* 1307) [PluginV2Ext]: PluginV2Layer must be V2DynamicExt when there are runtime input dimensions.

[11/01/2021-13:17:13] [E] [TRT] (Unnamed Layer* 1307) [PluginV2Ext]: PluginV2Layer must be V2DynamicExt when there are runtime input dimensions.

[11/01/2021-13:17:13] [E] [TRT] (Unnamed Layer* 1307) [PluginV2Ext]: PluginV2Layer must be V2DynamicExt when there are runtime input dimensions.

[11/01/2021-13:17:13] [E] [TRT] (Unnamed Layer* 1307) [PluginV2Ext]: PluginV2Layer must be V2DynamicExt when there are runtime input dimensions.

[11/01/2021-13:17:13] [E] [TRT] Layer (Unnamed Layer* 1307) [PluginV2Ext] failed validation

[11/01/2021-13:17:13] [E] [TRT] Network validation failed.

[11/01/2021-13:17:13] [E] Engine creation failed

[11/01/2021-13:17:13] [E] Engine set up failed

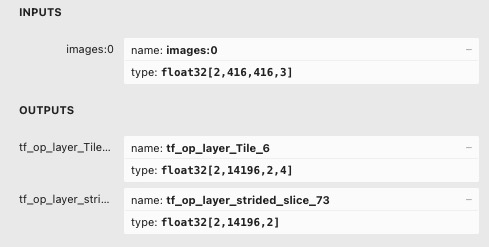

&&&& FAILED TensorRT.trtexec # /usr/src/tensorrt/bin/trtexec --onnx=www3_fix.onnx.nms_test.onnx --fp16 --saveEngine=add_BatchedNMS_TRT.engine --shapes=images:0:2x416x416x3 --verbose --explicitBatch
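
The same `PluginV2Layer must be V2DynamicExt` line repeats many times in the log above, which makes the remaining failures easy to miss. A minimal sketch for collapsing the `[E]` lines into distinct messages with counts, assuming the `[timestamp] [level] [TRT] message` layout seen in this log. The names `LOG_LINE`, `summarize_errors`, and `SAMPLE` are made up for illustration and are not part of TensorRT or trtexec.

```python
import re
from collections import Counter

# Matches lines such as "[11/01/2021-13:17:13] [E] [TRT] message".
# The "[TRT]" tag is optional because some lines in the log omit it.
LOG_LINE = re.compile(
    r"^\[(?P<stamp>[^\]]+)\]\s+\[(?P<level>[VIWE])\]\s+(?:\[TRT\]\s+)?(?P<msg>.*)$"
)


def summarize_errors(log_text):
    """Return a Counter mapping each distinct [E] message to its count."""
    counts = Counter()
    for line in log_text.splitlines():
        match = LOG_LINE.match(line.strip())
        if match and match.group("level") == "E":
            counts[match.group("msg").strip()] += 1
    return counts


# A few lines copied from the log above, used only as sample input.
SAMPLE = """\
[11/01/2021-13:17:13] [V] [TRT] ModelImporter.cpp:103: Parsing node: [BatchedNMS_TRT]
[11/01/2021-13:17:13] [E] [TRT] (Unnamed Layer* 1307) [PluginV2Ext]: PluginV2Layer must be V2DynamicExt when there are runtime input dimensions.
[11/01/2021-13:17:13] [E] [TRT] (Unnamed Layer* 1307) [PluginV2Ext]: PluginV2Layer must be V2DynamicExt when there are runtime input dimensions.
[11/01/2021-13:17:13] [E] [TRT] Network validation failed.
[11/01/2021-13:17:13] [E] Engine creation failed
"""

if __name__ == "__main__":
    # Print the most frequent error first.
    for msg, n in summarize_errors(SAMPLE).most_common():
        print(f"{n}x {msg}")
```

To run it on a full log, read the file saved from `trtexec --verbose` and pass its contents to `summarize_errors` instead of `SAMPLE`.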