Description

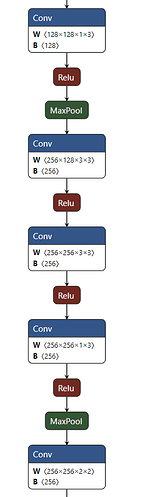

I have a simple convolutional network (see the image above).

Its input shape is (1, 32, -1, 1), which means the input image width is dynamic.

I set the following dynamic shape profile:

min shape = `'{"image_placeholder": [1,32,16,1]}'`

opt shape = `'{"image_placeholder": [1,32,128,1]}'`

max shape = `'{"image_placeholder": [1,32,2048,1]}'`
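As a sanity check, both test widths (128 and 170) fall inside this profile, so the failure does not look like an out-of-range input. A minimal plain-Python sketch that parses the three profile strings as JSON and checks a shape against the min/max bounds (the helper `shape_in_profile` is my own, hypothetical, and not a TensorRT API):

```python
import json

# Profile strings as set above: JSON mapping input name -> shape.
MIN_SHAPE = '{"image_placeholder": [1,32,16,1]}'
OPT_SHAPE = '{"image_placeholder": [1,32,128,1]}'
MAX_SHAPE = '{"image_placeholder": [1,32,2048,1]}'


def shape_in_profile(shape, name="image_placeholder"):
    """Hypothetical helper: True if rank matches and every dim is within [min, max]."""
    lo = json.loads(MIN_SHAPE)[name]
    hi = json.loads(MAX_SHAPE)[name]
    if len(shape) != len(lo):
        return False
    return all(a <= d <= b for a, d, b in zip(lo, shape, hi))


# The opt shape must itself lie inside [min, max].
print("opt", json.loads(OPT_SHAPE)["image_placeholder"],
      shape_in_profile(json.loads(OPT_SHAPE)["image_placeholder"]))

# The shape that works and the shape that fails are both in range.
for shape in ([1, 32, 128, 1], [1, 32, 170, 1]):
    print(shape, shape_in_profile(shape))
```

Every line prints `True`, so width 170 is a legal input for this profile and the cuDNN error happens despite a valid shape.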

Inference works for an image of shape [1,32,128,1], but an image of shape [1,32,170,1] fails with: `[convolutionRunner.cpp::executeConv::458] Error Code 1: Cudnn (CUDNN_STATUS_BAD_PARAM)`.

Moreover, the error only happens in FP32 mode; FP16 mode works fine.

I get this error with TensorRT 8; with TensorRT 7 I did not get it.

Is there a fix or workaround for this? Thanks.

Environment

TensorRT Version: 8.0

GPU Type: V100

CUDA Version: 11.3

CUDNN Version: 8.2

Operating System + Version: Ubuntu 18.04

Python Version (if applicable): 3.8
