Description

At present, when I am accelerating my own model with tensorrt, I have encountered a problem, that is, when I use different data types to feed the network for inference, the inference speed and inference results of the network will be different, as follows:

the code as follows:

The impact of different data types on inference time:

different predictions:

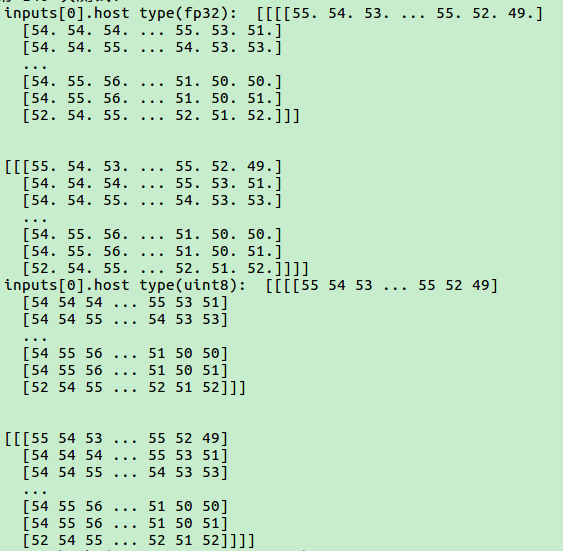

the float32 (input data type)predict results:

the uint8(input data type) predict results:

In addition, I also compared the results of uint8 and float32 input data, as follows:

I found that the values are the same except for the data type!

Currently, my two prediction results use the same tensorrt-accelerated model based on the fp16 acceleration method.

Looking forward to your help, thanks a lot!

Environment

TensorRT Version: 8.2

GPU Type: 3060ti

Nvidia Driver Version: 510

CUDA Version: 11.3

CUDNN Version: 8.2

Operating System + Version: ubunt20.04

Python Version (if applicable): 3.7.9

TensorFlow Version (if applicable): no use

PyTorch Version (if applicable): 1.10.1+cu113

Baremetal or Container (if container which image + tag):