Hi everyone,

I’m working on optimizing a multi-GPU training task using FSDP and have encountered a significant performance issue related to compute-communication overlap. I’ve narrowed it down to a classic “tail effect” problem and would appreciate any insights or advanced optimization strategies.

The Problem: Overlap-Induced Tail Effect

I’ve created a simple script to test the impact of overlapping a gemm kernel with an allgather kernel, which mimics the behavior of FSDP’s prefetching mechanism.

My findings are as follows:

- When the gemm kernel runs standalone, it achieves high MFU (~80%).
- When it runs concurrently with an allgather kernel, the gemm kernel’s execution time nearly doubles, and its MFU drops to ~47%.
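For reference, the MFU of a single GEMM is its achieved FLOP/s divided by the GPU's peak FLOP/s. Because the GEMM's FLOP count is fixed, MFU is inversely proportional to its runtime, so a drop from ~80% to ~47% corresponds to roughly a 1.7x slowdown, which is consistent with "nearly doubles". This is a minimal sketch: the matrix shape and the ~989 TFLOP/s dense BF16 peak for an H100 SXM are assumptions for illustration, not values from my test.

```python
# Minimal MFU sketch for a single dense GEMM.
# ASSUMPTION: PEAK_FLOPS is an illustrative dense BF16 peak for an H100 SXM
# (~989 TFLOP/s); replace it with the datasheet value for your GPU and dtype.
PEAK_FLOPS = 989e12


def gemm_flops(m: int, n: int, k: int) -> int:
    """FLOPs of C[m, n] = A[m, k] @ B[k, n]: m*n*k multiply-adds, 2 FLOPs each."""
    return 2 * m * n * k


def mfu(m: int, n: int, k: int, seconds: float, peak_flops: float = PEAK_FLOPS) -> float:
    """Model FLOPs utilization: achieved FLOP/s divided by peak FLOP/s."""
    return gemm_flops(m, n, k) / seconds / peak_flops


def slowdown_from_mfu(mfu_standalone: float, mfu_overlapped: float) -> float:
    """For a fixed GEMM, runtime is proportional to 1 / MFU."""
    return mfu_standalone / mfu_overlapped


if __name__ == "__main__":
    m = n = k = 8192  # hypothetical shape, not taken from the original test
    t = gemm_flops(m, n, k) / (0.80 * PEAK_FLOPS)  # runtime that would give 80% MFU
    print(f"runtime at 80% MFU: {t * 1e3:.3f} ms")
    print(f"implied slowdown 80% -> 47%: {slowdown_from_mfu(0.80, 0.47):.2f}x")
```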

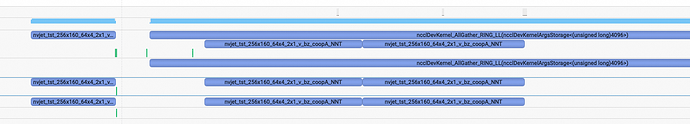

Root Cause Analysis with Nsight Systems (nsys)

The nsys profile provides a clear picture of what’s happening on my H100 GPU, which has 132 SMs:

- The gemm kernel is perfectly sized, launching exactly 132 blocks. Ideally, it should complete in a single wave, fully utilizing all SMs.
- However, the concurrent ncclAllGather kernel launches 24 blocks, which already occupy some of the SMs.
- As a result, the CUDA scheduler can dispatch fewer than 132 blocks of the gemm kernel in the first wave.
- The remaining blocks of the gemm kernel are forced into a second wave.

This creates a severe tail effect. During the second wave, the GPU is massively underutilized: only a few SMs are busy with the gemm tail and another 24 SMs are busy with the allgather kernel, leaving most SMs completely idle. This waste of resources is the primary cause of the performance degradation.
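The dispatch arithmetic above can be sketched as a simple wave model. Under the worst-case assumption that each of the 24 ncclAllGather blocks holds one SM exclusively for the whole GEMM, 108 SMs remain, so the 132 gemm blocks need two waves, the second wave holds 24 blocks, and 84 SMs sit idle during it. Since each wave costs roughly one block's runtime, two waves line up with the near-2x slowdown. The function names are hypothetical, and real residency depends on each kernel's register and shared-memory usage, so the profile may show a different split.

```python
import math

# Simplified wave model for a GEMM whose launch config cannot be changed.
# ASSUMPTIONS (not measured): busy_sms SMs are fully held by another kernel
# (e.g. one NCCL block per SM) for the GEMM's entire runtime, no GEMM block
# can co-reside on those SMs, and all GEMM blocks take the same time.


def free_slots(num_sms: int, busy_sms: int, blocks_per_sm: int = 1) -> int:
    """GEMM blocks that can be resident at once on the SMs left free."""
    slots = (num_sms - busy_sms) * blocks_per_sm
    if slots <= 0:
        raise ValueError("no SM slots left for the GEMM")
    return slots


def num_waves(num_blocks: int, num_sms: int, busy_sms: int = 0, blocks_per_sm: int = 1) -> int:
    """Waves needed to dispatch every GEMM block."""
    return math.ceil(num_blocks / free_slots(num_sms, busy_sms, blocks_per_sm))


def last_wave_blocks(num_blocks: int, num_sms: int, busy_sms: int = 0, blocks_per_sm: int = 1) -> int:
    """GEMM blocks left over for the final (tail) wave."""
    slots = free_slots(num_sms, busy_sms, blocks_per_sm)
    full_waves = num_waves(num_blocks, num_sms, busy_sms, blocks_per_sm) - 1
    return num_blocks - full_waves * slots


def idle_sms_in_last_wave(num_blocks: int, num_sms: int, busy_sms: int = 0, blocks_per_sm: int = 1) -> int:
    """SMs doing nothing during the tail wave (neither GEMM nor the other kernel)."""
    tail = last_wave_blocks(num_blocks, num_sms, busy_sms, blocks_per_sm)
    used = math.ceil(tail / blocks_per_sm)
    return num_sms - busy_sms - used


if __name__ == "__main__":
    for busy in (0, 24):  # standalone vs. concurrent with a 24-block allgather
        print(
            f"busy={busy:2d}: waves={num_waves(132, 132, busy)}, "
            f"last wave={last_wave_blocks(132, 132, busy)} blocks, "
            f"idle SMs in last wave={idle_sms_in_last_wave(132, 132, busy)}"
        )
```

Varying `busy_sms` in this model shows how fragile a one-block-per-SM, exactly-one-wave grid is: any nonzero value up to 66 forces a second wave.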

What I’m Looking For

The core challenge is that the gemm kernel I’m using is a closed-source, pre-compiled kernel from NVIDIA’s libraries, so I cannot modify it or change its launch configuration.

I’m looking for advanced strategies to mitigate this tail effect. The ideal solution would be a way to “fill the gap” in the second wave by co-scheduling blocks from different kernels.

Are there any techniques, perhaps involving stream priorities or low-level NCCL configuration, that could help? The goal is to keep the GPU fully loaded during that second wave or, even better, to avoid creating a second wave for the gemm kernel at all.

Here are some relevant resources I’ve been looking at:

- CUDA Pro Tip: Minimize the Tail Effect (NVIDIA Technical Blog)
- How to optimize tail effect? (NVIDIA Developer Forums)

Any advice on how to tackle this “pathological overlap” scenario would be greatly appreciated.