It seems that it is not possible to independently run kernels on a multi-GPU system in parallel from a single process, with multiple threads. When using multiple processes to do so, it works fine. Note that this issue manifests at random times. Sometimes I need to run the program a couple times before it starts. When it does start, the issue does not go away (even though the amount to which it affects runtime varies).

I originally reported the issue here: TensorRT unnecessary synchronization in multi-GPU system. But it seems it is not TensorRT-specific.

Environment

TensorRT Version: 8.5.2.2

GPU Type: 10x RTX A4000

Nvidia Driver Version: 525.60

CUDA Version: 11.8

CUDNN Version: 8.7.0

Operating System + Version: Ubuntu 22.04.1 LTS

Baremetal or Container (if container which image + tag): Baremetal

System

CPU:

Info: 2x 20-core model: Intel Xeon Silver 4316 bits: 64 type: MT MCP SMP cache:

L2: 2x 25 MiB (50 MiB)

Speed (MHz): avg: 916 min/max: 800/3400 cores: 1: 801 2: 801 3: 800 4: 800 5: 800 6: 800

7: 801 8: 801 9: 801 10: 801 11: 800 12: 801 13: 800 14: 801 15: 801 16: 800 17: 801 18: 801

19: 800 20: 801 21: 801 22: 800 23: 801 24: 801 25: 800 26: 801 27: 801 28: 801 29: 2302

30: 801 31: 801 32: 1890 33: 801 34: 801 35: 1085 36: 1403 37: 801 38: 1413 39: 1243 40: 801

41: 800 42: 801 43: 801 44: 801 45: 801 46: 801 47: 801 48: 801 49: 801 50: 1648 51: 800

52: 800 53: 800 54: 2301 55: 800 56: 800 57: 801 58: 801 59: 801 60: 801 61: 801 62: 801

63: 801 64: 802 65: 800 66: 801 67: 801 68: 801 69: 1416 70: 801 71: 801 72: 1758 73: 800

74: 800 75: 846 76: 801 77: 801 78: 1440 79: 897 80: 801

Graphics:

Device-1: ASPEED Graphics Family driver: ast v: kernel

Device-2: NVIDIA GA104GL [RTX A4000] driver: nvidia v: 525.60.11

Device-3: NVIDIA GA104GL [RTX A4000] driver: nvidia v: 525.60.11

Device-4: NVIDIA GA104GL [RTX A4000] driver: nvidia v: 525.60.11

Device-5: NVIDIA GA104GL [RTX A4000] driver: nvidia v: 525.60.11

Device-6: NVIDIA GA104GL [RTX A4000] driver: nvidia v: 525.60.11

Device-7: NVIDIA GA104GL [RTX A4000] driver: nvidia v: 525.60.11

Device-8: NVIDIA GA104GL [RTX A4000] driver: nvidia v: 525.60.11

Device-9: NVIDIA GA104GL [RTX A4000] driver: nvidia v: 525.60.11

Device-10: NVIDIA GA104GL [RTX A4000] driver: nvidia v: 525.60.11

Device-11: NVIDIA GA104GL [RTX A4000] driver: nvidia v: 525.60.11

Display: server: No display server data found. Headless machine? tty: 238x51

MRE

I modified the matrixMul sample in the CUDA samples. See code attached. The modified version runs one worker (in a thread) per GPU and then concurrently executes kernels from them.

Single-GPU

This runs a single worker thread, on a single GPU (to establish baseline):

CUDA_VISIBLE_DEVICES=0 ./matrixMul

[Matrix Multiply Using CUDA] - Starting...

GPU Device 0: "Ampere" with compute capability 8.6

MatrixA(320,320), MatrixB(640,320)

Computing result using CUDA Kernel...

done

LAUNCH!

Performance= 1445.88 GFlop/s, Time= 0.091 msec, Size= 131072000 Ops, WorkgroupSize= 1024 threads/block

Checking computed result for correctness: Result = PASS

NOTE: The CUDA Samples are not meant for performance measurements. Results may vary when GPU Boost is enabled.

DONE

Runtime around 0.091 msec

Multi-threaded, multi-GPU

This one runs one worker per GPU, running the kernel 10k iterations as fast as possible. Even though the GPUs should operate independently, there seems to be synchronization as the average runtime drops by about 4x:

./matrixMul

[Matrix Multiply Using CUDA] - Starting...

GPU Device 0: "Ampere" with compute capability 8.6

MatrixA(320,320), MatrixB(640,320)

Computing result using CUDA Kernel...

done

Computing result using CUDA Kernel...

done

Computing result using CUDA Kernel...

done

Computing result using CUDA Kernel...

done

Computing result using CUDA Kernel...

done

Computing result using CUDA Kernel...

done

Computing result using CUDA Kernel...

Computing result using CUDA Kernel...

done

done

Computing result using CUDA Kernel...

Computing result using CUDA Kernel...

done

done

LAUNCH!

Performance= 413.12 GFlop/s, Time= 0.317 msec, Size= 131072000 Ops, WorkgroupSize= 1024 threads/block

Checking computed result for correctness: Result = PASS

NOTE: The CUDA Samples are not meant for performance measurements. Results may vary when GPU Boost is enabled.

Performance= 408.03 GFlop/s, Time= 0.321 msec, Size= 131072000 Ops, WorkgroupSize= 1024 threads/block

Checking computed result for correctness: Result = PASS

NOTE: The CUDA Samples are not meant for performance measurements. Results may vary when GPU Boost is enabled.

Performance= 398.93 GFlop/s, Time= 0.329 msec, Size= 131072000 Ops, WorkgroupSize= 1024 threads/block

Checking computed result for correctness: Result = PASS

NOTE: The CUDA Samples are not meant for performance measurements. Results may vary when GPU Boost is enabled.

Performance= 391.88 GFlop/s, Time= 0.334 msec, Size= 131072000 Ops, WorkgroupSize= 1024 threads/block

Checking computed result for correctness: Result = PASS

NOTE: The CUDA Samples are not meant for performance measurements. Results may vary when GPU Boost is enabled.

Performance= 393.24 GFlop/s, Time= 0.333 msec, Size= 131072000 Ops, WorkgroupSize= 1024 threads/block

Checking computed result for correctness: Result = PASS

NOTE: The CUDA Samples are not meant for performance measurements. Results may vary when GPU Boost is enabled.

Performance= 389.40 GFlop/s, Time= 0.337 msec, Size= 131072000 Ops, WorkgroupSize= 1024 threads/block

Checking computed result for correctness: Performance= 392.55 GFlop/s, Time= 0.334 msec, Size= 131072000 Ops, WorkgroupSize= 1024 threads/block

Checking computed result for correctness: Result = PASS

NOTE: The CUDA Samples are not meant for performance measurements. Results may vary when GPU Boost is enabled.

Result = PASS

Performance= 390.90 GFlop/s, Time= 0.335 msec, Size= 131072000 Ops, WorkgroupSize= 1024 threads/block

Checking computed result for correctness:

NOTE: The CUDA Samples are not meant for performance measurements. Results may vary when GPU Boost is enabled.

Result = PASS

NOTE: The CUDA Samples are not meant for performance measurements. Results may vary when GPU Boost is enabled.

Performance= 388.16 GFlop/s, Time= 0.338 msec, Size= 131072000 Ops, WorkgroupSize= 1024 threads/block

Checking computed result for correctness: Result = PASS

NOTE: The CUDA Samples are not meant for performance measurements. Results may vary when GPU Boost is enabled.

Performance= 386.39 GFlop/s, Time= 0.339 msec, Size= 131072000 Ops, WorkgroupSize= 1024 threads/block

Checking computed result for correctness: Result = PASS

NOTE: The CUDA Samples are not meant for performance measurements. Results may vary when GPU Boost is enabled.

DONE

Runtime around 0.33 msec (4x slower)

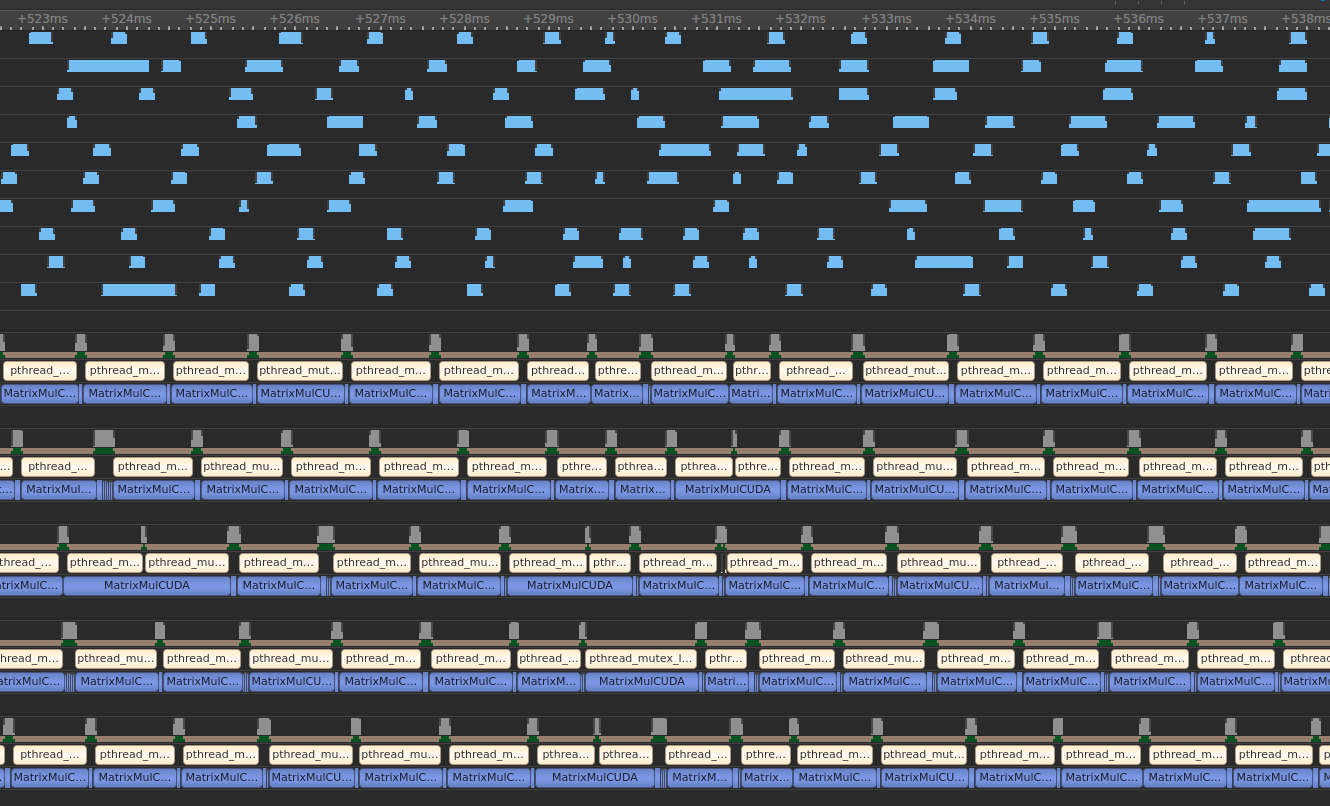

This is what the trace looks like:

Multi-process, multi-GPU

This sample does the same thing as above, except instead of running workers in threads, it starts one process per GPU and then runs the kernel 10k iterations (parallel over processes). This works:

CUDA_VISIBLE_DEVICES=0 CUDA_MODULE_LOADING=LAZY ./matrixMul & \

CUDA_VISIBLE_DEVICES=1 CUDA_MODULE_LOADING=LAZY ./matrixMul & \

CUDA_VISIBLE_DEVICES=2 CUDA_MODULE_LOADING=LAZY ./matrixMul & \

CUDA_VISIBLE_DEVICES=3 CUDA_MODULE_LOADING=LAZY ./matrixMul & \

CUDA_VISIBLE_DEVICES=4 CUDA_MODULE_LOADING=LAZY ./matrixMul & \

CUDA_VISIBLE_DEVICES=5 CUDA_MODULE_LOADING=LAZY ./matrixMul & \

CUDA_VISIBLE_DEVICES=6 CUDA_MODULE_LOADING=LAZY ./matrixMul & \

CUDA_VISIBLE_DEVICES=7 CUDA_MODULE_LOADING=LAZY ./matrixMul & \

CUDA_VISIBLE_DEVICES=8 CUDA_MODULE_LOADING=LAZY ./matrixMul & \

CUDA_VISIBLE_DEVICES=9 CUDA_MODULE_LOADING=LAZY ./matrixMul

[Matrix Multiply Using CUDA] - Starting...

[Matrix Multiply Using CUDA] - Starting...

[Matrix Multiply Using CUDA] - Starting...

[Matrix Multiply Using CUDA] - Starting...

[Matrix Multiply Using CUDA] - Starting...

[Matrix Multiply Using CUDA] - Starting...

[Matrix Multiply Using CUDA] - Starting...

[Matrix Multiply Using CUDA] - Starting...

[Matrix Multiply Using CUDA] - Starting...

[Matrix Multiply Using CUDA] - Starting...

GPU Device 0: "Ampere" with compute capability 8.6

MatrixA(320,320), MatrixB(640,320)

GPU Device 0: "Ampere" with compute capability 8.6

MatrixA(320,320), MatrixB(640,320)

GPU Device 0: "Ampere" with compute capability 8.6

MatrixA(320,320), MatrixB(640,320)

GPU Device 0: "Ampere" with compute capability 8.6

MatrixA(320,320), MatrixB(640,320)

GPU Device 0: "Ampere" with compute capability 8.6

MatrixA(320,320), MatrixB(640,320)

GPU Device 0: "Ampere" with compute capability 8.6

MatrixA(320,320), MatrixB(640,320)

GPU Device 0: "Ampere" with compute capability 8.6

MatrixA(320,320), MatrixB(640,320)

GPU Device 0: "Ampere" with compute capability 8.6

MatrixA(320,320), MatrixB(640,320)

GPU Device 0: "Ampere" with compute capability 8.6

MatrixA(320,320), MatrixB(640,320)

GPU Device 0: "Ampere" with compute capability 8.6

MatrixA(320,320), MatrixB(640,320)

Computing result using CUDA Kernel...

done

Computing result using CUDA Kernel...

done

Computing result using CUDA Kernel...

done

Computing result using CUDA Kernel...

done

Computing result using CUDA Kernel...

done

Computing result using CUDA Kernel...

done

Computing result using CUDA Kernel...

done

Computing result using CUDA Kernel...

Computing result using CUDA Kernel...

done

done

Computing result using CUDA Kernel...

done

LAUNCH!

LAUNCH!

LAUNCH!

LAUNCH!

LAUNCH!

LAUNCH!

LAUNCH!

LAUNCH!

LAUNCH!

LAUNCH!

Performance= 1447.73 GFlop/s, Time= 0.091 msec, Size= 131072000 Ops, WorkgroupSize= 1024 threads/block

Checking computed result for correctness: Result = PASS

NOTE: The CUDA Samples are not meant for performance measurements. Results may vary when GPU Boost is enabled.

DONE

Performance= 1432.36 GFlop/s, Time= 0.092 msec, Size= 131072000 Ops, WorkgroupSize= 1024 threads/block

Checking computed result for correctness: Result = PASS

NOTE: The CUDA Samples are not meant for performance measurements. Results may vary when GPU Boost is enabled.

DONE

Performance= 1450.05 GFlop/s, Time= 0.090 msec, Size= 131072000 Ops, WorkgroupSize= 1024 threads/block

Checking computed result for correctness: Result = PASS

NOTE: The CUDA Samples are not meant for performance measurements. Results may vary when GPU Boost is enabled.

DONE

Performance= 1469.87 GFlop/s, Time= 0.089 msec, Size= 131072000 Ops, WorkgroupSize= 1024 threads/block

Checking computed result for correctness: Result = PASS

NOTE: The CUDA Samples are not meant for performance measurements. Results may vary when GPU Boost is enabled.

DONE

Performance= 1456.77 GFlop/s, Time= 0.090 msec, Size= 131072000 Ops, WorkgroupSize= 1024 threads/block

Checking computed result for correctness: Result = PASS

NOTE: The CUDA Samples are not meant for performance measurements. Results may vary when GPU Boost is enabled.

DONE

Performance= 1462.62 GFlop/s, Time= 0.090 msec, Size= 131072000 Ops, WorkgroupSize= 1024 threads/block

Checking computed result for correctness: Result = PASS

NOTE: The CUDA Samples are not meant for performance measurements. Results may vary when GPU Boost is enabled.

DONE

Performance= 1426.73 GFlop/s, Time= 0.092 msec, Size= 131072000 Ops, WorkgroupSize= 1024 threads/block

Checking computed result for correctness: Result = PASS

NOTE: The CUDA Samples are not meant for performance measurements. Results may vary when GPU Boost is enabled.

DONE

Performance= 1436.94 GFlop/s, Time= 0.091 msec, Size= 131072000 Ops, WorkgroupSize= 1024 threads/block

Performance= 1427.04 GFlop/s, Time= 0.092 msec, Size= 131072000 Ops, WorkgroupSize= 1024 threads/block

Checking computed result for correctness: Result = PASS

Checking computed result for correctness: Result = PASS

NOTE: The CUDA Samples are not meant for performance measurements. Results may vary when GPU Boost is enabled.

NOTE: The CUDA Samples are not meant for performance measurements. Results may vary when GPU Boost is enabled.

DONE

DONE

Performance= 1443.78 GFlop/s, Time= 0.091 msec, Size= 131072000 Ops, WorkgroupSize= 1024 threads/block

Checking computed result for correctness: Result = PASS

NOTE: The CUDA Samples are not meant for performance measurements. Results may vary when GPU Boost is enabled.

DONE

Runtime around 0.09 msec (same as sequential, so fastest possible)

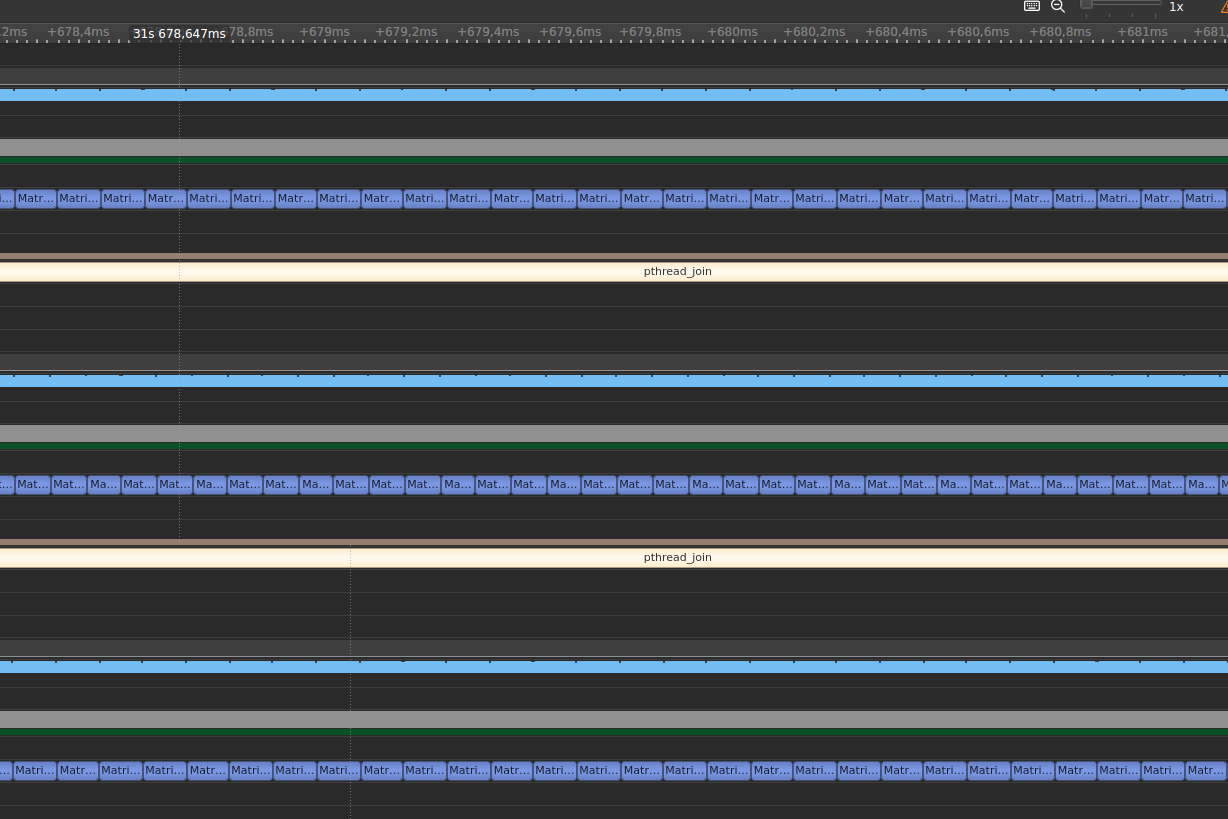

The trace:

Resources

- matrixMul.cu (modified version) (12.2 KB)