Hi, I have been learning OptiX in the hope of using it in a research project I am part of. I have been going through the SIGGRAPH OptiX 7 Course, trying to understand the pipeline layout. Keep in mind that I don’t have much experience with C++, and even less with CUDA, so some of these questions may be less about OptiX itself; please bear with me as I sort through everything.

First, a little about what I want to do. I want to load an elevation map (as a mesh) and render both color and depth values from it. Eventually I want to render several sparse images from different viewpoints rather than a single dense image from one viewpoint (which is working now).

I worked through example 7 of the SIGGRAPH course, which explains how to import a mesh from a file and render it. Then I started to diverge a little and began working on depth rendering. For this I consulted the OptiX SDK example “RayCasting”. In the RayCasting example, the depth is retrieved with the function optixGetRayTmax() and stored in a const unsigned int, as seen here:

```cpp
extern "C" __global__ void __closesthit__buffer_hit()
{
    const unsigned int t = optixGetRayTmax();

    whitted::HitGroupData* rt_data = (whitted::HitGroupData*)optixGetSbtDataPointer();
    LocalGeometry geom = getLocalGeometry( rt_data->geometry_data );

    // Set the hit data
    optixSetPayload_0( __float_as_uint( t ) );
    optixSetPayload_1( __float_as_uint( geom.N.x ) );
    optixSetPayload_2( __float_as_uint( geom.N.y ) );
    optixSetPayload_3( __float_as_uint( geom.N.z ) );
}
```
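To check what actually happens to `t` in that snippet, here is a small host-side C++ sketch. The CUDA intrinsics `__float_as_uint`/`__uint_as_float` are emulated with `std::memcpy`, and the helper names (`floatAsUint`, `uintAsFloat`, `payloadRoundTripFloat`, `payloadRoundTripUint`) are my own, hypothetical ones. As far as I can tell, the payload slot carries the 32-bit pattern unchanged, so the fraction is lost only when the float returned by `optixGetRayTmax()` is assigned to an unsigned int.

```cpp
#include <cstdint>
#include <cstring>

// Host-side stand-ins for the CUDA intrinsics __float_as_uint / __uint_as_float.
// Both copy the 32-bit pattern without changing any bits.
inline std::uint32_t floatAsUint(float f) {
    std::uint32_t u;
    std::memcpy(&u, &f, sizeof u);
    return u;
}

inline float uintAsFloat(std::uint32_t u) {
    float f;
    std::memcpy(&f, &u, sizeof f);
    return f;
}

// Round trip through one payload slot when t is kept as a float,
// like: const float t = optixGetRayTmax();
inline float payloadRoundTripFloat(float tmax) {
    const float t = tmax;
    return uintAsFloat(floatAsUint(t));   // set payload, then read it back
}

// Round trip when t is first stored in an unsigned int, like the sample.
// The float -> unsigned int conversion truncates toward zero; __float_as_uint(t)
// then receives that integer converted back to float.
inline float payloadRoundTripUint(float tmax) {
    const unsigned int t = static_cast<unsigned int>(tmax);
    return uintAsFloat(floatAsUint(static_cast<float>(t)));
}
```

With this, `payloadRoundTripFloat(12.75f)` gives back 12.75, while `payloadRoundTripUint(12.75f)` gives 12.0.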

I tried implementing something similar in my modified version of the closest-hit (CH) program, in two different ways:

- I changed my per-ray data from vec3f to vec4f and stored the depth in the fourth component.

- I added a new payload value and passed the depth through it, just like the RayCasting example.

```cpp
extern "C" __global__ void __closesthit__radiance()
{
    const TriangleMeshSBTData &sbtData
        = *(const TriangleMeshSBTData*)optixGetSbtDataPointer();

    // get depth
    const uint32_t t = optixGetRayTmax();

    // vec3f &prd = *(vec3f*)getPRD<vec3f>();
    vec4f &prd = *(vec4f*)getPRD<vec4f>();

    // color from the SBT record, depth in the fourth component
    prd[0] = sbtData.color[0];
    prd[1] = sbtData.color[1];
    prd[2] = sbtData.color[2];
    prd[3] = t;
}
```
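For the first approach, the course passes a pointer to the per-ray data through two 32-bit payload values. The sketch below mirrors that on the host with a hypothetical `PerRayData` struct that holds the depth as a `float` instead of reusing a `vec4f`. `packPointer`/`unpackPointer` follow the course's helpers in spirit; `closestHitSketch` is a made-up stand-in for the closest-hit program, not OptiX code. Keeping the member a float means nothing along the way truncates the hit distance.

```cpp
#include <cstdint>

// Hypothetical per-ray data: color plus a float depth.
struct PerRayData {
    float r, g, b;
    float depth;
};

// Split a 64-bit pointer into two 32-bit payload values (high, low).
inline void packPointer(void* ptr, std::uint32_t& i0, std::uint32_t& i1) {
    const std::uint64_t uptr = reinterpret_cast<std::uint64_t>(ptr);
    i0 = static_cast<std::uint32_t>(uptr >> 32);
    i1 = static_cast<std::uint32_t>(uptr & 0xffffffffull);
}

// Rebuild the pointer from the two payload values.
inline void* unpackPointer(std::uint32_t i0, std::uint32_t i1) {
    const std::uint64_t uptr = (static_cast<std::uint64_t>(i0) << 32) | i1;
    return reinterpret_cast<void*>(uptr);
}

// Stand-in for the closest-hit program: recover the pointer from the
// payload values and write color plus the float hit distance.
inline void closestHitSketch(std::uint32_t u0, std::uint32_t u1,
                             float tmax, float r, float g, float b) {
    PerRayData& prd = *static_cast<PerRayData*>(unpackPointer(u0, u1));
    prd.r = r;
    prd.g = g;
    prd.b = b;
    prd.depth = tmax;   // stays a float; no integer in between
}
```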

Then, when writing to the buffer, I extracted the depth from the vec4f and saved it to a separate depth buffer, following the syntax of the original example. Instead of the RGB values, I set all three channels to the depth (this rendered a white depth image instead of a red one). I also tried doing this just like the RayCasting example.

Modified from the OptiX 7 SIGGRAPH course:

```cpp
vec4f pixelColorPRD = vec4f(0.f);

// the values we store the PRD pointer in:
uint32_t u0, u1, u2;
packPointer( &pixelColorPRD, u0, u1 );

optixTrace(optixLaunchParams.traversable,
           camera.position,
           rayDir,
           0.f,    // t_min
           1e2f,   // t_max
           0.0f,   // rayTime
           OptixVisibilityMask( 255 ),
           OPTIX_RAY_FLAG_DISABLE_ANYHIT, // OPTIX_RAY_FLAG_NONE,
           SURFACE_RAY_TYPE,  // SBT Offset
           RAY_TYPE_COUNT,    // SBT Stride
           SURFACE_RAY_TYPE,  // miss SBT Index
           u0, u1 );

const int r = int(255.99f*pixelColorPRD.x);
const int g = int(255.99f*pixelColorPRD.y);
const int b = int(255.99f*pixelColorPRD.z);
const int d = int(pixelColorPRD.w);

// convert to 32-bit rgba value (we explicitly set alpha to 0xff
// to make stb_image_write happy ...
const uint32_t rgba = 0xff000000
    | (r<<0) | (g<<8) | (b<<16);
const uint32_t depth = 0xff000000
    | (d<<0) | (d<<8) | (d<<16);

// and write to frame buffer ...
const uint32_t fbIndex = ix+iy*optixLaunchParams.frame.size.x;
optixLaunchParams.frame.colorBuffer[fbIndex] = rgba;
optixLaunchParams.frame.depthBuffer[fbIndex] = depth;
```
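In this version, `int(pixelColorPRD.w)` drops the fractional part, and the packing puts the unscaled value into each 8-bit channel. Here is a host-side C++ sketch of both the original packing and a normalized alternative; the function names and the near/far range are my own assumptions. Values above 255 would spill into the neighbouring channel (256 packs to 0xff010100, almost black), so scaling the depth into 0 to 255 and clamping seems safer. With the t_max of 1e2f used above, hit depths stay below 256, so the spill is latent here; what remains is that only whole units survive.

```cpp
#include <algorithm>
#include <cstdint>

// Original-style packing: d goes unscaled into R, G and B.
// Values above 255 spill into the next channel up.
inline std::uint32_t packGreyUnscaled(int d) {
    const std::uint32_t u = static_cast<std::uint32_t>(d);
    return 0xff000000u | (u << 0) | (u << 8) | (u << 16);
}

// Map depth in [nearDepth, farDepth] linearly to 0..255 (clamped),
// keep the fraction until the final 8-bit conversion, and replicate
// the grey value into R, G and B with alpha = 0xff.
inline std::uint32_t packGreyNormalized(float depth, float nearDepth, float farDepth) {
    float s = (depth - nearDepth) / (farDepth - nearDepth);
    s = std::min(std::max(s, 0.0f), 1.0f);
    const std::uint32_t g = static_cast<std::uint32_t>(255.99f * s);
    return 0xff000000u | (g << 0) | (g << 8) | (g << 16);
}
```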

Following the RayCasting example:

```cpp
optixTrace(optixLaunchParams.traversable,
           camera.position,
           rayDir,
           0.f,    // t_min
           1e2f,   // t_max
           0.0f,   // rayTime
           OptixVisibilityMask( 255 ),
           OPTIX_RAY_FLAG_DISABLE_ANYHIT, // OPTIX_RAY_FLAG_NONE,
           SURFACE_RAY_TYPE,  // SBT Offset
           RAY_TYPE_COUNT,    // SBT Stride
           SURFACE_RAY_TYPE,  // miss SBT Index
           u0, u1, u2);

const int r = int(255.99f*pixelColorPRD.x);
const int g = int(255.99f*pixelColorPRD.y);
const int b = int(255.99f*pixelColorPRD.z);
const float depth = __uint_as_float( u2 );

// convert to 32-bit rgba value (we explicitly set alpha to 0xff
// to make stb_image_write happy ...
const uint32_t rgba = 0xff000000
    | (r<<0) | (g<<8) | (b<<16);

// and write to frame buffer ...
const uint32_t fbIndex = ix+iy*optixLaunchParams.frame.size.x;
optixLaunchParams.frame.colorBuffer[fbIndex] = rgba;
optixLaunchParams.frame.depthBuffer[fbIndex] = depth;
}
```
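In this RayCasting-style version, `u2` is only written on a hit (by the closest-hit program, e.g. via `optixSetPayload_2( __float_as_uint( t ) )`); on a miss it keeps whatever it held before `optixTrace`, and here it is never initialized. The host-side sketch below seeds it with a sentinel so misses can be told apart; all names are hypothetical and `std::memcpy` stands in for the CUDA bit-cast intrinsics. Two related things I would double-check: a third payload value needs `OptixPipelineCompileOptions::numPayloadValues` of at least 3 (I believe the course sets it to 2), and `depthBuffer` has to be a `float*` here, since storing a float into a `uint32_t` buffer would truncate it again.

```cpp
#include <cstdint>
#include <cstring>

// Bit-exact float <-> uint32 reinterpretation (host stand-ins for the CUDA intrinsics).
inline std::uint32_t bitsOf(float f) {
    std::uint32_t u;
    std::memcpy(&u, &f, sizeof u);
    return u;
}

inline float floatFromBits(std::uint32_t u) {
    float f;
    std::memcpy(&f, &u, sizeof f);
    return f;
}

// Stand-in for optixTrace with a third payload slot: on a hit the
// "closest-hit" writes the float distance into u2; on a miss nothing touches it.
inline void traceSketch(bool hit, float hitDistance, std::uint32_t& u2) {
    if (hit) {
        u2 = bitsOf(hitDistance);   // like optixSetPayload_2( __float_as_uint( t ) )
    }
}

// Raygen side: seed u2 with a sentinel (-1) meaning "no hit" instead of
// reading an uninitialized value after the trace.
inline float depthForPixel(bool hit, float hitDistance) {
    std::uint32_t u2 = bitsOf(-1.0f);
    traceSketch(hit, hitDistance, u2);
    return floatFromBits(u2);
}
```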

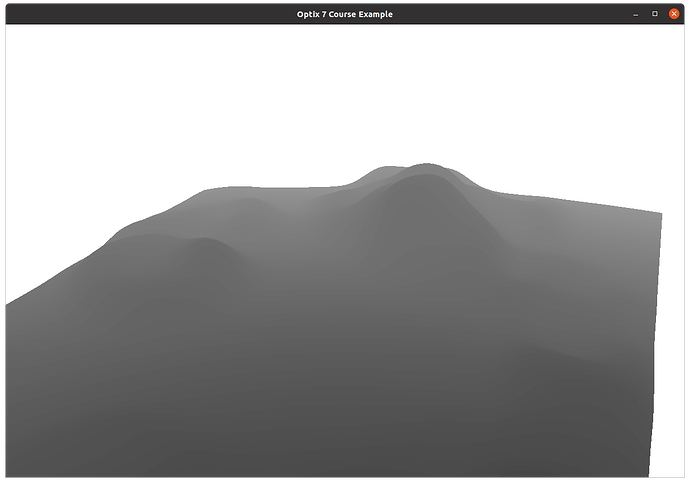

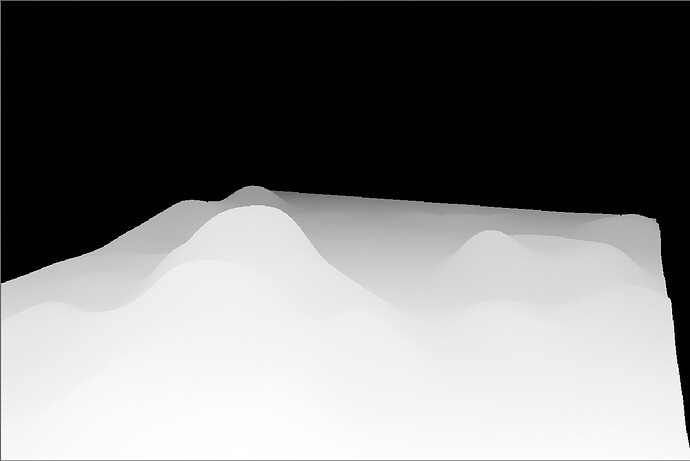

This renders a depth image. However, there are striations in the image. I am guessing they come from some kind of clipping of the depth values in the way they are sent back to the host?

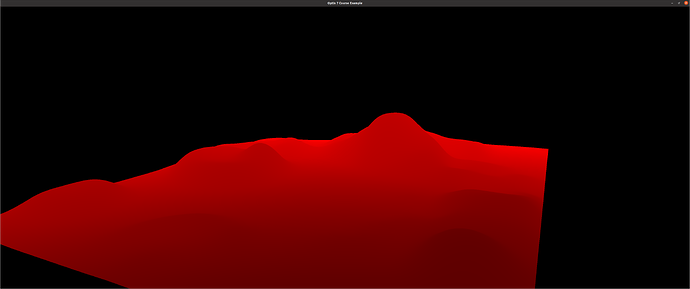
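To see whether truncation alone could produce bands like these, here is a synthetic C++ test (made-up data, not output from my renderer; `distinctDepths` is my own helper). It models a scanline whose true depth ramps from 20 to 24 units across 400 pixels. Passing each value through an `unsigned int` leaves only 4 distinct depths, i.e. four flat bands of 100 pixels each, while the float version keeps all 400 values.

```cpp
#include <cstddef>
#include <set>

// Count distinct depth values along a synthetic scanline whose true depth
// ramps linearly from d0 (first pixel) toward d1. With truncate = true,
// each depth first passes through an unsigned int, as in
// `const unsigned int t = optixGetRayTmax();`.
inline std::size_t distinctDepths(float d0, float d1, int pixels, bool truncate) {
    std::set<float> values;
    for (int i = 0; i < pixels; ++i) {
        const float t = d0 + (d1 - d0) * (static_cast<float>(i) / pixels);
        values.insert(truncate ? static_cast<float>(static_cast<unsigned int>(t)) : t);
    }
    return values.size();
}
```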

I can also render an image with the RayCasting-style approach, but it comes out with a red hue. I think I partially understand why, but I have no experience with OpenGL, so I couldn’t figure out how to display just one channel.
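One way to look at a single-channel float depth buffer without changing the OpenGL side might be to convert it to grey on the host and reuse the RGBA8 path of the color buffer. A sketch, assuming the depth buffer has been copied into a `std::vector<float>` and that misses are stored as 0 or less (a hypothetical convention; `depthToGreyRGBA` is my own name). Alpha is forced to 0xff: if raw float bits were written as 8-bit RGBA in the 0xAABBGGRR layout used above, the float's sign and exponent byte would land in alpha, and image viewers would treat the image as mostly transparent.

```cpp
#include <algorithm>
#include <cstddef>
#include <cstdint>
#include <vector>

// Convert a float depth buffer (one value per pixel) into packed RGBA8 grey.
// Assumption: depth <= 0 marks a miss, written as opaque black. Hits are
// scaled between the nearest (black) and farthest (white) hit depth, so the
// nearest hit and a miss look the same; change the miss color if that matters.
inline std::vector<std::uint32_t> depthToGreyRGBA(const std::vector<float>& depth) {
    float lo = 0.0f, hi = 0.0f;
    bool any = false;
    for (float d : depth) {
        if (d <= 0.0f) continue;
        if (!any) { lo = hi = d; any = true; }
        lo = std::min(lo, d);
        hi = std::max(hi, d);
    }
    const float range = (hi > lo) ? (hi - lo) : 1.0f;

    std::vector<std::uint32_t> out(depth.size(), 0xff000000u);  // opaque black
    for (std::size_t i = 0; i < depth.size(); ++i) {
        if (depth[i] <= 0.0f) continue;                          // miss stays black
        const float s = (depth[i] - lo) / range;                 // 0 = nearest, 1 = farthest
        const std::uint32_t g = static_cast<std::uint32_t>(255.99f * s);
        out[i] = 0xff000000u | g | (g << 8) | (g << 16);         // same value in R, G, B
    }
    return out;
}
```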

So I have been trying to understand where these striations come from. My first thought was that they had something to do with converting back and forth between int and float, but I don’t yet have enough experience to understand what happens in each conversion. I wanted to inspect the data directly, but had trouble even printing values to the terminal. I eventually figured out how to save the image to a file instead of rendering it to a window. However, I can only save the RGB image, not the depth image. The RGB image saves upside down (probably easy to fix), but the depth image just shows a pattern of squares, as if it were an invisible layer. While debugging this, I changed the depth line to const float t = optixGetRayTmax(); and, to my surprise, this seemed to get rid of the striations.

I am not sure whether they are really gone or I just don’t want to see them, but it looks better. Can you explain why this would be the case? I am hoping to develop a better understanding of, and intuition for, these seemingly simple questions.
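For the upside-down RGB file: assuming the launch writes row `iy = 0` at the bottom of the image (OpenGL's bottom-left origin), while PNG stores the top row first, flipping the rows before saving should fix it. stb_image_write also provides `stbi_flip_vertically_on_write(1)` for this. A minimal C++ sketch (`flipRowsInPlace` is my own name):

```cpp
#include <algorithm>
#include <cstddef>
#include <cstdint>
#include <vector>

// Flip a row-major image (width x height packed RGBA8 pixels) upside down
// in place by swapping row y with row (height - 1 - y).
// For odd heights the middle row stays where it is.
inline void flipRowsInPlace(std::vector<std::uint32_t>& pixels, int width, int height) {
    for (int y = 0; y < height / 2; ++y) {
        auto top = pixels.begin() + static_cast<std::ptrdiff_t>(y) * width;
        auto bottom = pixels.begin() + static_cast<std::ptrdiff_t>(height - 1 - y) * width;
        std::swap_ranges(top, top + width, bottom);
    }
}
```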

Is there a better way to get the depth? As mentioned above, I eventually want to render many sparse images from various viewpoints, so I want to make sure I am setting this up efficiently.
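One thing I am not sure about: `optixGetRayTmax()` in the closest-hit program returns the hit distance along the ray in units of the ray direction's length, so with a normalized `rayDir` it is the Euclidean distance from the camera, not the depth along the viewing axis. If planar depth is what's wanted, my understanding is that it can be derived per ray as below. This is a sketch; `Vec3`, `dot`, `normalize` and `planarDepth` are my own minimal helpers, not gdt or OptiX API.

```cpp
#include <cmath>

// Minimal 3-vector for this sketch (the course's vec3f would play the same role).
struct Vec3 { float x, y, z; };

inline float dot(const Vec3& a, const Vec3& b) {
    return a.x * b.x + a.y * b.y + a.z * b.z;
}

inline Vec3 normalize(const Vec3& v) {
    const float len = std::sqrt(dot(v, v));
    return {v.x / len, v.y / len, v.z / len};
}

// Convert hit distance t (measured along a normalized ray direction) into
// depth along the camera's viewing axis: t * cos(angle between ray and axis).
// Assumes the rayDir passed to optixTrace was normalized.
inline float planarDepth(float t, const Vec3& rayDir, const Vec3& cameraForward) {
    return t * dot(normalize(rayDir), normalize(cameraForward));
}
```

For a ray at 45 degrees to the viewing axis, a hit at distance 5·√2 comes out as planar depth 5.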

Finally, can you point me to any documentation or instructions on setting up a good debugging environment for OptiX? I am using VS Code to write everything and CMake to build.

I am on Ubuntu 20.04 with OptiX 7.7 (I have OptiX 8 too, but have done most of the work in 7.7), CUDA 11.8, and an NVIDIA RTX 3080.

Thank you!

Benjamin