Description

Hello!

My task is to train RetinaNet (backbone: ResNet50, framework: Keras).

RetinaNet:

After training, I got the weights as an .h5 file. Next, I need to convert the weights into an ONNX model with a dynamic batch size.
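For reference, ONNX stores each input dimension either as a fixed integer (`dim_value`) or as a symbolic name (`dim_param`). A dynamic batch size means the first dimension of the input is symbolic rather than fixed. The sketch below uses a hypothetical helper, `dynamic_dims` (not part of any library), that reports which dimensions of an input shape are not fixed. It assumes the shape has already been read into a plain Python list.

```python
# Sketch: report which input dimensions are dynamic.
# Convention assumed here: int > 0 -> fixed size; str -> symbolic (dim_param);
# 0, negative, or None -> unknown (an unset dim_value reads back as 0 in protobuf).
from typing import List, Sequence, Union

Dim = Union[int, str, None]


def dynamic_dims(dims: Sequence[Dim]) -> List[int]:
    """Return the indices of dimensions that are not fixed positive sizes."""
    return [i for i, d in enumerate(dims) if not (isinstance(d, int) and d > 0)]


if __name__ == "__main__":
    # With the onnx package installed, the dims of the first input could be read as:
    #   model = onnx.load("retinanet-bbox.onnx")
    #   dims = [d.dim_param or d.dim_value
    #           for d in model.graph.input[0].type.tensor_type.shape.dim]
    dims = ["N", 512, 512, 3]  # hypothetical example: symbolic batch, fixed HWC
    print(dynamic_dims(dims))  # the batch is dynamic if index 0 is in the result
```

If index `0` is not in the result, the batch dimension was exported as a fixed size, so a min/opt/max profile spanning several batch sizes would not match it.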

I took the code for converting the .h5 weights into an ONNX model from here: keras2onnx

Then I want to convert the ONNX model to a TensorRT engine using trtexec. I do get an ONNX model, but the subsequent conversion to a TensorRT engine fails.

When I run the command:

trtexec --onnx=retinanet-bbox.onnx --saveEngine=retinaNet.trt --minShapes=images:1x512x512x3 --optShapes=images:6x512x512x3 --maxShapes=images:12x512x512x3 --useCudaGraph --memPoolSize=workspace:3000 --noTF32 --fp16
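The three shape flags in this command define one optimization profile for the input `images`, and TensorRT requires every dimension to satisfy min ≤ opt ≤ max. The sketch below shows how those flags could be assembled and sanity-checked before calling trtexec. The helper names `shape_str` and `profile_flags` are hypothetical; the input name and the 512x512x3 NHWC layout are taken from the command above. It only validates the profile arguments and says nothing about whether the network itself can be built.

```python
# Sketch: build the --minShapes/--optShapes/--maxShapes arguments for trtexec
# and check min <= opt <= max per dimension (a TensorRT profile requirement).
# Helper names are hypothetical; "images" and 512x512x3 come from the command.
from typing import List, Sequence


def shape_str(shape: Sequence[int]) -> str:
    """Format a shape the way trtexec expects, e.g. (1, 512, 512, 3) -> '1x512x512x3'."""
    return "x".join(str(d) for d in shape)


def profile_flags(name: str,
                  min_shape: Sequence[int],
                  opt_shape: Sequence[int],
                  max_shape: Sequence[int]) -> List[str]:
    """Return the three shape flags for one input, validating the profile first."""
    if not (len(min_shape) == len(opt_shape) == len(max_shape)):
        raise ValueError("min/opt/max shapes must have the same rank")
    for i, (lo, opt, hi) in enumerate(zip(min_shape, opt_shape, max_shape)):
        if not (lo <= opt <= hi):
            raise ValueError(f"dim {i}: need min <= opt <= max, got {lo}, {opt}, {hi}")
    return [
        f"--minShapes={name}:{shape_str(min_shape)}",
        f"--optShapes={name}:{shape_str(opt_shape)}",
        f"--maxShapes={name}:{shape_str(max_shape)}",
    ]


if __name__ == "__main__":
    flags = profile_flags("images", (1, 512, 512, 3), (6, 512, 512, 3), (12, 512, 512, 3))
    print(" ".join(["trtexec", "--onnx=retinanet-bbox.onnx"] + flags))
```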

I get an error:

Error[2]: [myelinBuilderUtils.cpp::getMyelinSupportType::1270] Error Code 2: Internal Error (ForeignNode does not support data-dependent shape for now.)

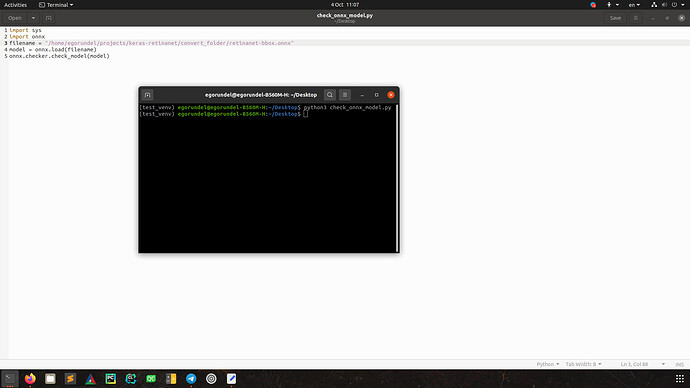
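The error refers to a data-dependent shape: an output whose size is only known at run time from the tensor values, not from the input shapes alone. Assuming the exported `retinanet-bbox` graph includes the detection post-processing (the file name suggests the box filtering stage is part of the model, but that is an assumption), non-maximum suppression (NMS) is a typical source of such shapes. The pure-Python greedy NMS below is an illustration only, not the keras-retinanet or TensorRT implementation: two inputs with the same shape (three boxes) produce outputs of different lengths.

```python
# Minimal sketch of greedy non-maximum suppression (NMS) in pure Python.
# It illustrates a data-dependent output shape: the number of kept boxes
# depends on the box coordinates and scores, not only on the input shape.
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: List[Box], scores: List[float], iou_threshold: float = 0.5) -> List[int]:
    """Return indices of kept boxes, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        # Keep a box only if it does not overlap any already-kept box too much.
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep


if __name__ == "__main__":
    scores = [0.9, 0.8, 0.7]
    overlapping = [(0, 0, 10, 10), (1, 0, 11, 10), (0, 1, 10, 11)]
    separate = [(0, 0, 10, 10), (20, 20, 30, 30), (40, 40, 50, 50)]
    # Same input shape (3 boxes), different output lengths: 1 vs 3.
    print(len(nms(overlapping, scores)), len(nms(separate, scores)))
```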

My ONNX model appears to be correct: I checked it with this script: check_onnx_model.py

Maybe I’m creating the ONNX model incorrectly…
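An ONNX validity check (for example `onnx.checker.check_model`) confirms that the graph is well-formed ONNX, but it does not by itself show that TensorRT can build an engine from it. One way to narrow the problem down is to list the op types in the graph and look for ops whose output shape depends on tensor values. The sketch below assumes a non-exhaustive set of such ops; the set and the helper `find_data_dependent_ops` are hypothetical, not a TensorRT or ONNX API.

```python
# Sketch: flag ONNX op types whose output shape depends on tensor values.
# The set below is an assumed, non-exhaustive list; TopK can also be
# data-dependent when its K input is computed at run time.
from collections import Counter
from typing import Dict, Iterable

# Assumption: ops whose output shape is determined by input *values*.
DATA_DEPENDENT_OPS = {"NonMaxSuppression", "NonZero", "Unique", "Compress"}


def find_data_dependent_ops(op_types: Iterable[str]) -> Dict[str, int]:
    """Count occurrences of the assumed data-dependent op types in a node list."""
    return dict(Counter(op for op in op_types if op in DATA_DEPENDENT_OPS))


if __name__ == "__main__":
    # Hypothetical op list. With the onnx package installed, it could come from:
    #   [n.op_type for n in onnx.load("retinanet-bbox.onnx").graph.node]
    # Note: that flat scan does not descend into Loop/If subgraphs.
    example_ops = ["Conv", "Relu", "Conv", "NonMaxSuppression", "Gather", "TopK"]
    print(find_data_dependent_ops(example_ops))
```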

I can't understand why this is happening. Could you help me solve this problem?

P.S. An Internet search did not turn up an answer to this problem. I have attached my ONNX model below.

Environment

TensorRT Version: 8.6

GPU Type: RTX3060

Nvidia Driver Version: nvidia-driver-535 (proprietary)

CUDA Version: 11.1

CUDNN Version: 8.0.4

Operating System + Version: Ubuntu 20.04

Python Version (if applicable): 3.8

TensorFlow Version (if applicable): 2.4.0

Relevant Files

model.h5:

retinanet-bbox.onnx: