Hi.

I profiled an nn.Linear(1408, 1408) layer in Nsight Compute.

(input shape: (256, 6, 1408), output shape: (256, 6, 1408))
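For readers who want the problem size at a glance, here is a rough sketch of how these shapes map to a single GEMM. It assumes the layer is computed as one matrix multiply with all leading input dimensions flattened into rows, and it ignores the bias add. The helper name linear_as_gemm is hypothetical and only for illustration.

```python
from math import prod


def linear_as_gemm(input_shape, in_features, out_features):
    """Return (M, N, K, flops) for y = x @ W.T, with x of shape input_shape.

    Assumption: the leading dimensions are flattened into M rows and the
    bias add is ignored.
    """
    if input_shape[-1] != in_features:
        raise ValueError("last input dimension must equal in_features")
    m = prod(input_shape[:-1])  # all leading dims become GEMM rows
    n = out_features
    k = in_features
    flops = 2 * m * n * k  # one multiply and one add per multiply-accumulate
    return m, n, k, flops


m, n, k, flops = linear_as_gemm((256, 6, 1408), 1408, 1408)
print(f"M={m}, N={n}, K={k}, FLOPs={flops:,}")
```

With the shapes above this gives M = 256 * 6 = 1536 and N = K = 1408, so the profiled kernel is a 1536 x 1408 x 1408 matrix multiply.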

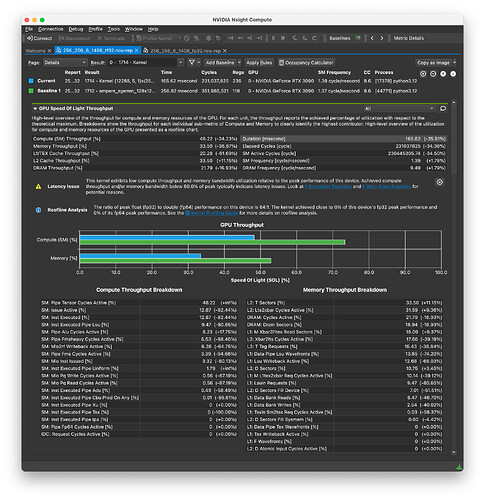

I used fp32 for the first profiling run, which gave 73.32% SM throughput and 63.44% FMA pipe utilization (which seems to be using the compute units well…).

But when I used tf32 for the same kernel(added torch.backends.cuda.matmul.allow_tf32 = True and

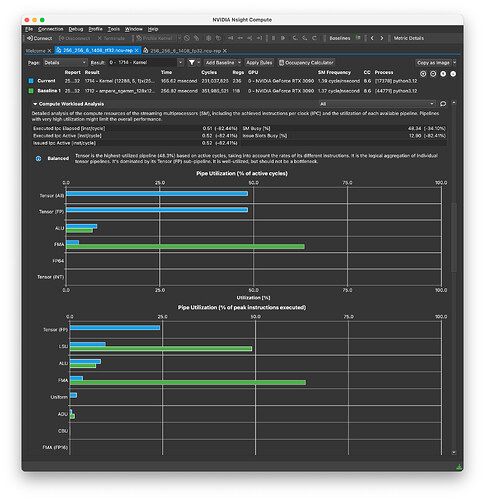

torch.backends.cudnn.allow_tf32 = True), SM throughput goes down to 48.22%. Also, tensor core utilization in Compute Workload Analysis saids tensor pipe utilization is only 48.34%. And the gpu__time_duration.sum is reduced by 35.51%.
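For anyone trying to reproduce this, the setup could be sketched roughly as follows. This is not the exact script that was profiled: the random input, the device fallback, and the helper name run_linear are assumptions, and only the two allow_tf32 flags and the shapes come from the description above.

```python
import torch
import torch.nn as nn


def run_linear(use_tf32, shape=(256, 6, 1408), features=1408):
    """Run one forward pass of nn.Linear with TF32 allowed or disallowed."""
    # The two flags named above; they only affect float32 math on CUDA devices.
    torch.backends.cuda.matmul.allow_tf32 = use_tf32
    torch.backends.cudnn.allow_tf32 = use_tf32

    # Assumption: fall back to CPU so the sketch still runs without a GPU.
    device = "cuda" if torch.cuda.is_available() else "cpu"
    layer = nn.Linear(features, features).to(device)
    x = torch.randn(*shape, device=device)

    with torch.no_grad():
        y = layer(x)
    if device == "cuda":
        torch.cuda.synchronize()  # make sure the kernel has finished
    return y


y_fp32 = run_linear(use_tf32=False)
y_tf32 = run_linear(use_tf32=True)
print(tuple(y_fp32.shape), tuple(y_tf32.shape))
```

Running a script like this under Nsight Compute once per setting should produce the two reports being compared.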

I know that SM throughput and pipe utilization can go up with increasing batch size, but the tensor pipe utilization does not go above 50%.

I cannot understand why this is happening.

- Why is the tensor pipe utilization for tf32 so low?

- Why does the tensor pipe utilization not go up with higher batch sizes?

(Similar things happened when I used nn.Linear(1152, 1152) with input shape (64, 16, 1152) and output shape (64, 16, 1152).)

I attached screenshots of the ncu-rep reports for both tf32 and fp32 (the blue bar is tf32 and the green bar is fp32). I would very much appreciate any insights on this.

tf32 vs fp32.pdf (2.1 MB)