Hi, everyone!

Now I have a trained mb1-ssd-Epoch-…pth and an ssd-mobilenet.onnx file, both from training on our own dataset.

Now I want to load the trained SSD-Mobilenet-v1 model for other tasks, for which I need the return values of the object detection, for example the detected class and its confidence.

Reading the example code from JetBot, I see two ways to load a model.

One way is:

from jetbot import ObjectDetector

model = ObjectDetector('ssd_mobilenet_v2_coco.engine')

The other is model = torchvision.models… followed by model.load_state_dict(torch.load('–.pth')).

But there is no pre-trained models.mobilenet_v1 available in PyTorch (torchvision), so I cannot use this approach.
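For the .pth checkpoint, torchvision is not actually required: the pytorch-ssd code that train_ssd.py itself uses builds the MobileNet-v1 SSD network directly. The sketch below assumes it is run from jetson-inference's python/training/detection/ssd directory so that the `vision` package is importable, and that `create_mobilenetv1_ssd`, `create_mobilenetv1_ssd_predictor` and `predictor.predict()` behave as in that repo's run_ssd_example.py. The helper names `to_results` and `load_and_predict` are hypothetical, and all paths are placeholders.

```python
# Sketch: load a train_ssd.py checkpoint (.pth) without torchvision and read back
# each detection's class name and confidence.
# Assumptions: run from the pytorch-ssd directory of jetson-inference so that its
# `vision` package is importable; OpenCV is installed; all paths are placeholders.


def to_results(boxes, labels, probs, class_names):
    """Pair each detection with its class name and confidence (hypothetical helper)."""
    results = []
    for box, label, prob in zip(boxes, labels, probs):
        results.append({
            "class": class_names[int(label)],
            "confidence": float(prob),
            "box": [float(v) for v in box],  # [x1, y1, x2, y2] in pixels
        })
    return results


def load_and_predict(model_path, labels_path, image_path, top_k=10, prob_threshold=0.4):
    # Imported here so to_results() can be used without the training code installed.
    import cv2
    from vision.ssd.mobilenetv1_ssd import (
        create_mobilenetv1_ssd,
        create_mobilenetv1_ssd_predictor,
    )

    # labels.txt saved by train_ssd.py (assumed to list BACKGROUND as class 0)
    with open(labels_path) as f:
        class_names = [line.strip() for line in f if line.strip()]

    net = create_mobilenetv1_ssd(len(class_names), is_test=True)
    net.load(model_path)  # loads the weights from the .pth checkpoint
    predictor = create_mobilenetv1_ssd_predictor(net, candidate_size=200)

    image = cv2.cvtColor(cv2.imread(image_path), cv2.COLOR_BGR2RGB)
    boxes, labels, probs = predictor.predict(image, top_k, prob_threshold)
    return to_results(boxes.tolist(), labels.tolist(), probs.tolist(), class_names)
```

Keeping the result formatting in a separate plain function means the same dicts can be handed to other code (for example robot control logic) without that code depending on PyTorch.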

Is it possible to build our own "ssd_mobilenet_v1_coco.engine" from our trained .pth or .onnx file?

I see there is an ssd_tensorrt folder. Can we use it for this?

Hi @MaxMarth, I'm not sure that JetBot supports the ONNX-based SSD-Mobilenet models trained with train_ssd.py from jetson-inference. The JetBot object detection code is set up to use the UFF detection models that were exported from TensorFlow.

Are you actually using JetBot, or are you just trying to run the model that you trained? I ask because detectnet/detectnet.py from the jetson-inference tutorial is the typical way to deploy models trained with train_ssd.py. If you are indeed using JetBot, you could try changing its object_detection.py code to use jetson.inference.detectNet instead.

Hi @dusty_nv,

Actually, I am using JetBot.

I am now trying two ways to use the trained model.

The first is to convert the .onnx file into an .engine file by following this: TensorRT/samples/trtexec at master · NVIDIA/TensorRT · GitHub

But I can't get it to work on JetBot.

The second is to use detectnet.py from jetson-inference within JetBot; I am still working on that.

Could you give me some suggestions on these two approaches?

Later I will try your suggestion of changing object_detection.py in JetBot to use jetson.inference.detectNet.

The jetson.inference.detectNet interface (aka detectnet.py) is the recommended way to use the ssd-mobilenet.onnx trained with train_ssd.py. Otherwise you may need to make code changes to JetBot, etc. to support the pre/post-processing that the model expects.
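To get the detected class and confidence out of the ONNX model with detectNet, a minimal sketch follows. It assumes the jetson-inference Python bindings are installed on the Jetson; input_0, scores and boxes are the layer names the jetson-inference re-training tutorial passes for models exported from train_ssd.py; the paths and the helper names `detectnet_argv` and `detect_file` are placeholders. detectNet builds a TensorRT engine from the ONNX file the first time it loads it and caches that engine, so this path does not need a separate trtexec step.

```python
# Sketch: run the ONNX model exported by train_ssd.py through jetson.inference.detectNet
# and print each detection's class name and confidence.
# Assumptions: jetson-inference Python bindings are installed; paths are placeholders;
# input_0 / scores / boxes are the layer names used in the re-training tutorial.


def detectnet_argv(model_path, labels_path):
    """Command-line style options detectNet needs for a train_ssd.py ONNX model."""
    return [
        f"--model={model_path}",
        f"--labels={labels_path}",
        "--input-blob=input_0",
        "--output-cvg=scores",
        "--output-bbox=boxes",
    ]


def detect_file(model_path, labels_path, image_path, threshold=0.5):
    # Imported here so detectnet_argv() can be used off-device.
    import jetson.inference
    import jetson.utils

    net = jetson.inference.detectNet(argv=detectnet_argv(model_path, labels_path),
                                     threshold=threshold)
    img = jetson.utils.loadImage(image_path)  # loads the image into CUDA memory
    for det in net.Detect(img):
        # ClassID indexes labels.txt; Confidence is the detection score
        print(net.GetClassDesc(det.ClassID), f"{det.Confidence:.2f}",
              det.Left, det.Top, det.Right, det.Bottom)
```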

To run trtexec, try this:

$ cd /usr/src/tensorrt/bin

$ ./trtexec
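Run without arguments, trtexec only prints its usage. To actually convert the ONNX file, it is typically given --onnx and --saveEngine (and optionally --fp16). Keeping to Python like the rest of this thread, here is a small hypothetical helper that builds and runs that command; the binary path is the one above and the file names are placeholders.

```python
# Sketch: build and run a trtexec command that serializes the ONNX model into a
# TensorRT engine. --onnx, --saveEngine and --fp16 are standard trtexec options;
# trtexec_cmd/build_engine are hypothetical helper names.
import subprocess

TRTEXEC = "/usr/src/tensorrt/bin/trtexec"  # location used in the reply above


def trtexec_cmd(onnx_path, engine_path, fp16=True):
    cmd = [TRTEXEC, f"--onnx={onnx_path}", f"--saveEngine={engine_path}"]
    if fp16:
        cmd.append("--fp16")  # build with half precision enabled
    return cmd


def build_engine(onnx_path, engine_path, fp16=True):
    # check=True raises CalledProcessError if trtexec exits with an error
    subprocess.run(trtexec_cmd(onnx_path, engine_path, fp16), check=True)
```

An engine built this way is tied to the TensorRT version and device it was built on, and loading it through JetBot's ObjectDetector may still not match this model's pre/post-processing.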

Thank you @dusty_nv,

I have now converted the file and loaded it on JetBot.

But I think there may be a data type conflict, since no bounding boxes or results appear in the image. There is also a warning that the ONNX model uses INT64 while the engine uses INT32.

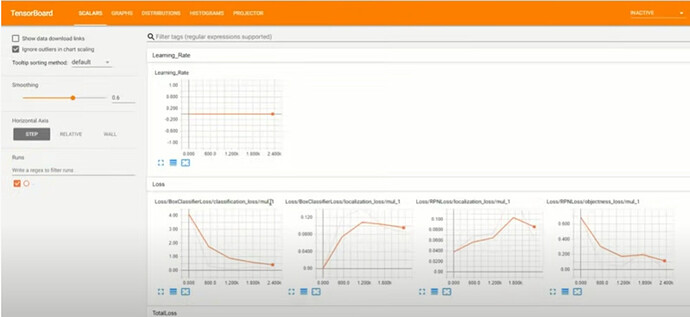

I also want to ask whether it is possible to get information about the loss function during training, or to directly get a line graph of it.

You can ignore the INT64/INT32 warning; that is fine. Unfortunately, I'm unable to directly help integrate the model with JetBot, although you are probably correct that it has different pre/post-processing. Hence my suggestion to run it with jetson.inference.detectNet() and then deliver the resulting bounding boxes to JetBot.
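One way to deliver detectNet's bounding boxes to JetBot is to convert them into the shape JetBot's object-following notebook reads from ObjectDetector: a list per image of dicts with label, confidence and a bbox normalized to 0..1. That format is my reading of JetBot's object_detection.py and should be checked against your JetBot version; `to_jetbot_detections` and `DetectNetDetector` are hypothetical names, and the camera frame is assumed to be a BGR numpy array.

```python
# Sketch: wrap jetson.inference.detectNet so it returns detections shaped like
# JetBot's ObjectDetector output, i.e. [[{"label", "confidence", "bbox"}, ...]]
# with bbox = [xmin, ymin, xmax, ymax] normalized to 0..1 (assumed format).


def to_jetbot_detections(detections, width, height):
    """Convert one image's detectNet detections into JetBot-style dicts."""
    return [[
        {
            "label": int(d.ClassID),
            "confidence": float(d.Confidence),
            "bbox": [d.Left / width, d.Top / height,
                     d.Right / width, d.Bottom / height],
        }
        for d in detections
    ]]


class DetectNetDetector:
    """Hypothetical stand-in for jetbot.ObjectDetector backed by detectNet."""

    def __init__(self, argv, threshold=0.5):
        import numpy as np
        import jetson.inference
        import jetson.utils

        self._np = np
        self._utils = jetson.utils
        self.net = jetson.inference.detectNet(argv=argv, threshold=threshold)

    def __call__(self, image):
        # image: HxWx3 uint8 BGR array, assumed to come from JetBot's camera
        height, width = image.shape[:2]
        rgb = self._np.ascontiguousarray(image[:, :, ::-1])  # BGR -> RGB copy
        cuda_img = self._utils.cudaFromNumpy(rgb)
        return to_jetbot_detections(self.net.Detect(cuda_img), width, height)
```

Construct it with the detectNet options for your model (the --model, --labels and layer-name options from the jetson-inference re-training tutorial); the rest of the notebook can then keep indexing `detections[0]` as before.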

PyTorch supports TensorBoard, although you would need to add it to train_ssd.py yourself.
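Adding TensorBoard logging to train_ssd.py could look roughly like the sketch below. It assumes you have per-epoch loss values available in the script's epoch loop (for example the validation losses returned by its test() function; train() may need a small change to return its average loss). The tag names, log directory and helper names `make_writer` and `log_epoch` are arbitrary choices.

```python
# Sketch: log per-epoch losses from train_ssd.py to TensorBoard.
# Assumptions: loss values are already computed in the epoch loop; tag names,
# log_dir and helper names are hypothetical.


def make_writer(log_dir="runs/ssd"):
    # Requires PyTorch plus the tensorboard package.
    from torch.utils.tensorboard import SummaryWriter
    return SummaryWriter(log_dir=log_dir)


def log_epoch(writer, epoch, losses):
    """Write each named loss as a scalar; TensorBoard draws one curve per tag."""
    for name, value in losses.items():
        writer.add_scalar(f"Loss/{name}", float(value), epoch)
    writer.flush()
```

Called once per epoch, e.g. `log_epoch(writer, epoch, {"val": val_loss})`, this produces the loss-versus-epoch line graph when viewed with `tensorboard --logdir runs`.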
