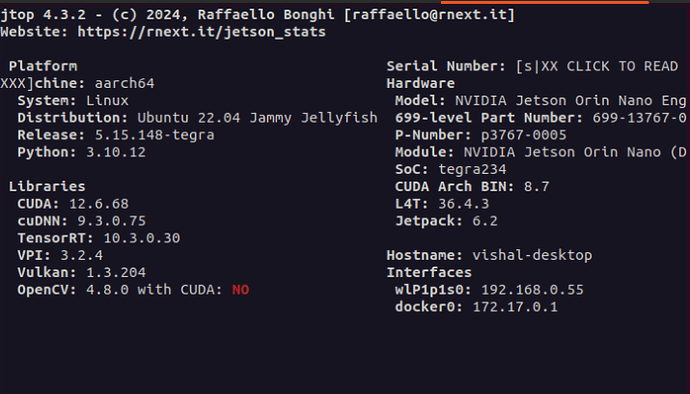

I tried installing TensorFlow 2.17 because the NVIDIA docs say JetPack 6.1 supports it (Installing TensorFlow for Jetson Platform - NVIDIA Docs).

After installing it, TensorFlow still does not detect the GPU.

Then I found this forum thread: "Could you please let me know how to install tf2.17.0 on Jetson agx orin of jetpack 6.1 without docker container?"

That thread explained how to install 2.18, but that did not work for me either.

These are the logs from installing 2.18, followed by the output I got when I checked for the GPU afterwards:

pip3 install --extra-index-url https://pypi.jetson-ai-lab.dev/jp6/cu126 tensorflow==2.18.0

Defaulting to user installation because normal site-packages is not writeable

Looking in indexes: Simple index, https://pypi.jetson-ai-lab.dev/jp6/cu126

WARNING: Retrying (Retry(total=4, connect=None, read=None, redirect=None, status=None)) after connection broken by 'NewConnectionError('<pip._vendor.urllib3.connection.HTTPSConnection object at 0xffff916df7f0>: Failed to establish a new connection: [Errno 113] No route to host')': /jp6/cu126/tensorflow/

WARNING: Retrying (Retry(total=3, connect=None, read=None, redirect=None, status=None)) after connection broken by 'NewConnectionError('<pip._vendor.urllib3.connection.HTTPSConnection object at 0xffff916dfac0>: Failed to establish a new connection: [Errno 113] No route to host')': /jp6/cu126/tensorflow/

WARNING: Retrying (Retry(total=2, connect=None, read=None, redirect=None, status=None)) after connection broken by 'NewConnectionError('<pip._vendor.urllib3.connection.HTTPSConnection object at 0xffff916dfca0>: Failed to establish a new connection: [Errno 113] No route to host')': /jp6/cu126/tensorflow/

WARNING: Retrying (Retry(total=1, connect=None, read=None, redirect=None, status=None)) after connection broken by 'NewConnectionError('<pip._vendor.urllib3.connection.HTTPSConnection object at 0xffff916dfeb0>: Failed to establish a new connection: [Errno 113] No route to host')': /jp6/cu126/tensorflow/

WARNING: Retrying (Retry(total=0, connect=None, read=None, redirect=None, status=None)) after connection broken by 'NewConnectionError('<pip._vendor.urllib3.connection.HTTPSConnection object at 0xffff916dff70>: Failed to establish a new connection: [Errno 113] No route to host')': /jp6/cu126/tensorflow/

Collecting tensorflow==2.18.0

Downloading tensorflow-2.18.0-cp310-cp310-manylinux_2_17_aarch64.manylinux2014_aarch64.whl.metadata (4.0 kB)

Requirement already satisfied: absl-py>=1.0.0 in ./.local/lib/python3.10/site-packages (from tensorflow==2.18.0) (2.3.1)

Requirement already satisfied: astunparse>=1.6.0 in ./.local/lib/python3.10/site-packages (from tensorflow==2.18.0) (1.6.3)

Requirement already satisfied: flatbuffers>=24.3.25 in ./.local/lib/python3.10/site-packages (from tensorflow==2.18.0) (25.2.10)

Requirement already satisfied: gast!=0.5.0,!=0.5.1,!=0.5.2,>=0.2.1 in ./.local/lib/python3.10/site-packages (from tensorflow==2.18.0) (0.6.0)

Requirement already satisfied: google-pasta>=0.1.1 in ./.local/lib/python3.10/site-packages (from tensorflow==2.18.0) (0.2.0)

Requirement already satisfied: libclang>=13.0.0 in ./.local/lib/python3.10/site-packages (from tensorflow==2.18.0) (18.1.1)

Requirement already satisfied: opt-einsum>=2.3.2 in ./.local/lib/python3.10/site-packages (from tensorflow==2.18.0) (3.4.0)

Requirement already satisfied: packaging in /usr/local/lib/python3.10/dist-packages (from tensorflow==2.18.0) (25.0)

Requirement already satisfied: protobuf!=4.21.0,!=4.21.1,!=4.21.2,!=4.21.3,!=4.21.4,!=4.21.5,<6.0.0dev,>=3.20.3 in ./.local/lib/python3.10/site-packages (from tensorflow==2.18.0) (4.21.12)

Requirement already satisfied: requests<3,>=2.21.0 in /usr/lib/python3/dist-packages (from tensorflow==2.18.0) (2.25.1)

Requirement already satisfied: setuptools in /usr/lib/python3/dist-packages (from tensorflow==2.18.0) (59.6.0)

Requirement already satisfied: six>=1.12.0 in /usr/lib/python3/dist-packages (from tensorflow==2.18.0) (1.16.0)

Requirement already satisfied: termcolor>=1.1.0 in ./.local/lib/python3.10/site-packages (from tensorflow==2.18.0) (3.1.0)

Requirement already satisfied: typing-extensions>=3.6.6 in /usr/local/lib/python3.10/dist-packages (from tensorflow==2.18.0) (4.14.1)

Requirement already satisfied: wrapt>=1.11.0 in ./.local/lib/python3.10/site-packages (from tensorflow==2.18.0) (1.14.1)

Requirement already satisfied: grpcio<2.0,>=1.24.3 in ./.local/lib/python3.10/site-packages (from tensorflow==2.18.0) (1.73.1)

WARNING: Retrying (Retry(total=4, connect=None, read=None, redirect=None, status=None)) after connection broken by 'NewConnectionError('<pip._vendor.urllib3.connection.HTTPSConnection object at 0xffff90f5fee0>: Failed to establish a new connection: [Errno 113] No route to host')': /jp6/cu126/tensorboard/

WARNING: Retrying (Retry(total=3, connect=None, read=None, redirect=None, status=None)) after connection broken by 'NewConnectionError('<pip._vendor.urllib3.connection.HTTPSConnection object at 0xffff90f4c190>: Failed to establish a new connection: [Errno 113] No route to host')': /jp6/cu126/tensorboard/

WARNING: Retrying (Retry(total=2, connect=None, read=None, redirect=None, status=None)) after connection broken by 'NewConnectionError('<pip._vendor.urllib3.connection.HTTPSConnection object at 0xffff90f4c340>: Failed to establish a new connection: [Errno 113] No route to host')': /jp6/cu126/tensorboard/

WARNING: Retrying (Retry(total=1, connect=None, read=None, redirect=None, status=None)) after connection broken by 'NewConnectionError('<pip._vendor.urllib3.connection.HTTPSConnection object at 0xffff90f4c4f0>: Failed to establish a new connection: [Errno 113] No route to host')': /jp6/cu126/tensorboard/

WARNING: Retrying (Retry(total=0, connect=None, read=None, redirect=None, status=None)) after connection broken by 'NewConnectionError('<pip._vendor.urllib3.connection.HTTPSConnection object at 0xffff90f4c6a0>: Failed to establish a new connection: [Errno 113] No route to host')': /jp6/cu126/tensorboard/

Collecting tensorboard<2.19,>=2.18 (from tensorflow==2.18.0)

Downloading tensorboard-2.18.0-py3-none-any.whl.metadata (1.6 kB)

Requirement already satisfied: keras>=3.5.0 in ./.local/lib/python3.10/site-packages (from tensorflow==2.18.0) (3.10.0)

Requirement already satisfied: numpy<2.1.0,>=1.26.0 in ./.local/lib/python3.10/site-packages (from tensorflow==2.18.0) (1.26.4)

Requirement already satisfied: h5py>=3.11.0 in ./.local/lib/python3.10/site-packages (from tensorflow==2.18.0) (3.14.0)

Requirement already satisfied: ml-dtypes<0.5.0,>=0.4.0 in ./.local/lib/python3.10/site-packages (from tensorflow==2.18.0) (0.4.1)

Requirement already satisfied: tensorflow-io-gcs-filesystem>=0.23.1 in ./.local/lib/python3.10/site-packages (from tensorflow==2.18.0) (0.37.1)

Requirement already satisfied: markdown>=2.6.8 in ./.local/lib/python3.10/site-packages (from tensorboard<2.19,>=2.18->tensorflow==2.18.0) (3.8.2)

Requirement already satisfied: tensorboard-data-server<0.8.0,>=0.7.0 in ./.local/lib/python3.10/site-packages (from tensorboard<2.19,>=2.18->tensorflow==2.18.0) (0.7.2)

Requirement already satisfied: werkzeug>=1.0.1 in ./.local/lib/python3.10/site-packages (from tensorboard<2.19,>=2.18->tensorflow==2.18.0) (3.1.3)

Requirement already satisfied: wheel<1.0,>=0.23.0 in /usr/lib/python3/dist-packages (from astunparse>=1.6.0->tensorflow==2.18.0) (0.37.1)

Requirement already satisfied: rich in ./.local/lib/python3.10/site-packages (from keras>=3.5.0->tensorflow==2.18.0) (14.0.0)

Requirement already satisfied: namex in ./.local/lib/python3.10/site-packages (from keras>=3.5.0->tensorflow==2.18.0) (0.1.0)

Requirement already satisfied: optree in ./.local/lib/python3.10/site-packages (from keras>=3.5.0->tensorflow==2.18.0) (0.16.0)

Requirement already satisfied: MarkupSafe>=2.1.1 in ./.local/lib/python3.10/site-packages (from werkzeug>=1.0.1->tensorboard<2.19,>=2.18->tensorflow==2.18.0) (3.0.2)

Requirement already satisfied: markdown-it-py>=2.2.0 in ./.local/lib/python3.10/site-packages (from rich->keras>=3.5.0->tensorflow==2.18.0) (3.0.0)

Requirement already satisfied: pygments<3.0.0,>=2.13.0 in ./.local/lib/python3.10/site-packages (from rich->keras>=3.5.0->tensorflow==2.18.0) (2.19.2)

Requirement already satisfied: mdurl~=0.1 in ./.local/lib/python3.10/site-packages (from markdown-it-py>=2.2.0->rich->keras>=3.5.0->tensorflow==2.18.0) (0.1.2)

Downloading tensorflow-2.18.0-cp310-cp310-manylinux_2_17_aarch64.manylinux2014_aarch64.whl (231.7 MB)

\u2501\u2501\u2501\u2501\u2501\u2501\u2501\u2501\u2501\u2501\u2501\u2501\u2501\u2501\u2501\u2501\u2501\u2501\u2501\u2501\u2501\u2501\u2501\u2501\u2501\u2501\u2501\u2501\u2501\u2501\u2501\u2501\u2501\u2501\u2501\u2501\u2501\u2501\u2501\u2501 231.7/231.7 MB 51.6 MB/s eta 0:00:00

Downloading tensorboard-2.18.0-py3-none-any.whl (5.5 MB)

\u2501\u2501\u2501\u2501\u2501\u2501\u2501\u2501\u2501\u2501\u2501\u2501\u2501\u2501\u2501\u2501\u2501\u2501\u2501\u2501\u2501\u2501\u2501\u2501\u2501\u2501\u2501\u2501\u2501\u2501\u2501\u2501\u2501\u2501\u2501\u2501\u2501\u2501\u2501\u2501 5.5/5.5 MB 46.7 MB/s eta 0:00:00

Installing collected packages: tensorboard, tensorflow

Attempting uninstall: tensorboard

Found existing installation: tensorboard 2.17.1

Uninstalling tensorboard-2.17.1:

Successfully uninstalled tensorboard-2.17.1

Attempting uninstall: tensorflow

Found existing installation: tensorflow 2.17.0

Uninstalling tensorflow-2.17.0:

Successfully uninstalled tensorflow-2.17.0

Successfully installed tensorboard-2.18.0 tensorflow-2.18.0
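In the log above, every request to `pypi.jetson-ai-lab.dev` failed with "No route to host", and the wheel that was downloaded carries a generic `manylinux2014_aarch64` tag, so it presumably came from the default index rather than the Jetson one. A minimal sketch for checking whether the extra index host can be reached before running pip again is below. The helper name `index_reachable` is hypothetical, and it only tests that a TCP connection can be opened, not that the index actually serves a TensorFlow wheel.

```python
import socket
from urllib.parse import urlparse


def index_reachable(index_url: str, timeout: float = 5.0) -> bool:
    """Return True if a TCP connection to the index host can be opened."""
    parsed = urlparse(index_url)
    # Use the explicit port if the URL has one, else the scheme default.
    port = parsed.port or (443 if parsed.scheme == "https" else 80)
    try:
        with socket.create_connection((parsed.hostname, port), timeout=timeout):
            return True
    except OSError:
        # Covers DNS failures, refused connections, timeouts and
        # "No route to host" (errno 113), as seen in the pip log.
        return False
```

Calling `index_reachable("https://pypi.jetson-ai-lab.dev/jp6/cu126")` on the Jetson should return `True` before the install is retried; if it returns `False`, pip will most likely fall back to the default index again.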

vishal@vishal-desktop:~$ python3 -c "import tensorflow as tf; print(tf.__version__, '| CUDA Enabled:', tf.test.is_built_with_cuda(), '| GPU Devices:', tf.config.list_physical_devices('GPU'))"

/usr/lib/python3/dist-packages/scipy/__init__.py:146: UserWarning: A NumPy version >=1.17.3 and <1.25.0 is required for this version of SciPy (detected version 1.26.4

warnings.warn(f"A NumPy version >={np_minversion} and <{np_maxversion}"

2.18.0 | CUDA Enabled: False | GPU Devices:
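"CUDA Enabled: False" means the installed wheel itself was built without CUDA, so no GPU can be listed regardless of the drivers on the board. The one-line check above can be expanded into a small script that also reports the CUDA and cuDNN versions the wheel was built against. This is only a sketch: the function name `tensorflow_gpu_report` is hypothetical, and it assumes that `tf.sysconfig.get_build_info()` omits the `cuda_version` and `cudnn_version` keys on a CPU-only build, which is why they are read with `.get`.

```python
import importlib.util


def tensorflow_gpu_report() -> dict:
    """Collect basic facts about the installed TensorFlow build."""
    if importlib.util.find_spec("tensorflow") is None:
        return {"installed": False}

    import tensorflow as tf

    build = tf.sysconfig.get_build_info()
    return {
        "installed": True,
        "version": tf.__version__,
        # False here means the wheel itself has no CUDA support.
        "built_with_cuda": tf.test.is_built_with_cuda(),
        # Assumed to be absent (None) on CPU-only builds.
        "cuda_version": build.get("cuda_version"),
        "cudnn_version": build.get("cudnn_version"),
        "gpus": [d.name for d in tf.config.list_physical_devices("GPU")],
    }


if __name__ == "__main__":
    for key, value in tensorflow_gpu_report().items():
        print(f"{key}: {value}")
```

If `built_with_cuda` is `False`, the problem is the wheel that was installed rather than the CUDA setup on the device.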

Please help