• Hardware Platform (Jetson) • DeepStream Version 6.1 • JetPack Version 5.0.2 GA • TensorRT Version 5.0.2 • Issue Type(questions)

Can different operations be performed on multiple input sources in the test3 project, and if so, how can the inference position of a particular source be distinguished?

Below is an example

    diff --git a/sources/apps/sample_apps/deepstream-test3/dstest3_config.yml b/sources/apps/sample_apps/deepstream-test3/dstest3_config.yml
    index 159daec..2270cca 100755
    --- a/sources/apps/sample_apps/deepstream-test3/dstest3_config.yml
    +++ b/sources/apps/sample_apps/deepstream-test3/dstest3_config.yml
    @@ -22,10 +22,10 @@
     source-list:
       #semicolon separated uri. For ex- uri1;uri2;uriN;
    -  list: file:///opt/nvidia/deepstream/deepstream/samples/streams/sample_1080p_h264.mp4;
    +  list: file:///opt/nvidia/deepstream/deepstream/samples/streams/sample_1080p_h264.mp4;file:///opt/nvidia/deepstream/deepstream/samples/streams/sample_1080p_h264.mp4;file:///opt/nvidia/deepstream/deepstream/samples/streams/sample_1080p_h264.mp4;file:///opt/nvidia/deepstream/deepstream/samples/streams/sample_1080p_h264.mp4;
     streammux:
    -  batch-size: 1
    +  batch-size: 4
       batched-push-timeout: 40000
       width: 1920
       height: 1080
    diff --git a/sources/apps/sample_apps/deepstream-test3/dstest3_pgie_config.yml b/sources/apps/sample_apps/deepstream-test3/dstest3_pgie_config.yml
    index 2c46456..c716573 100755
    --- a/sources/apps/sample_apps/deepstream-test3/dstest3_pgie_config.yml
    +++ b/sources/apps/sample_apps/deepstream-test3/dstest3_pgie_config.yml
    @@ -62,7 +62,7 @@ property:
       net-scale-factor: 0.00392156862745098
       tlt-model-key: tlt_encode
       tlt-encoded-model: ../../../../samples/models/Primary_Detector/resnet18_trafficcamnet.etlt
    -  model-engine-file: ../../../../samples/models/Primary_Detector/resnet18_trafficcamnet.etlt_b1_gpu0_int8.engine
    +  model-engine-file: ../../../../samples/models/Primary_Detector/resnet18_trafficcamnet.etlt_b4_gpu0_int8.engine
       labelfile-path: ../../../../samples/models/Primary_Detector/labels.txt
       int8-calib-file: ../../../../samples/models/Primary_Detector/cal_trt.bin
       force-implicit-batch-dim: 1

To tell the sources apart, read the source_id member of frame_meta:

    for (l_frame = batch_meta->frame_meta_list; l_frame != NULL;
         l_frame = l_frame->next) {
      NvDsFrameMeta *frame_meta = (NvDsFrameMeta *) (l_frame->data);
      /* source_id identifies the input source this frame came from */
      guint source_id = frame_meta->source_id;
    }
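To perform a different operation per source, one option is to branch on source_id inside that loop. The sketch below is deliberately self-contained so it compiles without the DeepStream SDK: DemoFrameMeta and DemoNode are stand-ins for NvDsFrameMeta and the list nodes of batch_meta->frame_meta_list, and the action names and the source-to-action mapping are hypothetical, chosen only for illustration.

```c
#include <assert.h>
#include <stddef.h>

/* Stand-in for NvDsFrameMeta: only the fields this sketch needs. */
typedef struct {
  unsigned int source_id; /* which input source the frame came from */
  int frame_num;
} DemoFrameMeta;

/* Stand-in for the list nodes of batch_meta->frame_meta_list. */
typedef struct DemoNode {
  void *data;
  struct DemoNode *next;
} DemoNode;

#define DEMO_MAX_SOURCES 4

/* Hypothetical per-source operations. */
typedef enum { ACTION_COUNT_ONLY, ACTION_DRAW_OSD, ACTION_SKIP } DemoAction;

/* Hypothetical mapping: pick an operation from the source index. */
static DemoAction action_for_source(unsigned int source_id) {
  switch (source_id) {
    case 0: return ACTION_DRAW_OSD;
    case 1: return ACTION_SKIP;
    default: return ACTION_COUNT_ONLY;
  }
}

/* Walk one batch, tally frames per source, and return how many
 * frames were not skipped. */
static int process_batch(const DemoNode *frame_list,
                         int frames_per_source[DEMO_MAX_SOURCES]) {
  int handled = 0;
  for (const DemoNode *l = frame_list; l != NULL; l = l->next) {
    const DemoFrameMeta *fm = (const DemoFrameMeta *) l->data;
    assert(fm->source_id < DEMO_MAX_SOURCES);
    frames_per_source[fm->source_id]++;
    if (action_for_source(fm->source_id) != ACTION_SKIP)
      handled++;
  }
  return handled;
}
```

In the real probe, the stand-ins would be replaced by NvDsFrameMeta and l_frame, and the per-source logic would go where the action is selected.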

Thank you for your answer, it’s very helpful!

1. batch-size is the number of video frames processed at one time. Whether you need to change it depends on your use case.

2. If you want to use a self-trained ONNX model in nvinfer, refer to the nvinfer plugin documentation: https://docs.nvidia.com/metropolis/deepstream/6.3/dev-guide/text/DS_plugin_gst-nvinfer.html

I don't have resnet18_trafficcamnet.etlt_b4_gpu0_int8.engine. How do I generate this file? Or where can I change batch-size to 4 so that this file is created?

There is no need to generate it yourself; the nvinfer plugin generates it automatically.

For the required changes, please refer to my deepstream-test3 patch above.
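The two model-engine-file values in the patch differ only in the batch field (b1 versus b4). Below is a minimal sketch of that naming pattern, assuming the form `<model>_b<batch>_gpu<id>_<precision>.engine` as seen in those two names. engine_file_name is a hypothetical helper, not a DeepStream API.

```c
#include <assert.h>
#include <stddef.h>
#include <stdio.h>

/* Hypothetical helper: writes
 *   "<model_path>_b<batch>_gpu<gpu_id>_<precision>.engine"
 * into out. Returns 0 on success, -1 if the buffer is too small. */
static int engine_file_name(char *out, size_t out_len, const char *model_path,
                            unsigned int batch_size, unsigned int gpu_id,
                            const char *precision) {
  assert(out != NULL && model_path != NULL && precision != NULL);
  int n = snprintf(out, out_len, "%s_b%u_gpu%u_%s.engine",
                   model_path, batch_size, gpu_id, precision);
  return (n < 0 || (size_t) n >= out_len) ? -1 : 0;
}
```

Called with "resnet18_trafficcamnet.etlt", batch size 4, GPU 0 and "int8", this yields the file name used in the patch.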

OK. Thanks for your help!

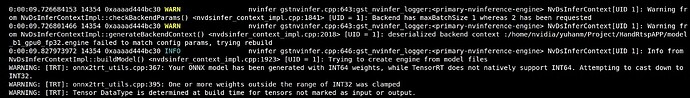

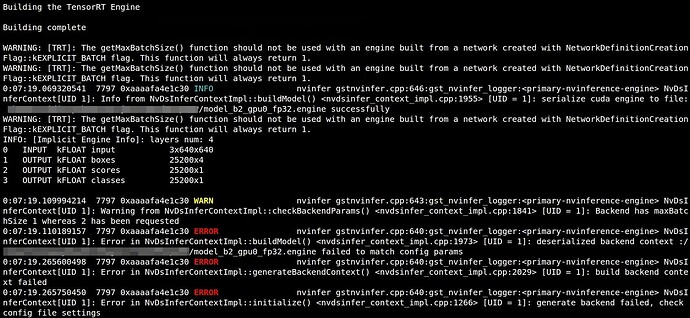

When I used a self-trained YOLO model to perform similar operations, I encountered the following errors.

I used nvdsinfer_custom_impl_Yolo to generate the model engine file. What has gone wrong?

Did you copy the engine file from somewhere else?

This error indicates that the batch size parameter of the generated engine file is incorrect.

Delete the engine file and run it again.
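As a quick sanity check before deleting anything, you can compare the batch size encoded in the engine file name with the batch-size you configured. This is only a sketch: it assumes the `_b<N>_` naming seen earlier in this thread, both helper names are hypothetical, and the file name is just a hint, since the engine itself is what actually fixes the batch size.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical helper: returns the batch size encoded as "_b<N>_" in an
 * engine file name, or -1 if no such field is found. */
static long batch_from_engine_name(const char *name) {
  assert(name != NULL);
  const char *p = name;
  while ((p = strstr(p, "_b")) != NULL) {
    char *end = NULL;
    long n = strtol(p + 2, &end, 10);
    /* Accept only "_b" followed by digits and then another '_'. */
    if (end != p + 2 && *end == '_' && n > 0)
      return n;
    p += 2; /* keep searching after this "_b" */
  }
  return -1;
}

/* Nonzero when the name does not match the configured batch size. */
static int engine_is_stale(const char *engine_name, long configured_batch) {
  return batch_from_engine_name(engine_name) != configured_batch;
}
```

If engine_is_stale reports a mismatch, removing the file (for example with remove() from stdio.h) and rerunning the app lets the engine be rebuilt.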

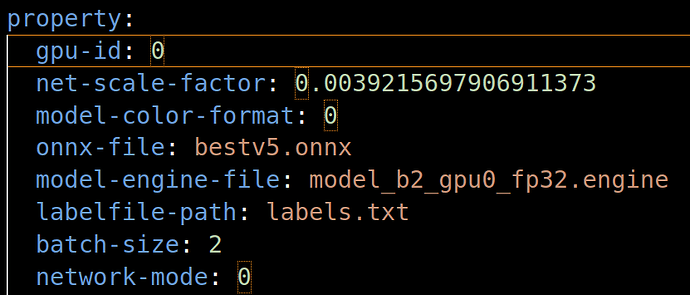

No. I just modified the parameters in the dstest3_pgie_config.yml file like this.

And the engine is automatically generated, as shown in the first two lines of the previous image.

Which version of yolo is your model based on? There should be some problems with the parameters in your configuration file.

You can refer to the yolo configuration items in this project

GitHub - marcoslucianops/DeepStream-Yolo: NVIDIA DeepStream SDK 6.3 / 6.2 / 6.1.1 / 6.1 / 6.0.1 / 6.0 / 5.1 implementation for YOLO models

Ultralytics YOLOv5. Can I use this model to run the test3 project?

Yes, of course, follow the steps of the link I posted above and you can see the results.

It is no different from test3

Thank you for your help. That’s helpful!

Use this example to remove the source of the problem. You can open a new topic to discuss this issue

system

February 15, 2024, 2:46am

This topic was automatically closed 14 days after the last reply. New replies are no longer allowed.