Description

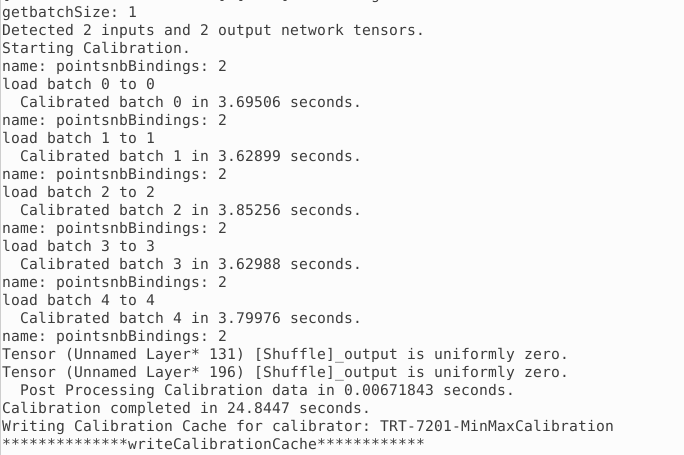

I converted a PointPillars ONNX model into a TensorRT engine.

We have now generated a calibration table, but it does not seem to be correct, because INT8 inference performs badly (the AP values are extremely low). We have tried all of the calibration APIs that TensorRT supports, but the results are still bad. Perhaps the generated calibration table is not optimal.

- How should the calibration dataset be selected? Which calibrator (IInt8EntropyCalibrator or …) is suitable for an object detection network?

- While calibrating, there are warnings like: "Tensor (Unnamed Layer* 131) [Shuffle]_output is uniformly zero." Will this affect the AP accuracy?

Running inference, the outputs are wrong:

AP results on KITTI:

Environment

TensorRT Version: 7.2

GPU Type: RTX3070

Nvidia Driver Version: 11.4

CUDA Version: 11.1

CUDNN Version: 8.0.5

Operating System + Version: Ubuntu 20.04

Python Version (if applicable): 3.8

TensorFlow Version (if applicable):

PyTorch Version (if applicable):

Baremetal or Container (if container which image + tag):

Relevant Files

Calibrator table:

calibrator.calib.backup (4.2 KB)
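
In case it helps anyone looking at the attached table, here is a minimal sketch for decoding it into per-tensor scales. It assumes the usual plain-text cache layout (a header line, then `tensor name: hex` entries where the hex digits are the bits of a big-endian float32 scale), and it assumes that a tensor reported as "uniformly zero" may appear with a scale of 0. The helper names and the sample text are made up for illustration; they are not taken from my actual file.

```python
import struct


def parse_calibration_cache(text):
    """Return (header, {tensor_name: scale}) from calibration cache text.

    Assumption: each entry is "name: hex", with hex holding the bits of a
    big-endian IEEE 754 float32 scale.
    """
    lines = [ln.strip() for ln in text.splitlines() if ln.strip()]
    header = lines[0]
    scales = {}
    for line in lines[1:]:
        # Split on the LAST colon, since tensor names may contain colons.
        name, sep, hex_value = line.rpartition(":")
        if not sep:
            continue  # not a "name: value" entry, skip it
        # Pad short values such as "0" to 8 hex digits before decoding.
        raw = bytes.fromhex(hex_value.strip().zfill(8))
        scales[name.strip()] = struct.unpack("!f", raw)[0]
    return header, scales


def zero_scale_tensors(scales):
    """List tensors whose decoded scale is exactly zero."""
    return [name for name, scale in scales.items() if scale == 0.0]


# Hypothetical sample, not the real attachment.
sample = """TRT-7200-EntropyCalibration2
input: 3c000000
(Unnamed Layer* 131) [Shuffle]_output: 0
"""

header, scales = parse_calibration_cache(sample)
for name, scale in scales.items():
    # Under the stated assumption, the INT8 dynamic range is scale * 127.
    print(f"{name}: scale={scale:.6g}, range=+/-{scale * 127:.6g}")
print("zero-scale tensors:", zero_scale_tensors(scales))
```

Comparing the decoded ranges against the activation ranges seen in FP32 inference might show which layers are being clipped or zeroed, but I have not confirmed that this explains the low AP.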