Based on the Jetson Orin NX, we connect two channels of GL3008 + IMX415. After recording, the streams should be saved as video files, and it should be possible to read those files back later and parse them with frame synchronization so that the algorithm can perform video stitching. This can be either two video files synchronized by timestamps, or a single video file together with a method to split it back into two channels of video.

How should this be implemented? Could you provide me with a demo?

First, the hardware needs to be designed with a sync signal, and you should check with Sony how to make the sensors output synchronized frames.

Then refer to the MMAPI samples syncSensor/syncStereo to capture both sensors in a single session.

For stitching, you may want to check OpenCV.

Hi ShaneCCC,

Since the camera sensors do not support hardware synchronization, we now intend to save the two video streams as two files, and adopt a solution that uses kernel timestamps on the software side and discards frames with a large time difference to achieve dual-sensor synchronization.

How should we do this? Could you provide a demo?

Check the syncStereo sample for timestamp checking.
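As a rough illustration of the "discard frames with a large time difference" step, here is a minimal C sketch that pairs two per-camera timestamp lists. It assumes each camera delivers ascending timestamps in nanoseconds taken from the same clock (for example, the sensor timestamps syncStereo reads, or V4L2 buffer timestamps). `pair_frames`, `frame_pair` and `max_diff_ns` are hypothetical names, not part of MMAPI. A frame is skipped when the other stream's next frame would be a closer match, or when no partner lies within `max_diff_ns`.

```c
#include <stddef.h>
#include <stdint.h>

/* One matched pair: indices into the left and right timestamp arrays. */
typedef struct {
    size_t left;
    size_t right;
} frame_pair;

static uint64_t absdiff(uint64_t a, uint64_t b)
{
    return a > b ? a - b : b - a;
}

/*
 * Pair frames from two free-running cameras by timestamp.
 * ts_l / ts_r: per-frame capture timestamps (ns), ascending, same clock.
 * max_diff_ns: largest accepted |ts_l - ts_r|; frames that cannot be
 * matched inside this window are dropped.
 * Returns the number of pairs written to out (at most out_cap).
 */
size_t pair_frames(const uint64_t *ts_l, size_t n_l,
                   const uint64_t *ts_r, size_t n_r,
                   uint64_t max_diff_ns,
                   frame_pair *out, size_t out_cap)
{
    size_t i = 0, j = 0, n = 0;
    while (i < n_l && j < n_r && n < out_cap) {
        uint64_t a = ts_l[i], b = ts_r[j];
        /* Skip the older frame if its successor is a closer match. */
        if (a <= b && i + 1 < n_l && absdiff(ts_l[i + 1], b) < absdiff(a, b)) {
            i++;
            continue;
        }
        if (b < a && j + 1 < n_r && absdiff(ts_r[j + 1], a) < absdiff(a, b)) {
            j++;
            continue;
        }
        if (absdiff(a, b) <= max_diff_ns) {
            out[n].left = i++;           /* accept the pair */
            out[n].right = j++;
            n++;
        } else if (a < b) {
            i++;                         /* left frame has no partner: drop */
        } else {
            j++;                         /* right frame has no partner: drop */
        }
    }
    return n;
}
```

For example, with timestamps {0, 33, 66, 100} and {1, 40, 67, 200} (written in ms for readability) and a 2 ms window, only the pairs (0, 1) and (66, 67) survive; the other frames are discarded.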

Hi ShaneCCC,

After setting up the environment and running the generated executable, I can't find any recorded videos in the directory.

Could it be that I made a mistake somewhere?

You need to modify the code to remove the EEPROM check before it will run.

Also, this sample only shows a preview; it doesn't generate any encoded file.

Hi ShaneCCC,

Okay, thank you for replying to my request for help.

I will modify the code to remove the EEPROM check so that it runs, and I can add code to save the dual-channel video files.

But does this demo include timestamps? We will need them for subsequent functions. If it does, how can I obtain and process the timestamp information for the dual-channel video files in code?

If you check the syncStereo sample, you should see how to get the timestamp.
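Raw H.264/H.265 elementary streams written by an encoder generally do not carry the capture timestamp of each frame, so one simple option is to write a small sidecar file next to each video while recording. The sketch below assumes a hypothetical text format with one `<frame_index> <timestamp_ns>` line per encoded frame (e.g. `cam0.h264` + `cam0.ts`), and assumes every captured frame is encoded and decoded back in capture order so that line i matches frame i. The timestamp itself would come from the capture path, for instance Argus capture metadata such as `ICaptureMetadata::getSensorTimestamp()` (check syncStereo for the exact call it uses). `ts_log_append` and `ts_log_load` are illustrative names.

```c
#include <inttypes.h>
#include <stdint.h>
#include <stdio.h>

/*
 * Hypothetical sidecar format: one line per encoded frame,
 * "<frame_index> <timestamp_ns>\n", stored next to each video file.
 * Returns 0 on success, -1 on write error.
 */
int ts_log_append(FILE *f, uint64_t frame_index, uint64_t ts_ns)
{
    return fprintf(f, "%" PRIu64 " %" PRIu64 "\n", frame_index, ts_ns) < 0 ? -1 : 0;
}

/*
 * Read timestamps back in frame order into ts_ns (capacity cap).
 * Stops at the first gap in frame indices so timestamps never misalign
 * with video frames. Returns how many timestamps were loaded.
 */
size_t ts_log_load(FILE *f, uint64_t *ts_ns, size_t cap)
{
    uint64_t idx, ts;
    size_t n = 0;
    while (n < cap && fscanf(f, "%" SCNu64 " %" SCNu64, &idx, &ts) == 2) {
        if (idx != n)
            break;
        ts_ns[n++] = ts;
    }
    return n;
}
```

On playback, load both sidecar files, pair the frames by timestamp, and pass only the paired frame indices on to the stitching algorithm.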

Hi ShaneCCC,

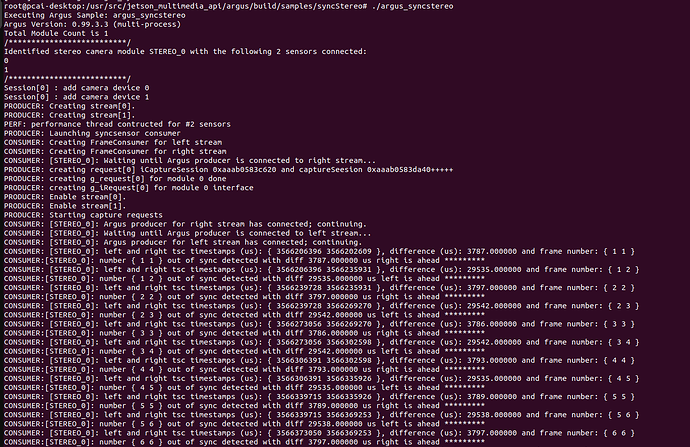

I have removed the EEPROM-related code from the program (and haven’t yet added the video-saving functionality), so this use case can run successfully.

However, I cannot see the actual camera preview, and the two video streams always seem to have a time difference of 11 to 22 ms (we want an error within one millisecond). Could you please give some suggestions?

This is my source file:

main.c.txt (28.9 KB)

relevant log files:

dmesg-trace.txt (2.9 MB)
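A reasoning step that helps interpret the 11 to 22 ms result: when the two sensors free-run without a shared sync signal, dropping frames can only select the nearest frame, so the residual offset is set by the sensors' phase difference. Assuming both sensors run at the same frame period $T$ and the second sensor lags by $\varphi$ with $0 \le \varphi < T$, the frame timestamps are $t_L(k) = t_0 + kT$ and $t_R(m) = t_0 + \varphi + mT$, and the best achievable pairing error is

$$
\min_{m \in \mathbb{Z}} \lvert t_L(k) - t_R(m) \rvert = \min_{n \in \mathbb{Z}} \lvert nT - \varphi \rvert = \min(\varphi,\; T - \varphi) \le \frac{T}{2}.
$$

At an assumed 30 fps, $T \approx 33.3$ ms, so the nearest-frame error can be anything up to about 16.7 ms depending on when each sensor started streaming, and it drifts over time if the two sensor clocks differ slightly. Software frame dropping alone therefore cannot guarantee an error below 1 ms; that requires aligning the sensors' phase, which is what the hardware sync signal mentioned at the start of the thread is for. With equal frame periods, a measured offset larger than $T/2$ would also indicate that frames are not being matched to their nearest neighbour.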

It looks like this sample doesn't display the image; it only checks the time difference.

Hi ShaneCCC,

Then, is there a sample demo for what I need? (We now intend to save the two video streams as two files, and use software-side timestamps to discard frames with a large time difference to achieve dual-sensor synchronization.)

I believe MMAPI has a sample that saves to a file. You can integrate it into syncStereo to save the streams to files.
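If the single-file option from the original question is preferred over two files, one approach is a simple interleaved record file: each encoded frame is written with a small header holding the camera ID, payload size and capture timestamp, and a reader splits the file back into two streams. The sketch below is a hypothetical format (`rec_header`, `rec_write`, `rec_read` and `demux` are illustrative names, not MMAPI APIs). A standard container with two video tracks, such as Matroska, is an alternative if the file must open in ordinary players.

```c
#include <stdint.h>
#include <stdio.h>

/*
 * Hypothetical record header, followed by `size` payload bytes.
 * Written in host byte order: fine when the same Jetson reads the file
 * back; define an explicit byte order if the file must be portable.
 */
typedef struct {
    uint32_t stream_id;   /* 0 = first camera, 1 = second camera */
    uint32_t size;        /* payload bytes following this header */
    uint64_t ts_ns;       /* capture timestamp in nanoseconds */
} rec_header;

/* Append one encoded frame. Returns 0 on success, -1 on write error. */
int rec_write(FILE *f, uint32_t stream_id, uint64_t ts_ns,
              const void *data, uint32_t size)
{
    rec_header h = { stream_id, size, ts_ns };
    if (fwrite(&h, sizeof h, 1, f) != 1) return -1;
    if (size && fwrite(data, size, 1, f) != 1) return -1;
    return 0;
}

/* Read the next record into h/buf. Returns 1 on success, 0 at a clean
 * end of file, -1 on a read error or if the payload exceeds cap. */
int rec_read(FILE *f, rec_header *h, void *buf, uint32_t cap)
{
    if (fread(h, sizeof *h, 1, f) != 1) return feof(f) ? 0 : -1;
    if (h->size > cap) return -1;
    if (h->size && fread(buf, h->size, 1, f) != 1) return -1;
    return 1;
}

/* Split the combined file into two per-camera streams.
 * Returns the number of records copied, or -1 on error. */
long demux(FILE *in, FILE *out[2], uint8_t *buf, uint32_t cap)
{
    rec_header h;
    long n = 0;
    int r;
    while ((r = rec_read(in, &h, buf, cap)) == 1) {
        if (h.stream_id > 1) return -1;
        if (h.size && fwrite(buf, h.size, 1, out[h.stream_id]) != 1) return -1;
        n++;
    }
    return r == 0 ? n : -1;
}
```

In the capture loop, `rec_write` would be called for each encoder output buffer of either camera. Because `rec_read` returns every header, a reader can also collect the `ts_ns` values per stream and pair frames by timestamp before stitching.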

Hi ShaneCCC,

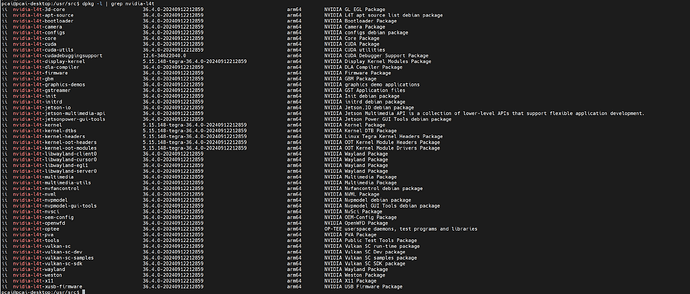

While using the API to implement the functionality I wanted, I updated the API package with the following command. This caused many library files to end up with inconsistent versions. Could you please tell me how to recover from this?

`sudo apt-get install --reinstall nvidia-l4t-jetson-multimedia-api`

I don't know how to recover the other packages, but you can get the MMAPI with the commands below.

`sudo apt list -a nvidia-l4t-jetson-multimedia-api`

`sudo apt install nvidia-l4t-jetson-multimedia-api=36.xxxx`

Hi ShaneCCC,

It seems that using this command can revert the environment to the previous version. I’m not sure, but I’ll try again.

And when using timestamps to achieve software synchronization of data frames, should we use MMAPI to stamp the captured image data with timestamps? Or should we store the timestamps in the data structure of the driver layer (such as imx415.c or nvidia platform driver source files) and parse the timestamps at the application layer?

In addition, my supervisor asked whether it is possible to handle timestamps through V4L2 (instead of the MMAPI provided by NVIDIA). Since I couldn't find the relevant source files in the directory, I have to ask you again.

Looking forward to your reply.

I believe vi5_fops.c stores the timestamp in the V4L2 buffer for the V4L2 use case. You can download the kernel source code to confirm it.

Hi ShaneCCC,

Assuming that the timestamps are stored by this file, how can I use them to perform frame synchronization?

vi5_fops.c stores the timestamp in the V4L2 buffer.

You should be able to get it from user space via the V4L2 API.

BTW, you can refer to the public v4l2-ctl source code.

Thanks

Hi ShaneCCC,

Where can I find the public sample program mentioned here?