I am trying to load the OCDNet model as an SGIE in DeepStream. The model loads, but DeepStream fails to parse the output. What is the output layer name (output-blob-names) for the pre-trained model from NGC? I set it to pred, but that does not work. Also, for parsing, will nvocdr's libnvocdr_impl.so work with OCDNet alone?

Actually, I was able to load OCDNet as a secondary engine, but I get no output from it and no error either. The parser is probably the cause.

Config for sgie0

[property]

gpu-id=0

net-scale-factor=0.0039215697906911373

model-color-format=0

#custom-network-config=yolo-obj-box-detection.cfg

#model-file=yolo-obj_best_box.weights

#onnx-file=yolov4_-1_3_608_608_dynamic.onnx

onnx-file=/home/sigmind/deepstream_sdk_v6.3.0_x86_64/opt/nvidia/deepstream/deepstream-6.3/samples/models/Secondary_VehicleTypes/ocdnet.onnx

#model-engine-file=model_b4_gpu0_fp32.engine

model-engine-file=/home/sigmind/deepstream_sdk_v6.3.0_x86_64/opt/nvidia/deepstream/deepstream-6.3/samples/models/Secondary_VehicleTypes/ocdnet.fp16.engine

#int8-calib-file=calib.table

labelfile-path=labels.txt

batch-size=1

network-mode=2

num-detected-classes=1

interval=0

gie-unique-id=1

process-mode=1

network-type=0

cluster-mode=2

maintain-aspect-ratio=0

symmetric-padding=1

force-implicit-batch-dim=0

#workspace-size=2000

parse-bbox-func-name=NvDsInferParseYolo

#parse-bbox-func-name=NvDsInferParseYoloCuda

custom-lib-path=/media/sigmind/URSTP_HDD1416/DeepStream-Yolo/nvdsinfer_custom_impl_Yolo/libnvdsinfer_custom_impl_Yolo.so

engine-create-func-name=NvDsInferYoloCudaEngineGet

tensor-meta=1

[class-attrs-all]

pre-cluster-threshold=0.2

topk=300

Different models require different post-processing code. The configuration file you provided will not work for OCDNet, because its parser only applies to the output of certain YOLO models.

For OCDNet, this is the sample code.

However, OCDNet usually has to work together with OCRNet. In addition, OCDNet is usually used as the PGIE.

Can you share your goal? I don’t understand your intention.

I know the YOLO parsing won’t work, but I could load the model successfully and am now working on the parsing. My goal is to detect boxes on a conveyor belt, then detect the text on those boxes, then OCR the text. I am thinking of a YOLO box detection model as the PGIE, OCDNet as sgie0, and OCRNet as sgie1.

Actually, I was able to generate the parser. It would be helpful if you could suggest any modifications needed for proper parsing.
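When debugging a cascade like this, it can help to keep the coordinate spaces apart: a detector running as a secondary engine sees each parent object scaled to the network input size, so the boxes its parser emits are in network coordinates. The sketch below shows that arithmetic, assuming a plain stretch (maintain-aspect-ratio=0, as in the config above). `RectF` and `networkToFrame` are hypothetical names for illustration only; nvinfer normally performs this mapping itself.

```cpp
#include <cassert>

// Hypothetical rectangle type for this sketch (not a DeepStream type).
struct RectF {
    float left;
    float top;
    float width;
    float height;
};

// Map a box expressed in network-input coordinates back to frame coordinates,
// assuming the parent object crop was stretched to netW x netH without padding.
RectF networkToFrame(const RectF& boxInNet, const RectF& parent,
                     float netW, float netH) {
    assert(netW > 0.0f && netH > 0.0f);
    const float sx = parent.width / netW;   // horizontal scale: crop pixels per network pixel
    const float sy = parent.height / netH;  // vertical scale
    return RectF{parent.left + boxInNet.left * sx,
                 parent.top + boxInNet.top * sy,
                 boxInNet.width * sx,
                 boxInNet.height * sy};
}
```

If text boxes show up at the wrong place or scale in the on-screen display, comparing them against this mapping is one way to check whether the parser is returning network-space coordinates as expected.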

#include "nvdsinfer_custom_impl.h"

#include <algorithm>

#include <iostream>

#include <vector>

#include <opencv2/opencv.hpp>

extern "C" bool NvDsInferParseYolo(

std::vector<NvDsInferLayerInfo> const& outputLayersInfo,

NvDsInferNetworkInfo const& networkInfo,

NvDsInferParseDetectionParams const& detectionParams,

std::vector<NvDsInferParseObjectInfo>& objectList);

static float contourScore(const cv::Mat& binary, const std::vector<cv::Point>& contour) {

cv::Rect rect = cv::boundingRect(contour);

int xmin = std::max(rect.x, 0);

int xmax = std::min(rect.x + rect.width, binary.cols - 1);

int ymin = std::max(rect.y, 0);

int ymax = std::min(rect.y + rect.height, binary.rows - 1);

cv::Mat binROI = binary(cv::Rect(xmin, ymin, xmax - xmin + 1, ymax - ymin + 1));

cv::Mat mask = cv::Mat::zeros(ymax - ymin + 1, xmax - xmin + 1, CV_8U);

std::vector<cv::Point> roiContour;

for (const auto& pt : contour) {

roiContour.emplace_back(cv::Point(pt.x - xmin, pt.y - ymin));

}

std::vector<std::vector<cv::Point>> roiContours = {roiContour};

cv::fillPoly(mask, roiContours, cv::Scalar(1));

return cv::mean(binROI, mask)[0];

}

static NvDsInferParseObjectInfo convertBBox(const cv::RotatedRect& box, const uint& netW, const uint& netH) {

NvDsInferParseObjectInfo b;

cv::Rect bbox = box.boundingRect();

// Intersect with the network rectangle so that both origin and size stay in bounds

// (an empty intersection yields width and height of 0, which the caller skips)

bbox &= cv::Rect(0, 0, (int)netW, (int)netH);

b.left = bbox.x;

b.top = bbox.y;

b.width = bbox.width;

b.height = bbox.height;

return b;

}

static std::vector<NvDsInferParseObjectInfo> decodeTensorYolo(

const float* output,

const uint& outputH, const uint& outputW,

const uint& netW, const uint& netH,

const std::vector<float>& preclusterThreshold)

{

std::vector<NvDsInferParseObjectInfo> binfo;

// Convert network output to OpenCV Mat

cv::Mat predMap(outputH, outputW, CV_32F, (void*)output);

// Threshold the prediction map

cv::Mat binary;

cv::threshold(predMap, binary, preclusterThreshold[0], 1.0, cv::THRESH_BINARY);

binary.convertTo(binary, CV_8U);

// Find contours

std::vector<std::vector<cv::Point>> contours;

cv::findContours(binary, contours, cv::RETR_LIST, cv::CHAIN_APPROX_SIMPLE);

// Process each contour

const float polygonThreshold = 0.3; // Same as default in OCDNetEngine

const int maxContours = 200; // Same as default in OCDNetEngine

size_t numCandidate = std::min(contours.size(), (size_t)maxContours);

for (size_t i = 0; i < numCandidate; i++) {

float score = contourScore(predMap, contours[i]);

if (score < polygonThreshold) {

continue;

}

// Get rotated rectangle

cv::RotatedRect box = cv::minAreaRect(contours[i]);

// Filter small boxes

float shortSide = std::min(box.size.width, box.size.height);

if (shortSide < 1) {

continue;

}

// Convert to NvDsInferParseObjectInfo

NvDsInferParseObjectInfo bbi = convertBBox(box, netW, netH);

// Skip invalid detections

if (bbi.width < 1 || bbi.height < 1) {

continue;

}

bbi.detectionConfidence = score;

bbi.classId = 0; // Single class for text detection

binfo.push_back(bbi);

}

return binfo;

}

static bool NvDsInferParseCustomYolo(

std::vector<NvDsInferLayerInfo> const& outputLayersInfo,

NvDsInferNetworkInfo const& networkInfo,

NvDsInferParseDetectionParams const& detectionParams,

std::vector<NvDsInferParseObjectInfo>& objectList)

{

if (outputLayersInfo.empty()) {

std::cerr << "ERROR: Could not find output layer in bbox parsing" << std::endl;

return false;

}

const NvDsInferLayerInfo& output = outputLayersInfo[0];

// Get output dimensions

const uint outputH = output.inferDims.d[1]; // Height

const uint outputW = output.inferDims.d[2]; // Width

std::vector<NvDsInferParseObjectInfo> objects = decodeTensorYolo(

(const float*)(output.buffer),

outputH, outputW,

networkInfo.width, networkInfo.height,

detectionParams.perClassPreclusterThreshold);

objectList = objects;

return true;

}

extern "C" bool NvDsInferParseYolo(

std::vector<NvDsInferLayerInfo> const& outputLayersInfo,

NvDsInferNetworkInfo const& networkInfo,

NvDsInferParseDetectionParams const& detectionParams,

std::vector<NvDsInferParseObjectInfo>& objectList)

{

return NvDsInferParseCustomYolo(

outputLayersInfo,

networkInfo,

detectionParams,

objectList);

}

CHECK_CUSTOM_PARSE_FUNC_PROTOTYPE(NvDsInferParseYolo);
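One modification that may be worth considering: the parser above reads the height and width from d[1] and d[2] unconditionally, which only holds if the output layer is laid out as 1 x H x W. A minimal sketch of a guard is shown below. `DimsSketch` is a stand-in that mirrors the numDims and d fields of the layer dimensions, and its capacity of 8 and the helper name `probabilityMapSize` are assumptions for illustration, not part of the DeepStream API.

```cpp
#include <cassert>

// Stand-in for the dimension fields used by the parser (assumption: the real
// struct exposes a dimension count and an array of extents in the same way).
constexpr unsigned int kMaxDims = 8;

struct DimsSketch {
    unsigned int numDims;
    unsigned int d[kMaxDims];
};

// Extract height and width of a single-channel probability map.
// Accepts either H x W or 1 x H x W; returns false for any other layout,
// so the caller can log an error instead of reading the wrong extents.
bool probabilityMapSize(const DimsSketch& dims,
                        unsigned int& height, unsigned int& width) {
    assert(dims.numDims <= kMaxDims);  // never index past the extents array
    if (dims.numDims == 2 && dims.d[0] > 0 && dims.d[1] > 0) {
        height = dims.d[0];
        width = dims.d[1];
        return true;
    }
    if (dims.numDims == 3 && dims.d[0] == 1 && dims.d[1] > 0 && dims.d[2] > 0) {
        height = dims.d[1];
        width = dims.d[2];
        return true;
    }
    return false;
}
```

In the parser, a failed check would be a natural place to print the actual dimensions and return false, which makes a silent "no output" case easier to diagnose.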

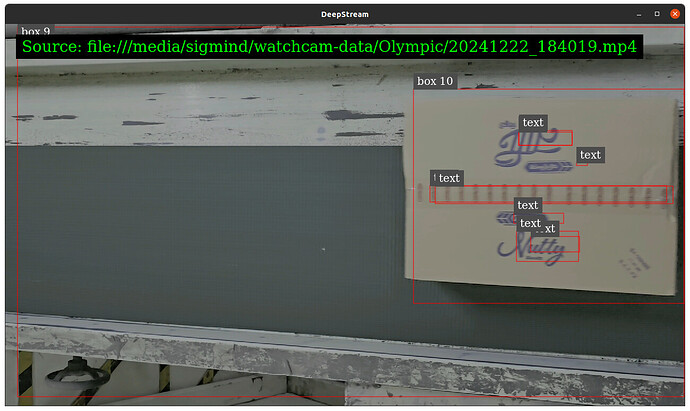

current output:

I understand your intention, but the pipeline I suggest is this

Yolo --> videotemplate

|

Add a probe function at the PGIE src pad, then crop the boxes to an image.

Refer to the implementation of deepstream-nvocdr-app

When cropping boxes, remove all padding
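The cropping step can be sketched roughly as follows. This reads "remove all padding" as "crop tightly to the detected box, clamped to the frame, with no extra margin", which is an assumption about the advice above. The `Box` and `GrayImage` types and the single-channel row-major buffer are hypothetical simplifications; a real probe would work on the frame surface from the pipeline instead.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical types for this sketch (not DeepStream types).
struct Box {
    int left;
    int top;
    int width;
    int height;
};

struct GrayImage {
    int width = 0;
    int height = 0;
    std::vector<std::uint8_t> pixels;  // row-major, one byte per pixel
};

// Clamp a box to the image bounds; a box fully outside gets zero size.
Box clampToImage(const Box& b, int imgW, int imgH) {
    const int x0 = std::max(b.left, 0);
    const int y0 = std::max(b.top, 0);
    const int x1 = std::min(b.left + b.width, imgW);
    const int y1 = std::min(b.top + b.height, imgH);
    return Box{x0, y0, std::max(x1 - x0, 0), std::max(y1 - y0, 0)};
}

// Copy exactly the pixels inside the clamped box, with no margin added.
GrayImage cropTight(const GrayImage& src, const Box& box) {
    assert(src.pixels.size() ==
           static_cast<std::size_t>(src.width) * static_cast<std::size_t>(src.height));
    const Box b = clampToImage(box, src.width, src.height);
    GrayImage out;
    if (b.width == 0 || b.height == 0) {
        return out;  // nothing to crop
    }
    out.width = b.width;
    out.height = b.height;
    out.pixels.reserve(static_cast<std::size_t>(b.width) * static_cast<std::size_t>(b.height));
    for (int y = 0; y < b.height; ++y) {
        const auto rowStart = src.pixels.begin() + (b.top + y) * src.width + b.left;
        out.pixels.insert(out.pixels.end(), rowStart, rowStart + b.width);
    }
    return out;
}
```

Clamping before copying keeps boxes that touch the frame edge from reading out of bounds, and returning an empty image for a box outside the frame lets the caller simply skip it.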

I implemented the pipeline as you suggested, and also the pipeline as I had planned. In both implementations, OCDNet is unable to capture vertical text.

The sample code can recognize vertical text. Can you share the test stream so that we can test it?

This problem is most likely just an accuracy issue. I tested with deepstream_nvocdr_app without adding YOLO as the PGIE, and it worked normally. Vertical text was also recognized correctly.

Actually I made a mistake. I am getting the result now. Thank you for the help.