I built a pipeline that is intended to run inference on multiple local files.

Here is a simple description of the pipeline:

The pipeline is composed of 4 parts. Each part is a Gst.Bin, and the number of each is as follows:

- source-bin: can be many (this pipeline demo has 2)

- preprocess-bin: can be many, same number as source-bin

- inference-bin: only one

- sink-bin: only one

Within the preprocess-bin, a tee element is used to output 2 sources as inputs for streammux.

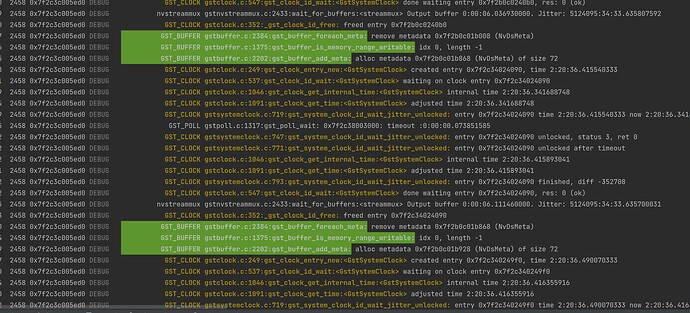
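To make the topology easier to discuss, here is a rough sketch of it as a `gst-launch-1.0` style description. It is not my actual code: the function name `build_launch_description`, the element choices (`uridecodebin`, `nvvideoconvert`, `fakesink`), the resolution, the config file name, and the `queue` on every tee branch are all assumptions for illustration. It also assumes the batch-size of both `nvstreammux` and `nvinfer` is set to the number of muxer inputs.

```python
def build_launch_description(uris, branches_per_source=2,
                             infer_config="config_infer.txt"):
    """Return a gst-launch-1.0 style description of the topology.

    Hypothetical sketch: element choices and property values are
    assumptions, not the pipeline from this post.
    """
    # Every tee branch becomes one input (sink pad) of the muxer.
    n_inputs = len(uris) * branches_per_source
    parts = [
        # inference-bin and sink-bin: exactly one of each, shared by all sources.
        f"nvstreammux name=mux batch-size={n_inputs} width=1280 height=720 "
        f"! nvinfer config-file-path={infer_config} batch-size={n_inputs} "
        "! fakesink sync=false"
    ]
    pad = 0
    for i, uri in enumerate(uris):
        # source-bin + preprocess-bin: decode, convert, then fan out via a tee.
        parts.append(f"uridecodebin uri={uri} ! nvvideoconvert ! tee name=t{i}")
        for _ in range(branches_per_source):
            # Assumption: a queue on each tee branch, so that one branch
            # does not block the others.
            parts.append(f"t{i}. ! queue ! mux.sink_{pad}")
            pad += 1
    return " ".join(parts)


if __name__ == "__main__":
    print(build_launch_description(["file:///tmp/a.mp4", "file:///tmp/b.mp4"]))
```

With 2 URIs and 2 branches per tee, this describes 4 muxer inputs (`mux.sink_0` to `mux.sink_3`). The resulting string could be passed to `gst-launch-1.0` or `Gst.parse_launch` for experimentation, but I have not verified that this sketch reproduces or avoids the deadlock.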

With the pipeline shown above, I encounter a deadlock when I add NvInfer after streammux. The pipeline never quits. The debug info shows that streammux is waiting for buffers and GST_CLOCK is waiting for an unlock? I’m new to GStreamer and DeepStream, so I don’t know where to go from here.

However, when I use only 1 source-bin and keep everything else unchanged, there is no deadlock and the pipeline runs fast.

It seems that if NvInfer’s batch-size is larger than 4, the pipeline runs slowly and the deadlock appears. I’m confused.

(I use PeopleNet model from NGC)

Lastly, these are my FPS tests for the different cases. I run my program in a Docker container: nvcr.io/nvidia/deepstream:6.0.1-devel

1. Use 2 source-bin and use NvInfer (FPS slow, DeadLock here)

2. Use 2 source-bin but without NvInfer (FPS fast, No DeadLock)

3. Use 1 source-bin and Use NvInfer (FPS still fast, No DeadLock)

• Hardware Platform (GPU): GPU 3080

• DeepStream Version: 6.0.1

• TensorRT Version

• NVIDIA GPU Driver Version (valid for GPU only) : 495.29.05

• Issue Type( questions, new requirements, bugs): questions