Hi zhaopeng_eng,

your understanding of the organization and the shared memory banks seems all correct to me. However, the issue you are seeing is caused by which threads are actually grouped together in a warp. For your block layout of TX=32 and TY=16 you end up with each warp executing one row block.

To print the thread indices, banks, and bank offsets for your code sample for the first warp of the first block, I changed your existing commented debug output to:

if (blockIdx.x == 0 && blockIdx.y == 0 && blockIdx.z == 0 && threadIdx.x < 32 && threadIdx.y == 0) {

const auto index = col_i * (TX + offset) + row_i;

printf("tx[%2d]: %3d bank:%2d offset:%d\n", threadIdx.x, index, index%32, index/32);

}

For the first variant of your code with offset = 0, the printed output is shown in the following:

tx[ 0]: 0 bank: 0 offset:0

tx[ 1]: 32 bank: 0 offset:1

tx[ 2]: 64 bank: 0 offset:2

tx[ 3]: 96 bank: 0 offset:3

tx[ 4]: 128 bank: 0 offset:4

tx[ 5]: 160 bank: 0 offset:5

tx[ 6]: 192 bank: 0 offset:6

tx[ 7]: 224 bank: 0 offset:7

tx[ 8]: 256 bank: 0 offset:8

tx[ 9]: 288 bank: 0 offset:9

tx[10]: 320 bank: 0 offset:10

tx[11]: 352 bank: 0 offset:11

tx[12]: 384 bank: 0 offset:12

tx[13]: 416 bank: 0 offset:13

tx[14]: 448 bank: 0 offset:14

tx[15]: 480 bank: 0 offset:15

tx[16]: 1 bank: 1 offset:0

tx[17]: 33 bank: 1 offset:1

tx[18]: 65 bank: 1 offset:2

tx[19]: 97 bank: 1 offset:3

tx[20]: 129 bank: 1 offset:4

tx[21]: 161 bank: 1 offset:5

tx[22]: 193 bank: 1 offset:6

tx[23]: 225 bank: 1 offset:7

tx[24]: 257 bank: 1 offset:8

tx[25]: 289 bank: 1 offset:9

tx[26]: 321 bank: 1 offset:10

tx[27]: 353 bank: 1 offset:11

tx[28]: 385 bank: 1 offset:12

tx[29]: 417 bank: 1 offset:13

tx[30]: 449 bank: 1 offset:14

tx[31]: 481 bank: 1 offset:15

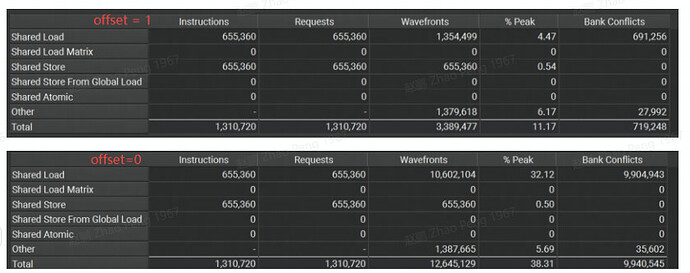

As you also stated in your comment, this pattern leads to a lot of bank conflicts. More specifically it is a 16-way bank conflict for each warp. We only access 2 banks and have to read 16 unique bank offsets from each of the two banks. If you look on the Source Page, the kernel executes a shared memory load for 655,360 warps. For a 16-way bank conflict that ends up being 16 * 655,360 = 10,485,760 L1 Wavefronts Shared. We classify 15 out of the 16 wavefronts excessive as an ideal memory pattern for this access would only take a single wavefront. Consequently, the metric L1 Wavefronts Shared Excessive ends up being 15 * 655,360 = 9,830,400.

Repeating the same analysis for your code with offset = 1, leads to the following output:

tx[ 0]: 0 bank: 0 offset:0

tx[ 1]: 33 bank: 1 offset:1

tx[ 2]: 66 bank: 2 offset:2

tx[ 3]: 99 bank: 3 offset:3

tx[ 4]: 132 bank: 4 offset:4

tx[ 5]: 165 bank: 5 offset:5

tx[ 6]: 198 bank: 6 offset:6

tx[ 7]: 231 bank: 7 offset:7

tx[ 8]: 264 bank: 8 offset:8

tx[ 9]: 297 bank: 9 offset:9

tx[10]: 330 bank:10 offset:10

tx[11]: 363 bank:11 offset:11

tx[12]: 396 bank:12 offset:12

tx[13]: 429 bank:13 offset:13

tx[14]: 462 bank:14 offset:14

tx[15]: 495 bank:15 offset:15

tx[16]: 1 bank: 1 offset:0

tx[17]: 34 bank: 2 offset:1

tx[18]: 67 bank: 3 offset:2

tx[19]: 100 bank: 4 offset:3

tx[20]: 133 bank: 5 offset:4

tx[21]: 166 bank: 6 offset:5

tx[22]: 199 bank: 7 offset:6

tx[23]: 232 bank: 8 offset:7

tx[24]: 265 bank: 9 offset:8

tx[25]: 298 bank:10 offset:9

tx[26]: 331 bank:11 offset:10

tx[27]: 364 bank:12 offset:11

tx[28]: 397 bank:13 offset:12

tx[29]: 430 bank:14 offset:13

tx[30]: 463 bank:15 offset:14

tx[31]: 496 bank:16 offset:15

From the output we can see that we now read from more banks, but we still have to read 2 unique values for most of the accessed banks. In other words, we now have a 2-way bank conflict for each warp. Looking at the number again on the Source Page for this access, we end up with 2 * 655,360 = 1,310,720 overall L1 Wavefronts Shared. One out of the two wavefronts is considered excessive, which leaves us with 655,360 L1 Wavefronts Shared Excessive. For comparison, this is the output from the report in this case:

I hope that helps to explain the numbers in the report and why you are seeing the reduction in wavefronts when switching between the variants. In order to get to zero bank conflicts for this sample, you need to further change the indexing calculation so that you access all 32 banks for the 32 threads of each warp.