Hello, I have a Jetson Nano. I want to run a deep learning inference program on it and analyze its memory accesses. The program runs under the Python 3 interpreter: it feeds an image into one of torch's built-in neural networks, such as resnet50, and outputs the inference result.

Question: I want to know how much data is transferred from DRAM to L2, from L2 to L1, and from L1 to the kernel while the program is running.

Here is my analysis process:

I plan to use nvprof to analyze the program.

Because the Jetson Nano is compute capability 5.3, collecting the memory accesses from DRAM to L2 is not supported, as shown in the figure below (reference: CUDA Toolkit v11.6.1).

However, according to the output of nvprof --query-metrics, as shown in the following figure:

I can collect gld_transactions. Converted to MB (gld_transactions * 4 / 1024 / 1024), does it represent the number of bytes the kernel reads from the L1 cache?

I can also collect l2_global_load_bytes. Converted to MB (l2_global_load_bytes / 1024 / 1024), does it represent the number of bytes L1 reads from L2?

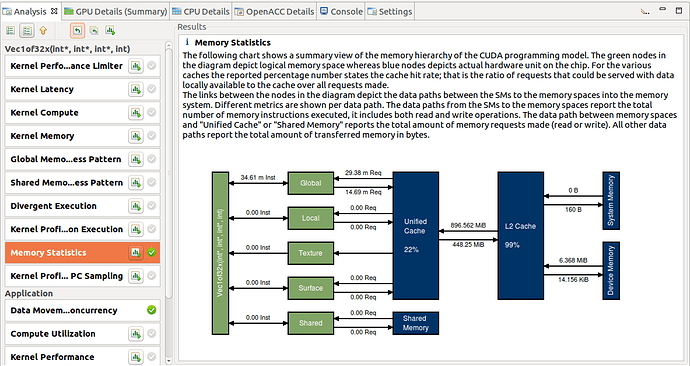
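For reference, here is a minimal sketch of the two conversions I am applying. The counter values are made-up placeholders, not measurements, and the helper names are my own. The 4 bytes per transaction is only my assumption (it is exactly what question (1) asks about), so I left it as a parameter.

```python
MIB = 1024 * 1024  # bytes per MB as used in my formulas (binary megabyte)


def transactions_to_mb(transactions, bytes_per_transaction=4):
    """Convert a transaction count to MB.

    bytes_per_transaction=4 is my assumption, not a documented value.
    """
    return transactions * bytes_per_transaction / MIB


def bytes_to_mb(num_bytes):
    """Convert a byte counter such as l2_global_load_bytes to MB."""
    return num_bytes / MIB


if __name__ == "__main__":
    # Placeholder values for illustration only.
    gld_transactions = 2_621_440
    l2_global_load_bytes = 10_485_760

    print("gld_transactions (MB):", transactions_to_mb(gld_transactions))
    print("l2_global_load_bytes (MB):", bytes_to_mb(l2_global_load_bytes))
```

If the real transaction size is different, only the bytes_per_transaction argument needs to change.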

Since nvprof's metrics cannot report how much data L2 reads from DRAM, I looked at the Visual Profiler tool described in the previous link, which can analyze the memory flow:

However, I found an article pointing out that the Jetson Nano cannot run Visual Profiler directly:

So when I run the program, I use the following command:

sudo /usr/local/cuda/bin/nvprof -o tf-resnet50.nvvp python3 resnet50-infer.py

This saves the analysis results to tf-resnet50.nvvp. Opening tf-resnet50.nvvp with the same version of Visual Profiler on a PC yielded the following results:

Does the total bytes value shown in the bottom right corner for memcpy (HtoD) represent the amount of data the L2 cache reads from DRAM? If so, which metric listed by nvprof --query-metrics would let me collect the value corresponding to memcpy (HtoD) on the Jetson Nano? I ask because Visual Profiler's data is collected by nvprof.

It can be summarized into three questions:

(1) Does gld_transactions, converted to MB (gld_transactions * 4 / 1024 / 1024), represent the number of bytes the kernel reads from the L1 cache?

(2) Does l2_global_load_bytes, converted to MB (l2_global_load_bytes / 1024 / 1024), represent the number of bytes L1 reads from L2?

(3) Does memcpy (HtoD) in Visual Profiler represent the amount of data the L2 cache reads from DRAM? If so, which metric listed by nvprof --query-metrics would let me collect the corresponding value on the Jetson Nano?

These questions have bothered me for a long time. I would be very grateful for any answers.