I used the YOLOv3-ONNX NVIDIA sample and changed it a bit for my network (YOLOv3-Tiny with a different number of classes). As a result, I got an .onnx model from my Darknet (.cfg + .weights) model.

If you don’t know what the YOLOv3-Tiny model is: it has two convolution outputs (13x13 and 26x26) with data in this format:

[x1|y1|width1|height1|box probability1|class1 prob1|class2 prob1|...|x2|...|x3|...]
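A minimal sketch of how this format relates to a flat output buffer. It assumes (this is not confirmed by the code linked in this post) that the engine writes each output in NCHW order, i.e. `[anchors * (5 + classes)][gridH][gridW]` with batch size 1, so the fields of one box are `gridH * gridW` floats apart rather than adjacent. The helper names `nchwIndex` and `toCellMajor` are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper: offset of one value in a flat NCHW buffer (batch size 1).
// Assumed layout: [anchors * (5 + numClasses)][gridH][gridW].
// field: 0 = x, 1 = y, 2 = width, 3 = height, 4 = box probability, 5+ = class probabilities.
inline std::size_t nchwIndex(std::size_t anchor, std::size_t field,
                             std::size_t row, std::size_t col,
                             std::size_t numClasses,
                             std::size_t gridH, std::size_t gridW)
{
    const std::size_t fieldsPerBox = 5 + numClasses;
    assert(field < fieldsPerBox && row < gridH && col < gridW);
    const std::size_t channel = anchor * fieldsPerBox + field;
    return (channel * gridH + row) * gridW + col;
}

// Hypothetical helper: copy an NCHW buffer into cell-major (HWC) order, so that
// all values belonging to one grid cell become contiguous, as in the format above.
inline std::vector<float> toCellMajor(const std::vector<float>& nchw,
                                      std::size_t channels,
                                      std::size_t gridH, std::size_t gridW)
{
    assert(nchw.size() == channels * gridH * gridW);
    std::vector<float> out(nchw.size());
    for (std::size_t c = 0; c < channels; ++c)
        for (std::size_t y = 0; y < gridH; ++y)
            for (std::size_t x = 0; x < gridW; ++x)
                out[(y * gridW + x) * channels + c] = nchw[(c * gridH + y) * gridW + x];
    return out;
}
```

If the buffer really is NCHW, reading it as if the fields were interleaved per box would mix values from different cells, so checking a few known positions with a helper like this is a cheap sanity test.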

With the Python script included in the sample, this model works perfectly fine. Objects are recognised as expected.

But when I tried to use this exact model in a C++ program (a reworked sampleOnnxMNIST), I got very different results.

Bounding boxes for some objects in C++ are about 2 times bigger.

Other objects get 2-3 small bounding boxes instead of one proper box.

Sometimes I can see both issues.

Here is an archive with 10 frames and the C++/Python bounding boxes as an example.

When I compared the network output right after exp/sigmoid (so the sigmoid values are in the [0, 1] interval), the plots of the results had a similar shape.

Similar but noticeably different: for example, the difference between sigmoid(x) values can be up to 0.25 (for values in the [0, 1] interval!).
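A comparison like this can be sketched as follows. It assumes both raw outputs (C++ and Python) have been dumped into equally sized float buffers in the same element order; `DiffStats` and `compareSigmoid` are hypothetical names, not part of any sample.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Largest absolute difference and the flat index where it occurs.
struct DiffStats {
    float maxAbsDiff;
    std::size_t argMax;
};

inline float sigmoid(float x) { return 1.0f / (1.0f + std::exp(-x)); }

// Applies sigmoid to both buffers element-wise and reports the worst mismatch.
inline DiffStats compareSigmoid(const std::vector<float>& a,
                                const std::vector<float>& b)
{
    assert(a.size() == b.size());
    DiffStats stats{0.0f, 0};
    for (std::size_t i = 0; i < a.size(); ++i) {
        const float d = std::fabs(sigmoid(a[i]) - sigmoid(b[i]));
        if (d > stats.maxAbsDiff) {
            stats.maxAbsDiff = d;
            stats.argMax = i;
        }
    }
    return stats;
}
```

Knowing the index of the worst mismatch, and not only its size, helps tell a numerical difference apart from values that are simply stored in a different position.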

Plots of sigmoid(x_output) as an example:

• C++ (red) and Python (blue):

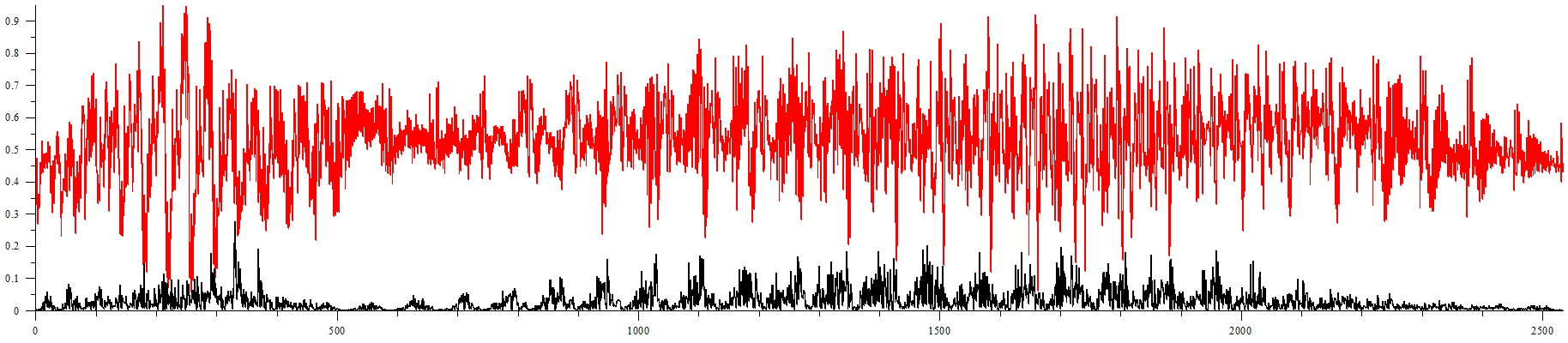

• red = average between C++ and Python; black = abs(difference):

When it comes to box probabilities, there are only ~3-10 values above 0.03, and they often have different indices.
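To make the index comparison concrete, here is a minimal sketch that lists the positions of all box probabilities above a threshold. The function name `indicesAbove` is hypothetical, and the input is assumed to be a buffer of box probabilities that already went through sigmoid.

```cpp
#include <cstddef>
#include <vector>

// Returns the flat indices of all scores strictly above the threshold.
inline std::vector<std::size_t> indicesAbove(const std::vector<float>& scores,
                                             float threshold)
{
    std::vector<std::size_t> hits;
    for (std::size_t i = 0; i < scores.size(); ++i) {
        if (scores[i] > threshold) {
            hits.push_back(i);
        }
    }
    return hits;
}
```

Running this on the C++ and Python box probabilities with the same threshold (0.03 here) gives two short index lists that can be compared directly.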

For the Python code you can look here (onnx_to_tensorrt.py and data_processing.py are pretty much it). I can’t find the original NVIDIA code on GitHub now, but this one has minimal changes.

My C++ code is here. The includes for it can be found here.

I'm not sure if I can share the model itself because it is probably protected by our company's NDA.

So the question is: what is the reason for this?

• Is it wrong indexing (or something else) in my C++ code?

• Or is there really that big a difference between engine outputs in C++ and Python?