So, we ran a couple more tests on my colleague’s RTX 4080, with variable and with fixed GPU and memory clock rates, on both Windows and Ubuntu. Below are the measured average test durations with their standard deviations, together with the nvidia-smi readings taken while the tests were running. For the fixed-rate runs, we locked the GPU and memory clocks using nvidia-smi --lock-gpu-clocks and nvidia-smi --lock-memory-clocks.
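
For anyone who wants to reproduce the fixed-rate runs, here is a minimal Python sketch that only assembles the nvidia-smi command lines for locking and resetting the clocks. The helper names and the clock values (2000 MHz and 9000 MHz) are placeholders I made up for illustration, not the values we actually used; the sketch does not execute anything, and running the resulting commands normally needs administrator/root rights.

```python
# Sketch: build nvidia-smi command lines for pinning and releasing clocks.
# The clock values passed in below are hypothetical placeholders.

def clock_lock_commands(gpu_mhz, mem_mhz, gpu_index=0):
    """Return argument lists that pin GPU and memory clocks to one value each."""
    base = ["nvidia-smi", "-i", str(gpu_index)]
    return [
        # min and max set to the same value pins the clock
        base + [f"--lock-gpu-clocks={gpu_mhz},{gpu_mhz}"],
        base + [f"--lock-memory-clocks={mem_mhz},{mem_mhz}"],
    ]

def clock_reset_commands(gpu_index=0):
    """Return argument lists that give clock control back to the driver."""
    base = ["nvidia-smi", "-i", str(gpu_index)]
    return [base + ["--reset-gpu-clocks"], base + ["--reset-memory-clocks"]]

# Print the commands instead of running them.
for cmd in clock_lock_commands(2000, 9000) + clock_reset_commands():
    print(" ".join(cmd))
```

The argument lists can be passed to subprocess.run as they are if you prefer to script the whole procedure.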

Variable rates, Windows

Measured test durations:

Avg. duration: 837.917 ms

Avg. deviation: 40.322 ms

(rel. avg. deviation: 4.81218 %)
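
The relative figure is just the deviation divided by the average duration. The following Python sketch shows that arithmetic. The function names are mine, and mean_and_average_deviation assumes the deviation is the mean absolute deviation from the mean; if the test program computes a standard deviation instead, that part would have to change.

```python
# Sketch of how the summary numbers relate to each other.
# Assumption: "average deviation" = mean absolute deviation from the mean.

def mean_and_average_deviation(samples_ms):
    """Return (mean, mean absolute deviation) of a list of durations in ms."""
    mean = sum(samples_ms) / len(samples_ms)
    avg_dev = sum(abs(s - mean) for s in samples_ms) / len(samples_ms)
    return mean, avg_dev

def relative_deviation_percent(mean_ms, deviation_ms):
    """Deviation expressed as a percentage of the mean."""
    return deviation_ms / mean_ms * 100.0

# Values reported for the variable-rate Windows run.
print(f"{relative_deviation_percent(837.917, 40.322):.3f} %")
```

With the rounded values from this post, the result agrees with the reported 4.81 % to within rounding.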

nvidia-smi output:

C:\Windows\System32>nvidia-smi --query-gpu=timestamp,name,pci.bus_id,driver_version,pstate,pcie.link.gen.max,pcie.link.gen.current,pcie.link.width.max,pcie.link.width.current,temperature.gpu,utilization.gpu,utilization.memory,memory.total,memory.free,memory.used,clocks_event_reasons.hw_thermal_slowdown,clocks_event_reasons.hw_power_brake_slowdown,clocks_event_reasons.sw_thermal_slowdown,power.draw.instant,power.limit,power.max_limit --format=csv -l 2

timestamp, name, pci.bus_id, driver_version, pstate, pcie.link.gen.max, pcie.link.gen.current, pcie.link.width.max, pcie.link.width.current, temperature.gpu, utilization.gpu [%], utilization.memory [%], memory.total [MiB], memory.free [MiB], memory.used [MiB], clocks_event_reasons.hw_thermal_slowdown, clocks_event_reasons.hw_power_brake_slowdown, clocks_event_reasons.sw_thermal_slowdown, power.draw.instant [W], power.limit [W], power.max_limit [W]

2025/12/10 18:47:59.020, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P8, 4, 4, 16, 16, 38, 6 %, 35 %, 16376 MiB, 14959 MiB, 1092 MiB, Not Active, Not Active, Not Active, 20.63 W, 320.00 W, 400.00 W

2025/12/10 18:48:01.035, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P0, 4, 4, 16, 16, 39, 1 %, 1 %, 16376 MiB, 14959 MiB, 1092 MiB, Not Active, Not Active, Not Active, 50.30 W, 320.00 W, 400.00 W

2025/12/10 18:48:03.049, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P3, 4, 4, 16, 16, 39, 2 %, 2 %, 16376 MiB, 14959 MiB, 1092 MiB, Not Active, Not Active, Not Active, 32.48 W, 320.00 W, 400.00 W

2025/12/10 18:48:05.063, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P5, 4, 2, 16, 16, 39, 17 %, 22 %, 16376 MiB, 14958 MiB, 1093 MiB, Not Active, Not Active, Not Active, 25.42 W, 320.00 W, 400.00 W

2025/12/10 18:48:07.078, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P8, 4, 1, 16, 16, 39, 4 %, 45 %, 16376 MiB, 14959 MiB, 1092 MiB, Not Active, Not Active, Not Active, 22.77 W, 320.00 W, 400.00 W

2025/12/10 18:48:09.095, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P8, 4, 1, 16, 16, 39, 4 %, 42 %, 16376 MiB, 14959 MiB, 1092 MiB, Not Active, Not Active, Not Active, 23.27 W, 320.00 W, 400.00 W

2025/12/10 18:48:11.108, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P8, 4, 1, 16, 16, 39, 8 %, 35 %, 16376 MiB, 14959 MiB, 1092 MiB, Not Active, Not Active, Not Active, 22.78 W, 320.00 W, 400.00 W

2025/12/10 18:48:13.113, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P8, 4, 1, 16, 16, 39, 5 %, 40 %, 16376 MiB, 14959 MiB, 1092 MiB, Not Active, Not Active, Not Active, 22.05 W, 320.00 W, 400.00 W

2025/12/10 18:48:15.117, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P8, 4, 1, 16, 16, 39, 5 %, 33 %, 16376 MiB, 14959 MiB, 1092 MiB, Not Active, Not Active, Not Active, 22.64 W, 320.00 W, 400.00 W

2025/12/10 18:48:17.119, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P8, 4, 1, 16, 16, 39, 6 %, 41 %, 16376 MiB, 14959 MiB, 1092 MiB, Not Active, Not Active, Not Active, 22.21 W, 320.00 W, 400.00 W

2025/12/10 18:48:19.122, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P8, 4, 1, 16, 16, 39, 7 %, 45 %, 16376 MiB, 14959 MiB, 1092 MiB, Not Active, Not Active, Not Active, 23.02 W, 320.00 W, 400.00 W

2025/12/10 18:48:21.128, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P8, 4, 1, 16, 16, 39, 4 %, 46 %, 16376 MiB, 14959 MiB, 1092 MiB, Not Active, Not Active, Not Active, 22.15 W, 320.00 W, 400.00 W

2025/12/10 18:48:23.141, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P8, 4, 1, 16, 16, 39, 6 %, 48 %, 16376 MiB, 14959 MiB, 1092 MiB, Not Active, Not Active, Not Active, 22.34 W, 320.00 W, 400.00 W

2025/12/10 18:48:25.144, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P8, 4, 1, 16, 16, 39, 4 %, 53 %, 16376 MiB, 14959 MiB, 1092 MiB, Not Active, Not Active, Not Active, 21.91 W, 320.00 W, 400.00 W

2025/12/10 18:48:27.148, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P8, 4, 1, 16, 16, 39, 4 %, 46 %, 16376 MiB, 14959 MiB, 1092 MiB, Not Active, Not Active, Not Active, 22.18 W, 320.00 W, 400.00 W

2025/12/10 18:48:29.151, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P8, 4, 1, 16, 16, 39, 6 %, 47 %, 16376 MiB, 14959 MiB, 1092 MiB, Not Active, Not Active, Not Active, 23.16 W, 320.00 W, 400.00 W

2025/12/10 18:48:31.153, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P8, 4, 1, 16, 16, 39, 9 %, 42 %, 16376 MiB, 14959 MiB, 1092 MiB, Not Active, Not Active, Not Active, 24.50 W, 320.00 W, 400.00 W

2025/12/10 18:48:33.167, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P8, 4, 1, 16, 16, 39, 8 %, 37 %, 16376 MiB, 14959 MiB, 1092 MiB, Not Active, Not Active, Not Active, 23.65 W, 320.00 W, 400.00 W

2025/12/10 18:48:35.182, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P8, 4, 1, 16, 16, 39, 4 %, 36 %, 16376 MiB, 14959 MiB, 1092 MiB, Not Active, Not Active, Not Active, 23.44 W, 320.00 W, 400.00 W

2025/12/10 18:48:37.185, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P8, 4, 1, 16, 16, 39, 6 %, 54 %, 16376 MiB, 14989 MiB, 1062 MiB, Not Active, Not Active, Not Active, 23.52 W, 320.00 W, 400.00 W

2025/12/10 18:48:39.190, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P8, 4, 1, 16, 16, 39, 8 %, 43 %, 16376 MiB, 14989 MiB, 1062 MiB, Not Active, Not Active, Not Active, 22.87 W, 320.00 W, 400.00 W

2025/12/10 18:48:41.194, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P8, 4, 1, 16, 16, 39, 4 %, 39 %, 16376 MiB, 14989 MiB, 1062 MiB, Not Active, Not Active, Not Active, 22.41 W, 320.00 W, 400.00 W

2025/12/10 18:48:43.209, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P2, 4, 4, 16, 16, 40, 1 %, 1 %, 16376 MiB, 14752 MiB, 1299 MiB, Not Active, Not Active, Not Active, 50.57 W, 320.00 W, 400.00 W

2025/12/10 18:48:45.222, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P2, 4, 4, 16, 16, 40, 0 %, 1 %, 16376 MiB, 14752 MiB, 1299 MiB, Not Active, Not Active, Not Active, 48.66 W, 320.00 W, 400.00 W

2025/12/10 18:48:47.700, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P2, 4, 4, 16, 16, 40, 0 %, 1 %, 16376 MiB, 14752 MiB, 1299 MiB, Not Active, Not Active, Not Active, 49.12 W, 320.00 W, 400.00 W

2025/12/10 18:48:50.071, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P5, 4, 2, 16, 16, 40, 4 %, 13 %, 16376 MiB, 14752 MiB, 1299 MiB, Not Active, Not Active, Not Active, 28.15 W, 320.00 W, 400.00 W

2025/12/10 18:48:52.194, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P5, 4, 2, 16, 16, 40, 43 %, 29 %, 16376 MiB, 14726 MiB, 1325 MiB, Not Active, Not Active, Not Active, 29.10 W, 320.00 W, 400.00 W

2025/12/10 18:48:54.309, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P8, 4, 1, 16, 16, 40, 4 %, 40 %, 16376 MiB, 14742 MiB, 1309 MiB, Not Active, Not Active, Not Active, 22.23 W, 320.00 W, 400.00 W

2025/12/10 18:48:56.417, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P8, 4, 1, 16, 16, 40, 2 %, 40 %, 16376 MiB, 14742 MiB, 1309 MiB, Not Active, Not Active, Not Active, 22.26 W, 320.00 W, 400.00 W

2025/12/10 18:48:58.509, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P8, 4, 1, 16, 16, 40, 5 %, 38 %, 16376 MiB, 14742 MiB, 1309 MiB, Not Active, Not Active, Not Active, 21.30 W, 320.00 W, 400.00 W

2025/12/10 18:49:00.622, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P8, 4, 1, 16, 16, 40, 4 %, 36 %, 16376 MiB, 14742 MiB, 1309 MiB, Not Active, Not Active, Not Active, 21.74 W, 320.00 W, 400.00 W

2025/12/10 18:49:02.725, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P8, 4, 1, 16, 16, 40, 4 %, 44 %, 16376 MiB, 14742 MiB, 1309 MiB, Not Active, Not Active, Not Active, 20.73 W, 320.00 W, 400.00 W

2025/12/10 18:49:04.828, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P8, 4, 1, 16, 16, 40, 4 %, 45 %, 16376 MiB, 14742 MiB, 1309 MiB, Not Active, Not Active, Not Active, 21.52 W, 320.00 W, 400.00 W

2025/12/10 18:49:06.937, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P8, 4, 1, 16, 16, 40, 4 %, 39 %, 16376 MiB, 14742 MiB, 1309 MiB, Not Active, Not Active, Not Active, 21.58 W, 320.00 W, 400.00 W

2025/12/10 18:49:09.043, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P8, 4, 1, 16, 16, 40, 2 %, 34 %, 16376 MiB, 14742 MiB, 1309 MiB, Not Active, Not Active, Not Active, 22.64 W, 320.00 W, 400.00 W

2025/12/10 18:49:11.149, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P8, 4, 1, 16, 16, 40, 5 %, 39 %, 16376 MiB, 14742 MiB, 1309 MiB, Not Active, Not Active, Not Active, 23.18 W, 320.00 W, 400.00 W

2025/12/10 18:49:13.254, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P8, 4, 1, 16, 16, 40, 9 %, 37 %, 16376 MiB, 14742 MiB, 1309 MiB, Not Active, Not Active, Not Active, 22.65 W, 320.00 W, 400.00 W

2025/12/10 18:49:15.359, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P8, 4, 1, 16, 16, 40, 5 %, 43 %, 16376 MiB, 14735 MiB, 1316 MiB, Not Active, Not Active, Not Active, 22.32 W, 320.00 W, 400.00 W

2025/12/10 18:49:17.468, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P8, 4, 1, 16, 16, 40, 5 %, 52 %, 16376 MiB, 14735 MiB, 1316 MiB, Not Active, Not Active, Not Active, 20.16 W, 320.00 W, 400.00 W

2025/12/10 18:49:19.619, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P8, 4, 1, 16, 16, 40, 7 %, 41 %, 16376 MiB, 14735 MiB, 1316 MiB, Not Active, Not Active, Not Active, 20.92 W, 320.00 W, 400.00 W
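
Since the logs are long, it may be easier to summarize them than to read them row by row. Below is a minimal Python sketch that parses nvidia-smi CSV output like the one above and counts how often each performance state and each current PCIe link generation occurs. The function names are my own, and the embedded sample contains only the header and three rows copied from the log above.

```python
import csv
from collections import Counter
from io import StringIO

# Header and three rows copied from the variable-rate Windows log.
SAMPLE = """\
timestamp, name, pci.bus_id, driver_version, pstate, pcie.link.gen.max, pcie.link.gen.current, pcie.link.width.max, pcie.link.width.current, temperature.gpu, utilization.gpu [%], utilization.memory [%], memory.total [MiB], memory.free [MiB], memory.used [MiB], clocks_event_reasons.hw_thermal_slowdown, clocks_event_reasons.hw_power_brake_slowdown, clocks_event_reasons.sw_thermal_slowdown, power.draw.instant [W], power.limit [W], power.max_limit [W]
2025/12/10 18:47:59.020, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P8, 4, 4, 16, 16, 38, 6 %, 35 %, 16376 MiB, 14959 MiB, 1092 MiB, Not Active, Not Active, Not Active, 20.63 W, 320.00 W, 400.00 W
2025/12/10 18:48:01.035, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P0, 4, 4, 16, 16, 39, 1 %, 1 %, 16376 MiB, 14959 MiB, 1092 MiB, Not Active, Not Active, Not Active, 50.30 W, 320.00 W, 400.00 W
2025/12/10 18:48:07.078, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P8, 4, 1, 16, 16, 39, 4 %, 45 %, 16376 MiB, 14959 MiB, 1092 MiB, Not Active, Not Active, Not Active, 22.77 W, 320.00 W, 400.00 W
"""

def parse_log(text):
    """Turn nvidia-smi CSV text into a list of dicts keyed by column name."""
    reader = csv.reader(StringIO(text), skipinitialspace=True)
    rows = [row for row in reader if row]  # skip blank lines
    header = [h.strip() for h in rows[0]]
    return [dict(zip(header, (v.strip() for v in row))) for row in rows[1:]]

def summarize(records):
    """Count pstates and PCIe generations, and average the power draw."""
    pstates = Counter(r["pstate"] for r in records)
    pcie_gens = Counter(r["pcie.link.gen.current"] for r in records)
    watts = [float(r["power.draw.instant [W]"].split()[0]) for r in records]
    return pstates, pcie_gens, sum(watts) / len(watts)

pstates, pcie_gens, avg_watts = summarize(parse_log(SAMPLE))
print("pstate counts:", dict(pstates))
print("PCIe gen counts:", dict(pcie_gens))
print(f"average power draw: {avg_watts:.2f} W")
```

Because the columns are looked up by header name, the same functions should also work on the full logs pasted in this post, blank lines included.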

Fixed rates, Windows

Measured test durations:

Avg. duration: 612.206 ms

Avg. deviation: 3.314 ms

(rel. avg. deviation: 0.541322 %)

nvidia-smi output:

C:\Windows\System32>nvidia-smi --query-gpu=timestamp,name,pci.bus_id,driver_version,pstate,pcie.link.gen.max,pcie.link.gen.current,pcie.link.width.max,pcie.link.width.current,temperature.gpu,utilization.gpu,utilization.memory,memory.total,memory.free,memory.used,clocks_event_reasons.hw_thermal_slowdown,clocks_event_reasons.hw_power_brake_slowdown,clocks_event_reasons.sw_thermal_slowdown,power.draw.instant,power.limit,power.max_limit --format=csv -l 2

timestamp, name, pci.bus_id, driver_version, pstate, pcie.link.gen.max, pcie.link.gen.current, pcie.link.width.max, pcie.link.width.current, temperature.gpu, utilization.gpu [%], utilization.memory [%], memory.total [MiB], memory.free [MiB], memory.used [MiB], clocks_event_reasons.hw_thermal_slowdown, clocks_event_reasons.hw_power_brake_slowdown, clocks_event_reasons.sw_thermal_slowdown, power.draw.instant [W], power.limit [W], power.max_limit [W]

2025/12/10 19:13:00.392, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P3, 4, 4, 16, 16, 51, 2 %, 2 %, 16376 MiB, 14582 MiB, 1469 MiB, Not Active, Not Active, Not Active, 55.84 W, 320.00 W, 400.00 W

2025/12/10 19:13:02.396, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P3, 4, 4, 16, 16, 51, 0 %, 1 %, 16376 MiB, 14582 MiB, 1469 MiB, Not Active, Not Active, Not Active, 54.74 W, 320.00 W, 400.00 W

2025/12/10 19:13:04.410, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P3, 4, 4, 16, 16, 51, 0 %, 2 %, 16376 MiB, 14582 MiB, 1469 MiB, Not Active, Not Active, Not Active, 55.38 W, 320.00 W, 400.00 W

2025/12/10 19:13:06.413, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P3, 4, 4, 16, 16, 51, 0 %, 2 %, 16376 MiB, 14582 MiB, 1469 MiB, Not Active, Not Active, Not Active, 55.80 W, 320.00 W, 400.00 W

2025/12/10 19:13:08.428, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P3, 4, 4, 16, 16, 51, 0 %, 2 %, 16376 MiB, 14582 MiB, 1469 MiB, Not Active, Not Active, Not Active, 54.63 W, 320.00 W, 400.00 W

2025/12/10 19:13:10.443, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P3, 4, 4, 16, 16, 51, 0 %, 2 %, 16376 MiB, 14344 MiB, 1707 MiB, Not Active, Not Active, Not Active, 55.55 W, 320.00 W, 400.00 W

2025/12/10 19:13:12.985, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P3, 4, 4, 16, 16, 51, 0 %, 2 %, 16376 MiB, 14344 MiB, 1707 MiB, Not Active, Not Active, Not Active, 55.61 W, 320.00 W, 400.00 W

2025/12/10 19:13:15.366, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P3, 4, 4, 16, 16, 52, 0 %, 2 %, 16376 MiB, 14344 MiB, 1707 MiB, Not Active, Not Active, Not Active, 55.38 W, 320.00 W, 400.00 W

2025/12/10 19:13:17.737, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P3, 4, 4, 16, 16, 52, 0 %, 2 %, 16376 MiB, 14344 MiB, 1707 MiB, Not Active, Not Active, Not Active, 54.53 W, 320.00 W, 400.00 W

2025/12/10 19:13:20.037, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P3, 4, 4, 16, 16, 55, 0 %, 2 %, 16376 MiB, 14340 MiB, 1711 MiB, Not Active, Not Active, Not Active, 85.93 W, 320.00 W, 400.00 W

2025/12/10 19:13:22.067, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P3, 4, 4, 16, 16, 52, 0 %, 2 %, 16376 MiB, 14340 MiB, 1711 MiB, Not Active, Not Active, Not Active, 58.86 W, 320.00 W, 400.00 W

2025/12/10 19:13:24.463, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P3, 4, 4, 16, 16, 52, 1 %, 2 %, 16376 MiB, 14335 MiB, 1716 MiB, Not Active, Not Active, Not Active, 55.40 W, 320.00 W, 400.00 W

2025/12/10 19:13:26.587, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P3, 4, 4, 16, 16, 52, 0 %, 2 %, 16376 MiB, 14337 MiB, 1714 MiB, Not Active, Not Active, Not Active, 55.33 W, 320.00 W, 400.00 W

2025/12/10 19:13:28.692, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P3, 4, 4, 16, 16, 52, 0 %, 2 %, 16376 MiB, 14337 MiB, 1714 MiB, Not Active, Not Active, Not Active, 54.74 W, 320.00 W, 400.00 W

2025/12/10 19:13:30.797, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P3, 4, 4, 16, 16, 52, 0 %, 2 %, 16376 MiB, 14337 MiB, 1714 MiB, Not Active, Not Active, Not Active, 54.99 W, 320.00 W, 400.00 W

2025/12/10 19:13:32.810, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P3, 4, 4, 16, 16, 52, 1 %, 3 %, 16376 MiB, 14337 MiB, 1714 MiB, Not Active, Not Active, Not Active, 54.97 W, 320.00 W, 400.00 W

2025/12/10 19:13:34.832, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P3, 4, 4, 16, 16, 52, 0 %, 2 %, 16376 MiB, 14337 MiB, 1714 MiB, Not Active, Not Active, Not Active, 55.06 W, 320.00 W, 400.00 W

2025/12/10 19:13:36.943, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P3, 4, 4, 16, 16, 52, 0 %, 2 %, 16376 MiB, 14337 MiB, 1714 MiB, Not Active, Not Active, Not Active, 55.05 W, 320.00 W, 400.00 W

2025/12/10 19:13:39.046, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P3, 4, 4, 16, 16, 52, 0 %, 2 %, 16376 MiB, 14337 MiB, 1714 MiB, Not Active, Not Active, Not Active, 54.90 W, 320.00 W, 400.00 W

2025/12/10 19:13:41.162, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P3, 4, 4, 16, 16, 52, 0 %, 2 %, 16376 MiB, 14337 MiB, 1714 MiB, Not Active, Not Active, Not Active, 55.14 W, 320.00 W, 400.00 W

2025/12/10 19:13:43.266, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P3, 4, 4, 16, 16, 52, 0 %, 2 %, 16376 MiB, 14337 MiB, 1714 MiB, Not Active, Not Active, Not Active, 55.49 W, 320.00 W, 400.00 W

2025/12/10 19:13:45.280, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P3, 4, 4, 16, 16, 52, 0 %, 2 %, 16376 MiB, 14337 MiB, 1714 MiB, Not Active, Not Active, Not Active, 55.28 W, 320.00 W, 400.00 W

2025/12/10 19:13:47.299, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P3, 4, 4, 16, 16, 52, 0 %, 3 %, 16376 MiB, 14337 MiB, 1714 MiB, Not Active, Not Active, Not Active, 55.50 W, 320.00 W, 400.00 W

2025/12/10 19:13:49.403, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P3, 4, 4, 16, 16, 52, 0 %, 2 %, 16376 MiB, 14337 MiB, 1714 MiB, Not Active, Not Active, Not Active, 55.22 W, 320.00 W, 400.00 W

2025/12/10 19:13:51.516, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P3, 4, 4, 16, 16, 52, 0 %, 3 %, 16376 MiB, 14337 MiB, 1714 MiB, Not Active, Not Active, Not Active, 55.62 W, 320.00 W, 400.00 W

2025/12/10 19:13:53.615, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P3, 4, 4, 16, 16, 52, 0 %, 2 %, 16376 MiB, 14337 MiB, 1714 MiB, Not Active, Not Active, Not Active, 55.21 W, 320.00 W, 400.00 W

2025/12/10 19:13:55.721, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P3, 4, 4, 16, 16, 54, 38 %, 12 %, 16376 MiB, 14337 MiB, 1714 MiB, Not Active, Not Active, Not Active, 90.66 W, 320.00 W, 400.00 W

2025/12/10 19:13:57.732, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P3, 4, 4, 16, 16, 52, 1 %, 2 %, 16376 MiB, 14337 MiB, 1714 MiB, Not Active, Not Active, Not Active, 55.42 W, 320.00 W, 400.00 W

2025/12/10 19:13:59.836, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P3, 4, 4, 16, 16, 52, 0 %, 2 %, 16376 MiB, 14337 MiB, 1714 MiB, Not Active, Not Active, Not Active, 54.94 W, 320.00 W, 400.00 W

2025/12/10 19:14:01.937, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P3, 4, 4, 16, 16, 52, 0 %, 2 %, 16376 MiB, 14337 MiB, 1714 MiB, Not Active, Not Active, Not Active, 55.19 W, 320.00 W, 400.00 W

2025/12/10 19:14:04.045, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P3, 4, 4, 16, 16, 52, 0 %, 2 %, 16376 MiB, 14337 MiB, 1714 MiB, Not Active, Not Active, Not Active, 55.06 W, 320.00 W, 400.00 W

2025/12/10 19:14:06.156, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P3, 4, 4, 16, 16, 52, 0 %, 2 %, 16376 MiB, 14337 MiB, 1714 MiB, Not Active, Not Active, Not Active, 55.58 W, 320.00 W, 400.00 W

2025/12/10 19:14:08.258, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 581.42, P3, 4, 4, 16, 16, 53, 2 %, 2 %, 16376 MiB, 14337 MiB, 1714 MiB, Not Active, Not Active, Not Active, 56.28 W, 320.00 W, 400.00 W

Variable rates, Ubuntu

Measured test durations:

Avg. duration: 1073.07 ms

Avg. deviation: 2.80199 ms

(rel. avg. deviation: 0.261119 %)

nvidia-smi output:

~$ nvidia-smi --query-gpu=timestamp,name,pci.bus_id,driver_version,pstate,pcie.link.gen.max,pcie.link.gen.current,pcie.link.width.max,pcie.link.width.current,temperature.gpu,utilization.gpu,utilization.memory,memory.total,memory.free,memory.used,clocks_event_reasons.hw_thermal_slowdown,clocks_event_reasons.hw_power_brake_slowdown,clocks_event_reasons.sw_thermal_slowdown,power.draw.instant,power.limit,power.max_limit --format=csv -l 2

timestamp, name, pci.bus_id, driver_version, pstate, pcie.link.gen.max, pcie.link.gen.current, pcie.link.width.max, pcie.link.width.current, temperature.gpu, utilization.gpu [%], utilization.memory [%], memory.total [MiB], memory.free [MiB], memory.used [MiB], clocks_event_reasons.hw_thermal_slowdown, clocks_event_reasons.hw_power_brake_slowdown, clocks_event_reasons.sw_thermal_slowdown, power.draw.instant [W], power.limit [W], power.max_limit [W]

2025/12/10 19:29:14.499, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P8, 3, 1, 16, 16, 45, 0 %, 18 %, 16376 MiB, 15388 MiB, 523 MiB, Not Active, Not Active, Not Active, 19.94 W, 320.00 W, 400.00 W

2025/12/10 19:29:16.513, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P8, 3, 1, 16, 16, 45, 0 %, 39 %, 16376 MiB, 15389 MiB, 522 MiB, Not Active, Not Active, Not Active, 17.36 W, 320.00 W, 400.00 W

2025/12/10 19:29:18.517, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P8, 3, 1, 16, 16, 46, 44 %, 17 %, 16376 MiB, 15377 MiB, 534 MiB, Not Active, Not Active, Not Active, 26.12 W, 320.00 W, 400.00 W

2025/12/10 19:29:20.518, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P8, 3, 1, 16, 16, 46, 11 %, 9 %, 16376 MiB, 15381 MiB, 530 MiB, Not Active, Not Active, Not Active, 25.25 W, 320.00 W, 400.00 W

2025/12/10 19:29:22.523, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P8, 3, 1, 16, 16, 46, 13 %, 13 %, 16376 MiB, 15381 MiB, 530 MiB, Not Active, Not Active, Not Active, 26.29 W, 320.00 W, 400.00 W

2025/12/10 19:29:24.528, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P8, 3, 1, 16, 16, 46, 11 %, 9 %, 16376 MiB, 15381 MiB, 530 MiB, Not Active, Not Active, Not Active, 24.69 W, 320.00 W, 400.00 W

2025/12/10 19:29:26.532, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P8, 3, 1, 16, 16, 46, 50 %, 30 %, 16376 MiB, 15380 MiB, 531 MiB, Not Active, Not Active, Not Active, 27.37 W, 320.00 W, 400.00 W

2025/12/10 19:29:28.538, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P2, 3, 3, 16, 16, 46, 0 %, 0 %, 16376 MiB, 15118 MiB, 793 MiB, Not Active, Not Active, Not Active, 47.98 W, 320.00 W, 400.00 W

2025/12/10 19:29:30.540, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P2, 3, 3, 16, 16, 47, 2 %, 0 %, 16376 MiB, 15119 MiB, 792 MiB, Not Active, Not Active, Not Active, 47.96 W, 320.00 W, 400.00 W

2025/12/10 19:29:32.544, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P2, 3, 3, 16, 16, 47, 1 %, 0 %, 16376 MiB, 15118 MiB, 793 MiB, Not Active, Not Active, Not Active, 47.95 W, 320.00 W, 400.00 W

2025/12/10 19:29:34.545, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P2, 3, 3, 16, 16, 47, 0 %, 0 %, 16376 MiB, 15118 MiB, 793 MiB, Not Active, Not Active, Not Active, 47.63 W, 320.00 W, 400.00 W

2025/12/10 19:29:36.547, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P2, 3, 3, 16, 16, 47, 0 %, 0 %, 16376 MiB, 15118 MiB, 793 MiB, Not Active, Not Active, Not Active, 47.35 W, 320.00 W, 400.00 W

2025/12/10 19:29:38.548, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P2, 3, 3, 16, 16, 47, 1 %, 0 %, 16376 MiB, 15117 MiB, 794 MiB, Not Active, Not Active, Not Active, 48.02 W, 320.00 W, 400.00 W

2025/12/10 19:29:40.552, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P2, 3, 3, 16, 16, 47, 0 %, 0 %, 16376 MiB, 15118 MiB, 793 MiB, Not Active, Not Active, Not Active, 48.22 W, 320.00 W, 400.00 W

2025/12/10 19:29:42.553, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P2, 3, 3, 16, 16, 47, 0 %, 0 %, 16376 MiB, 15118 MiB, 793 MiB, Not Active, Not Active, Not Active, 48.20 W, 320.00 W, 400.00 W

2025/12/10 19:29:44.555, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P5, 3, 2, 16, 16, 46, 5 %, 4 %, 16376 MiB, 15118 MiB, 793 MiB, Not Active, Not Active, Not Active, 27.30 W, 320.00 W, 400.00 W

2025/12/10 19:29:46.559, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P8, 3, 1, 16, 16, 46, 12 %, 7 %, 16376 MiB, 15118 MiB, 793 MiB, Not Active, Not Active, Not Active, 23.79 W, 320.00 W, 400.00 W

2025/12/10 19:29:48.561, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P8, 3, 1, 16, 16, 46, 2 %, 8 %, 16376 MiB, 15118 MiB, 793 MiB, Not Active, Not Active, Not Active, 24.35 W, 320.00 W, 400.00 W

2025/12/10 19:29:50.563, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P8, 3, 1, 16, 16, 46, 5 %, 8 %, 16376 MiB, 15118 MiB, 793 MiB, Not Active, Not Active, Not Active, 24.86 W, 320.00 W, 400.00 W

2025/12/10 19:29:52.565, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P8, 3, 1, 16, 16, 46, 1 %, 7 %, 16376 MiB, 15118 MiB, 793 MiB, Not Active, Not Active, Not Active, 24.89 W, 320.00 W, 400.00 W

2025/12/10 19:29:54.568, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P8, 3, 1, 16, 16, 46, 11 %, 8 %, 16376 MiB, 15118 MiB, 793 MiB, Not Active, Not Active, Not Active, 24.68 W, 320.00 W, 400.00 W

2025/12/10 19:29:56.570, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P8, 3, 1, 16, 16, 46, 5 %, 16 %, 16376 MiB, 15117 MiB, 793 MiB, Not Active, Not Active, Not Active, 21.04 W, 320.00 W, 400.00 W

2025/12/10 19:29:58.572, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P8, 3, 1, 16, 16, 46, 0 %, 16 %, 16376 MiB, 15117 MiB, 794 MiB, Not Active, Not Active, Not Active, 16.78 W, 320.00 W, 400.00 W

2025/12/10 19:30:00.574, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P8, 3, 1, 16, 16, 46, 0 %, 38 %, 16376 MiB, 15117 MiB, 794 MiB, Not Active, Not Active, Not Active, 17.08 W, 320.00 W, 400.00 W

2025/12/10 19:30:02.576, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P8, 3, 1, 16, 16, 46, 0 %, 21 %, 16376 MiB, 15117 MiB, 794 MiB, Not Active, Not Active, Not Active, 17.81 W, 320.00 W, 400.00 W

2025/12/10 19:30:04.581, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P8, 3, 1, 16, 16, 46, 0 %, 28 %, 16376 MiB, 15118 MiB, 793 MiB, Not Active, Not Active, Not Active, 17.44 W, 320.00 W, 400.00 W

2025/12/10 19:30:06.583, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P8, 3, 1, 16, 16, 46, 0 %, 28 %, 16376 MiB, 15118 MiB, 793 MiB, Not Active, Not Active, Not Active, 17.26 W, 320.00 W, 400.00 W

2025/12/10 19:30:08.585, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P8, 3, 1, 16, 16, 46, 38 %, 41 %, 16376 MiB, 15118 MiB, 793 MiB, Not Active, Not Active, Not Active, 22.06 W, 320.00 W, 400.00 W

2025/12/10 19:30:10.587, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P8, 3, 1, 16, 16, 46, 0 %, 38 %, 16376 MiB, 15118 MiB, 793 MiB, Not Active, Not Active, Not Active, 17.12 W, 320.00 W, 400.00 W

2025/12/10 19:30:12.590, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P8, 3, 1, 16, 16, 46, 0 %, 26 %, 16376 MiB, 15118 MiB, 793 MiB, Not Active, Not Active, Not Active, 16.55 W, 320.00 W, 400.00 W

2025/12/10 19:30:14.592, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P8, 3, 1, 16, 16, 46, 2 %, 18 %, 16376 MiB, 15118 MiB, 793 MiB, Not Active, Not Active, Not Active, 19.06 W, 320.00 W, 400.00 W

2025/12/10 19:30:16.594, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P8, 3, 1, 16, 16, 46, 0 %, 34 %, 16376 MiB, 15118 MiB, 793 MiB, Not Active, Not Active, Not Active, 17.16 W, 320.00 W, 400.00 W

2025/12/10 19:30:18.598, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P8, 3, 1, 16, 16, 46, 0 %, 32 %, 16376 MiB, 15118 MiB, 793 MiB, Not Active, Not Active, Not Active, 16.88 W, 320.00 W, 400.00 W

2025/12/10 19:30:20.600, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P8, 3, 1, 16, 16, 46, 0 %, 35 %, 16376 MiB, 15118 MiB, 793 MiB, Not Active, Not Active, Not Active, 16.75 W, 320.00 W, 400.00 W

2025/12/10 19:30:22.602, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P8, 3, 1, 16, 16, 46, 1 %, 34 %, 16376 MiB, 15118 MiB, 793 MiB, Not Active, Not Active, Not Active, 17.36 W, 320.00 W, 400.00 W

Fixed rates, Ubuntu

Measured test durations:

Avg. duration: 1073.57 ms

Avg. deviation: 4.24725 ms

(rel. avg. deviation: 0.395619 %)

nvidia-smi output:

~$ nvidia-smi --query-gpu=timestamp,name,pci.bus_id,driver_version,pstate,pcie.link.gen.max,pcie.link.gen.current,pcie.link.width.max,pcie.link.width.current,temperature.gpu,utilization.gpu,utilization.memory,memory.total,memory.free,memory.used,clocks_event_reasons.hw_thermal_slowdown,clocks_event_reasons.hw_power_brake_slowdown,clocks_event_reasons.sw_thermal_slowdown,power.draw.instant,power.limit,power.max_limit --format=csv -l 2

timestamp, name, pci.bus_id, driver_version, pstate, pcie.link.gen.max, pcie.link.gen.current, pcie.link.width.max, pcie.link.width.current, temperature.gpu, utilization.gpu [%], utilization.memory [%], memory.total [MiB], memory.free [MiB], memory.used [MiB], clocks_event_reasons.hw_thermal_slowdown, clocks_event_reasons.hw_power_brake_slowdown, clocks_event_reasons.sw_thermal_slowdown, power.draw.instant [W], power.limit [W], power.max_limit [W]

2025/12/10 19:46:24.687, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P3, 3, 3, 16, 16, 50, 0 %, 1 %, 16376 MiB, 15444 MiB, 467 MiB, Not Active, Not Active, Not Active, 49.51 W, 320.00 W, 400.00 W

2025/12/10 19:46:26.692, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P3, 3, 3, 16, 16, 50, 0 %, 0 %, 16376 MiB, 15444 MiB, 467 MiB, Not Active, Not Active, Not Active, 49.75 W, 320.00 W, 400.00 W

2025/12/10 19:46:28.696, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P3, 3, 3, 16, 16, 50, 0 %, 1 %, 16376 MiB, 15444 MiB, 467 MiB, Not Active, Not Active, Not Active, 49.60 W, 320.00 W, 400.00 W

2025/12/10 19:46:30.700, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P3, 3, 3, 16, 16, 50, 0 %, 1 %, 16376 MiB, 15444 MiB, 467 MiB, Not Active, Not Active, Not Active, 49.57 W, 320.00 W, 400.00 W

2025/12/10 19:46:32.704, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P3, 3, 3, 16, 16, 50, 0 %, 1 %, 16376 MiB, 15444 MiB, 467 MiB, Not Active, Not Active, Not Active, 49.99 W, 320.00 W, 400.00 W

2025/12/10 19:46:34.707, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P3, 3, 3, 16, 16, 50, 0 %, 1 %, 16376 MiB, 15198 MiB, 713 MiB, Not Active, Not Active, Not Active, 49.92 W, 320.00 W, 400.00 W

2025/12/10 19:46:36.708, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P3, 3, 3, 16, 16, 50, 0 %, 1 %, 16376 MiB, 15182 MiB, 729 MiB, Not Active, Not Active, Not Active, 50.16 W, 320.00 W, 400.00 W

2025/12/10 19:46:38.710, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P3, 3, 3, 16, 16, 50, 0 %, 1 %, 16376 MiB, 15182 MiB, 729 MiB, Not Active, Not Active, Not Active, 49.94 W, 320.00 W, 400.00 W

2025/12/10 19:46:40.711, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P3, 3, 3, 16, 16, 50, 0 %, 1 %, 16376 MiB, 15182 MiB, 729 MiB, Not Active, Not Active, Not Active, 50.34 W, 320.00 W, 400.00 W

2025/12/10 19:46:42.713, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P3, 3, 3, 16, 16, 50, 0 %, 1 %, 16376 MiB, 15182 MiB, 729 MiB, Not Active, Not Active, Not Active, 49.15 W, 320.00 W, 400.00 W

2025/12/10 19:46:44.716, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P3, 3, 3, 16, 16, 50, 0 %, 1 %, 16376 MiB, 15182 MiB, 729 MiB, Not Active, Not Active, Not Active, 50.23 W, 320.00 W, 400.00 W

2025/12/10 19:46:46.717, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P3, 3, 3, 16, 16, 50, 3 %, 1 %, 16376 MiB, 15182 MiB, 729 MiB, Not Active, Not Active, Not Active, 50.55 W, 320.00 W, 400.00 W

2025/12/10 19:46:48.721, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P3, 3, 3, 16, 16, 51, 1 %, 1 %, 16376 MiB, 15182 MiB, 729 MiB, Not Active, Not Active, Not Active, 50.85 W, 320.00 W, 400.00 W

2025/12/10 19:46:50.722, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P3, 3, 3, 16, 16, 51, 0 %, 1 %, 16376 MiB, 15182 MiB, 729 MiB, Not Active, Not Active, Not Active, 50.17 W, 320.00 W, 400.00 W

2025/12/10 19:46:52.724, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P3, 3, 3, 16, 16, 51, 0 %, 1 %, 16376 MiB, 15182 MiB, 729 MiB, Not Active, Not Active, Not Active, 50.56 W, 320.00 W, 400.00 W

2025/12/10 19:46:54.725, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P3, 3, 3, 16, 16, 51, 2 %, 1 %, 16376 MiB, 15182 MiB, 729 MiB, Not Active, Not Active, Not Active, 50.65 W, 320.00 W, 400.00 W

2025/12/10 19:46:56.729, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P3, 3, 3, 16, 16, 51, 0 %, 1 %, 16376 MiB, 15182 MiB, 729 MiB, Not Active, Not Active, Not Active, 50.57 W, 320.00 W, 400.00 W

2025/12/10 19:46:58.730, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P3, 3, 3, 16, 16, 51, 0 %, 1 %, 16376 MiB, 15182 MiB, 729 MiB, Not Active, Not Active, Not Active, 50.30 W, 320.00 W, 400.00 W

2025/12/10 19:47:00.732, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P3, 3, 3, 16, 16, 51, 2 %, 1 %, 16376 MiB, 15182 MiB, 729 MiB, Not Active, Not Active, Not Active, 50.52 W, 320.00 W, 400.00 W

2025/12/10 19:47:02.734, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P3, 3, 3, 16, 16, 51, 1 %, 1 %, 16376 MiB, 15182 MiB, 729 MiB, Not Active, Not Active, Not Active, 49.94 W, 320.00 W, 400.00 W

2025/12/10 19:47:04.736, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P3, 3, 3, 16, 16, 51, 0 %, 0 %, 16376 MiB, 15181 MiB, 729 MiB, Not Active, Not Active, Not Active, 49.84 W, 320.00 W, 400.00 W

2025/12/10 19:47:06.738, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P3, 3, 3, 16, 16, 51, 2 %, 1 %, 16376 MiB, 15182 MiB, 729 MiB, Not Active, Not Active, Not Active, 51.18 W, 320.00 W, 400.00 W

2025/12/10 19:47:08.741, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P3, 3, 3, 16, 16, 51, 1 %, 1 %, 16376 MiB, 15182 MiB, 729 MiB, Not Active, Not Active, Not Active, 50.99 W, 320.00 W, 400.00 W

2025/12/10 19:47:10.742, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P3, 3, 3, 16, 16, 51, 1 %, 1 %, 16376 MiB, 15182 MiB, 729 MiB, Not Active, Not Active, Not Active, 50.59 W, 320.00 W, 400.00 W

2025/12/10 19:47:12.744, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P3, 3, 3, 16, 16, 51, 2 %, 1 %, 16376 MiB, 15182 MiB, 729 MiB, Not Active, Not Active, Not Active, 50.93 W, 320.00 W, 400.00 W

2025/12/10 19:47:14.746, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P3, 3, 3, 16, 16, 51, 2 %, 1 %, 16376 MiB, 15182 MiB, 729 MiB, Not Active, Not Active, Not Active, 50.20 W, 320.00 W, 400.00 W

2025/12/10 19:47:16.750, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P3, 3, 3, 16, 16, 51, 1 %, 1 %, 16376 MiB, 15182 MiB, 728 MiB, Not Active, Not Active, Not Active, 50.84 W, 320.00 W, 400.00 W

2025/12/10 19:47:18.751, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P3, 3, 3, 16, 16, 51, 0 %, 1 %, 16376 MiB, 15182 MiB, 728 MiB, Not Active, Not Active, Not Active, 50.27 W, 320.00 W, 400.00 W

2025/12/10 19:47:20.753, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P3, 3, 3, 16, 16, 51, 0 %, 0 %, 16376 MiB, 15128 MiB, 783 MiB, Not Active, Not Active, Not Active, 49.78 W, 320.00 W, 400.00 W

2025/12/10 19:47:22.756, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P3, 3, 3, 16, 16, 51, 0 %, 0 %, 16376 MiB, 15128 MiB, 783 MiB, Not Active, Not Active, Not Active, 49.41 W, 320.00 W, 400.00 W

2025/12/10 19:47:24.757, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P3, 3, 3, 16, 16, 51, 25 %, 6 %, 16376 MiB, 15095 MiB, 816 MiB, Not Active, Not Active, Not Active, 56.05 W, 320.00 W, 400.00 W

2025/12/10 19:47:26.760, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P3, 3, 3, 16, 16, 51, 4 %, 1 %, 16376 MiB, 15097 MiB, 814 MiB, Not Active, Not Active, Not Active, 50.59 W, 320.00 W, 400.00 W

2025/12/10 19:47:28.761, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P3, 3, 3, 16, 16, 51, 0 %, 0 %, 16376 MiB, 15131 MiB, 780 MiB, Not Active, Not Active, Not Active, 49.64 W, 320.00 W, 400.00 W

2025/12/10 19:47:30.765, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P3, 3, 3, 16, 16, 51, 0 %, 1 %, 16376 MiB, 15130 MiB, 781 MiB, Not Active, Not Active, Not Active, 50.45 W, 320.00 W, 400.00 W

2025/12/10 19:47:32.766, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P3, 3, 3, 16, 16, 51, 3 %, 2 %, 16376 MiB, 15097 MiB, 814 MiB, Not Active, Not Active, Not Active, 51.12 W, 320.00 W, 400.00 W

2025/12/10 19:47:34.769, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P3, 3, 3, 16, 16, 51, 1 %, 1 %, 16376 MiB, 15095 MiB, 816 MiB, Not Active, Not Active, Not Active, 50.45 W, 320.00 W, 400.00 W

2025/12/10 19:47:36.770, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P3, 3, 3, 16, 16, 51, 0 %, 1 %, 16376 MiB, 15096 MiB, 815 MiB, Not Active, Not Active, Not Active, 50.58 W, 320.00 W, 400.00 W

2025/12/10 19:47:38.772, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P3, 3, 3, 16, 16, 51, 0 %, 1 %, 16376 MiB, 15128 MiB, 783 MiB, Not Active, Not Active, Not Active, 50.21 W, 320.00 W, 400.00 W

2025/12/10 19:47:40.775, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P3, 3, 3, 16, 16, 51, 0 %, 1 %, 16376 MiB, 15128 MiB, 783 MiB, Not Active, Not Active, Not Active, 50.37 W, 320.00 W, 400.00 W

2025/12/10 19:47:42.776, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P3, 3, 3, 16, 16, 52, 0 %, 1 %, 16376 MiB, 15130 MiB, 781 MiB, Not Active, Not Active, Not Active, 50.65 W, 320.00 W, 400.00 W

2025/12/10 19:47:44.780, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P3, 3, 3, 16, 16, 52, 0 %, 0 %, 16376 MiB, 15132 MiB, 779 MiB, Not Active, Not Active, Not Active, 50.47 W, 320.00 W, 400.00 W

2025/12/10 19:47:46.781, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P3, 3, 3, 16, 16, 52, 0 %, 1 %, 16376 MiB, 15136 MiB, 775 MiB, Not Active, Not Active, Not Active, 50.60 W, 320.00 W, 400.00 W

2025/12/10 19:47:48.783, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P3, 3, 3, 16, 16, 52, 0 %, 1 %, 16376 MiB, 15157 MiB, 754 MiB, Not Active, Not Active, Not Active, 50.17 W, 320.00 W, 400.00 W

2025/12/10 19:47:50.784, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P3, 3, 3, 16, 16, 52, 0 %, 1 %, 16376 MiB, 15189 MiB, 722 MiB, Not Active, Not Active, Not Active, 50.41 W, 320.00 W, 400.00 W

2025/12/10 19:47:52.785, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P3, 3, 3, 16, 16, 54, 32 %, 2 %, 16376 MiB, 6597 MiB, 9314 MiB, Not Active, Not Active, Not Active, 53.76 W, 320.00 W, 400.00 W

2025/12/10 19:47:54.786, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P3, 3, 3, 16, 16, 52, 1 %, 1 %, 16376 MiB, 15184 MiB, 727 MiB, Not Active, Not Active, Not Active, 50.31 W, 320.00 W, 400.00 W

2025/12/10 19:47:56.787, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P3, 3, 3, 16, 16, 52, 1 %, 1 %, 16376 MiB, 15180 MiB, 731 MiB, Not Active, Not Active, Not Active, 50.18 W, 320.00 W, 400.00 W

2025/12/10 19:47:58.788, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P3, 3, 3, 16, 16, 52, 2 %, 1 %, 16376 MiB, 15192 MiB, 719 MiB, Not Active, Not Active, Not Active, 50.51 W, 320.00 W, 400.00 W

2025/12/10 19:48:00.789, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P3, 3, 3, 16, 16, 52, 0 %, 1 %, 16376 MiB, 15180 MiB, 731 MiB, Not Active, Not Active, Not Active, 50.48 W, 320.00 W, 400.00 W

2025/12/10 19:48:02.790, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P3, 3, 3, 16, 16, 52, 2 %, 1 %, 16376 MiB, 15180 MiB, 731 MiB, Not Active, Not Active, Not Active, 50.63 W, 320.00 W, 400.00 W

2025/12/10 19:48:04.792, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P3, 3, 3, 16, 16, 52, 1 %, 1 %, 16376 MiB, 15180 MiB, 731 MiB, Not Active, Not Active, Not Active, 50.56 W, 320.00 W, 400.00 W

2025/12/10 19:48:06.794, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P3, 3, 3, 16, 16, 52, 1 %, 1 %, 16376 MiB, 15180 MiB, 731 MiB, Not Active, Not Active, Not Active, 50.89 W, 320.00 W, 400.00 W

2025/12/10 19:48:08.802, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P3, 3, 3, 16, 16, 52, 0 %, 1 %, 16376 MiB, 15179 MiB, 732 MiB, Not Active, Not Active, Not Active, 50.83 W, 320.00 W, 400.00 W

2025/12/10 19:48:10.803, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P3, 3, 3, 16, 16, 52, 0 %, 1 %, 16376 MiB, 15178 MiB, 733 MiB, Not Active, Not Active, Not Active, 50.55 W, 320.00 W, 400.00 W

2025/12/10 19:48:12.807, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P3, 3, 3, 16, 16, 52, 1 %, 1 %, 16376 MiB, 15179 MiB, 732 MiB, Not Active, Not Active, Not Active, 50.74 W, 320.00 W, 400.00 W

2025/12/10 19:48:14.808, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P3, 3, 3, 16, 16, 52, 0 %, 1 %, 16376 MiB, 15179 MiB, 732 MiB, Not Active, Not Active, Not Active, 50.79 W, 320.00 W, 400.00 W

2025/12/10 19:48:16.811, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P3, 3, 3, 16, 16, 52, 1 %, 1 %, 16376 MiB, 15179 MiB, 732 MiB, Not Active, Not Active, Not Active, 50.66 W, 320.00 W, 400.00 W

2025/12/10 19:48:18.812, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P3, 3, 3, 16, 16, 52, 0 %, 1 %, 16376 MiB, 15179 MiB, 732 MiB, Not Active, Not Active, Not Active, 50.34 W, 320.00 W, 400.00 W

2025/12/10 19:48:20.814, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P3, 3, 3, 16, 16, 52, 0 %, 1 %, 16376 MiB, 15179 MiB, 732 MiB, Not Active, Not Active, Not Active, 50.48 W, 320.00 W, 400.00 W

2025/12/10 19:48:22.816, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P3, 3, 3, 16, 16, 52, 0 %, 1 %, 16376 MiB, 15179 MiB, 732 MiB, Not Active, Not Active, Not Active, 50.31 W, 320.00 W, 400.00 W

2025/12/10 19:48:24.818, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P3, 3, 3, 16, 16, 52, 0 %, 1 %, 16376 MiB, 15179 MiB, 732 MiB, Not Active, Not Active, Not Active, 50.57 W, 320.00 W, 400.00 W

2025/12/10 19:48:26.820, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P3, 3, 3, 16, 16, 52, 0 %, 1 %, 16376 MiB, 15179 MiB, 732 MiB, Not Active, Not Active, Not Active, 50.23 W, 320.00 W, 400.00 W
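For anyone who wants to check the P-state and PCIe link generation over a whole run without reading every row, here is a minimal sketch of how the CSV readings could be tallied. The column list mirrors the --query-gpu fields used above. The function name summarise and the two-row sample are only for illustration (the two rows are copied from the log above).

```python
import csv
from collections import Counter
from io import StringIO

# Column order of the --query-gpu call used for the logs in this post.
FIELDS = [
    "timestamp", "name", "pci.bus_id", "driver_version", "pstate",
    "pcie.link.gen.max", "pcie.link.gen.current",
    "pcie.link.width.max", "pcie.link.width.current",
    "temperature.gpu", "utilization.gpu", "utilization.memory",
    "memory.total", "memory.free", "memory.used",
    "clocks_event_reasons.hw_thermal_slowdown",
    "clocks_event_reasons.hw_power_brake_slowdown",
    "clocks_event_reasons.sw_thermal_slowdown",
    "power.draw.instant", "power.limit", "power.max_limit",
]


def summarise(log_text):
    """Count P-states and current PCIe generations, and average the power draw."""
    pstates = Counter()
    link_gens = Counter()
    power_watts = []
    reader = csv.reader(StringIO(log_text), skipinitialspace=True)
    for row in reader:
        # Skip blank lines, the header row and anything with the wrong column count.
        if len(row) != len(FIELDS) or row[0].strip() == "timestamp":
            continue
        record = {key: value.strip() for key, value in zip(FIELDS, row)}
        pstates[record["pstate"]] += 1
        link_gens[record["pcie.link.gen.current"]] += 1
        # Values look like "50.56 W"; keep only the number.
        power_watts.append(float(record["power.draw.instant"].split()[0]))
    mean_power = sum(power_watts) / len(power_watts) if power_watts else None
    return pstates, link_gens, mean_power


# Two rows copied from the Ubuntu log above, just to exercise the parser.
SAMPLE = """\
2025/12/10 19:46:52.724, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P3, 3, 3, 16, 16, 51, 0 %, 1 %, 16376 MiB, 15182 MiB, 729 MiB, Not Active, Not Active, Not Active, 50.56 W, 320.00 W, 400.00 W

2025/12/10 19:46:54.725, NVIDIA GeForce RTX 4080, 00000000:01:00.0, 580.105.08, P3, 3, 3, 16, 16, 51, 2 %, 1 %, 16376 MiB, 15182 MiB, 729 MiB, Not Active, Not Active, Not Active, 50.65 W, 320.00 W, 400.00 W
"""

if __name__ == "__main__":
    pstates, link_gens, mean_power = summarise(SAMPLE)
    print("P-states:", dict(pstates))
    print("PCIe gen (current):", dict(link_gens))
    print("Mean power draw [W]:", mean_power)
```

Feeding a complete log into summarise instead of SAMPLE should give the same kind of tally for a full run.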

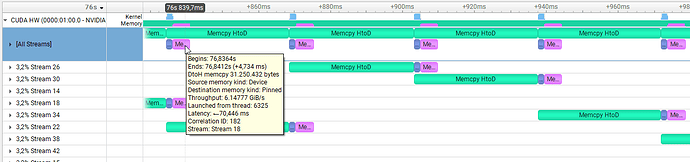

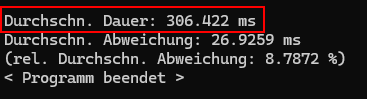

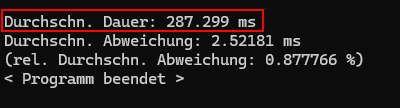

What we see is this:

On Windows, using fixed rates improves the performance of the test code by about 27 %. Also, the relative standard deviation of the measured durations drops from almost 5 % to 0.5 %. Meanwhile, Nsight Systems shows there’s still no overlapping of transfers and computation happening here. The improvement in performance seems to be due to slightly better kernel execution times, which would be expected if we keep the GPU in a high performance state. Memory transfer rates for variable and fixed rates are about the same, somewhere between 22.5 GiB/s and 24.5 GiB/s.

On Ubuntu, overall execution times stay the same between variable and fixed rates. Okay? Kernel execution here, too, is a bit faster with fixed rates. Also, under Ubuntu there is overlapping happening between kernel executions, H2D transfers and D2H transfers. But memory transfers are happening really slowly, at less than 7 GiB/s.

I strongly feel that the explanation - and possibly solution - for this behaviour on Ubuntu lies in the following bits of information. Note that all tests listed above were run using the same GPU, GeForce RTX 4080. Only the OS (plus drivers, of course) changed:

- On Windows, nvidia-smi states the device is using PCIe Gen 4 x16, while on Ubuntu the maximum seems to be PCIe Gen 3 x16.

- On Windows, cudaDeviceProp states an asyncEngineCount of 1, which indicates that concurrent kernel execution and memory transfers are possible, but not concurrent H2D and D2H transfers. On Ubuntu, asyncEngineCount is stated to be 2, which would mean that concurrent H2D and D2H transfers are also possible.

- On Windows, there is no overlapping whatsoever to be seen in Nsight Systems. On Ubuntu, I see maximum overlapping with kernel executions, H2D and D2H transfers all happening at the same time.
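To put the PCIe point into numbers, here is a rough sketch of the theoretical per-direction link ceiling for both generations. It uses the published raw rates (8 GT/s per lane for Gen 3, 16 GT/s for Gen 4) and the 128b/130b line encoding. It ignores packet and protocol overhead, so real transfers will land somewhat below these figures. The function name pcie_peak_gib_per_s is just a helper I made up for this post.

```python
# Raw signalling rate per lane (GT/s) and line-encoding efficiency per PCIe generation.
# Gen 3 and Gen 4 both use 128b/130b encoding.
PCIE_GENERATIONS = {
    3: (8.0, 128 / 130),
    4: (16.0, 128 / 130),
}

GIB = 2 ** 30  # bytes per GiB


def pcie_peak_gib_per_s(generation, lanes):
    """Theoretical one-direction link bandwidth in GiB/s, before protocol overhead."""
    gt_per_s, efficiency = PCIE_GENERATIONS[generation]
    payload_bits_per_s = gt_per_s * 1e9 * efficiency * lanes
    return payload_bits_per_s / 8 / GIB


if __name__ == "__main__":
    for gen in (3, 4):
        peak = pcie_peak_gib_per_s(gen, 16)
        print(f"PCIe Gen {gen} x16: {peak:.2f} GiB/s per direction")
```

This gives roughly 14.7 GiB/s for Gen 3 x16 and 29.3 GiB/s for Gen 4 x16. So the 22.5 to 24.5 GiB/s measured on Windows looks plausible for a Gen 4 link, while the less than 7 GiB/s on Ubuntu is under half of even the Gen 3 ceiling. If that estimate holds, the lower link generation alone probably does not explain the slow transfers.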

Surely, this behaviour must have some explanation in how the OS or the driver utilizes the hardware? I’d assume there should even be a way to configure this? Anyone?