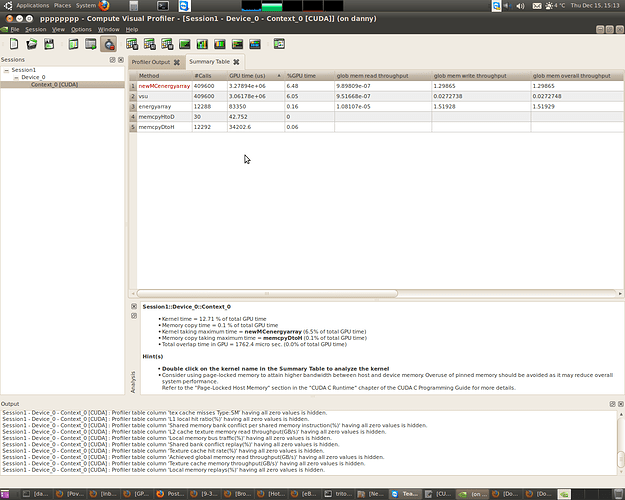

Memory Throughput Analysis for kernel newMCenergyarray on device Tesla C2070

Kernel requested global memory read throughput(GB/s): 6.16

Kernel requested global memory write throughput(GB/s): 1.03

Kernel requested global memory throughput(GB/s): 7.18

L1 cache read throughput(GB/s): 35.28

L1 cache global hit ratio (%): 74.56

Texture cache memory throughput(GB/s): 0.00

Texture cache hit rate(%): 0.00

L2 cache texture memory read throughput(GB/s): 0.00

L2 cache global memory read throughput(GB/s): 3.13

L2 cache global memory write throughput(GB/s): 1.03

L2 cache global memory throughput(GB/s): 4.17

Local memory bus traffic(%): 0.00

Global memory excess load(%): -96.62

Global memory excess store(%): 0.70

Achieved global memory read throughput(GB/s): 0.00

Achieved global memory write throughput(GB/s): 1.30

Achieved global memory throughput(GB/s): 1.30

Peak global memory throughput(GB/s): 143.42

Hint(s)

Consider using shared memory as a user-managed cache for frequently accessed global memory resources.

Refer to the “Shared Memory” section in the “CUDA C Runtime” chapter of the CUDA C Programming Guide for more details.
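The shared memory hint above can be illustrated with a minimal sketch. The source of `newMCenergyarray` is not shown, so the kernel below is a hypothetical stand-in: a 3-point stencil in which every input element is needed by three threads. The names `stencil3_shared`, `run_stencil3`, and `TILE` are assumptions made for illustration, and error checking is omitted for brevity.

```cuda
#include <cuda_runtime.h>
#include <vector>

#define TILE 256  // threads per block; an assumed value for this sketch

// Hypothetical stencil: out[i] = in[i-1] + in[i] + in[i+1], with zeros
// outside the array. Each block stages its inputs in shared memory once,
// so neighbouring threads reuse them from on-chip storage.
__global__ void stencil3_shared(const float* in, float* out, int n)
{
    __shared__ float tile[TILE + 2];   // tile plus one halo cell per side
    int gid = blockIdx.x * blockDim.x + threadIdx.x;
    int lid = threadIdx.x + 1;         // shifted by one for the left halo

    // Every thread loads its own element (0 past the end of the array).
    tile[lid] = (gid < n) ? in[gid] : 0.0f;
    // The first and last threads of the block also load the halo cells.
    if (threadIdx.x == 0)
        tile[0] = (gid > 0 && gid - 1 < n) ? in[gid - 1] : 0.0f;
    if (threadIdx.x == blockDim.x - 1)
        tile[lid + 1] = (gid + 1 < n) ? in[gid + 1] : 0.0f;
    __syncthreads();                   // the tile must be complete before use

    if (gid < n)
        out[gid] = tile[lid - 1] + tile[lid] + tile[lid + 1];
}

// Host wrapper: copies the input to the device, runs the kernel with
// TILE threads per block, and copies the result back.
std::vector<float> run_stencil3(const std::vector<float>& h_in)
{
    int n = static_cast<int>(h_in.size());
    std::vector<float> h_out(n);
    if (n == 0) return h_out;

    float *d_in = nullptr, *d_out = nullptr;
    size_t bytes = n * sizeof(float);
    cudaMalloc(&d_in, bytes);
    cudaMalloc(&d_out, bytes);
    cudaMemcpy(d_in, h_in.data(), bytes, cudaMemcpyHostToDevice);

    int blocks = (n + TILE - 1) / TILE;
    stencil3_shared<<<blocks, TILE>>>(d_in, d_out, n);

    cudaMemcpy(h_out.data(), d_out, bytes, cudaMemcpyDeviceToHost);
    cudaFree(d_in);
    cudaFree(d_out);
    return h_out;
}
```

Whether this helps the real kernel depends on how much reuse it has. With an L1 global hit ratio of 74.56% already reported above, the gain from manual caching may be modest and is worth measuring rather than assuming.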

The achieved global memory throughput is low compared to the peak global memory throughput. To get closer to peak global memory throughput, try the following:

Launch enough threads to hide memory latency (check occupancy analysis);

Process more data per thread to hide memory latency;

Consider using texture memory for read-only global memory. Texture memory has its own cache, so it does not pollute the L1 cache, and that cache is also optimized for 2D spatial locality.

Refer to the “Texture Memory” section in the “CUDA C Runtime” chapter of the CUDA C Programming Guide for more details.
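Of the hints above, "process more data per thread" is the easiest to sketch without knowing the real kernel. One common pattern is a grid-stride loop, where a fixed-size grid walks over the whole array so that each thread handles several elements. The kernel below is a hypothetical element-wise example, not the profiled `newMCenergyarray`. The names `saxpy_grid_stride` and `run_saxpy` and the launch configuration of 2 blocks of 64 threads are assumptions chosen only to make the loop visible, and error checking is omitted.

```cuda
#include <cuda_runtime.h>
#include <vector>

// Hypothetical element-wise kernel: y[i] = a * x[i] + y[i].
// The loop strides by the total number of threads in the grid, so a
// thread processes elements i, i + stride, i + 2*stride, ... up to n.
__global__ void saxpy_grid_stride(int n, float a, const float* x, float* y)
{
    int stride = gridDim.x * blockDim.x;
    for (int i = blockIdx.x * blockDim.x + threadIdx.x; i < n; i += stride)
        y[i] = a * x[i] + y[i];
}

// Host wrapper: runs the kernel on copies of hx and hy and returns y.
std::vector<float> run_saxpy(float a, const std::vector<float>& hx,
                             std::vector<float> hy)
{
    int n = static_cast<int>(hx.size());
    if (n == 0) return hy;

    float *dx = nullptr, *dy = nullptr;
    size_t bytes = n * sizeof(float);
    cudaMalloc(&dx, bytes);
    cudaMalloc(&dy, bytes);
    cudaMemcpy(dx, hx.data(), bytes, cudaMemcpyHostToDevice);
    cudaMemcpy(dy, hy.data(), bytes, cudaMemcpyHostToDevice);

    // Deliberately small grid (assumed values): with 128 threads in total,
    // any n above 128 makes each thread process more than one element.
    saxpy_grid_stride<<<2, 64>>>(n, a, dx, dy);

    cudaMemcpy(hy.data(), dy, bytes, cudaMemcpyDeviceToHost);
    cudaFree(dx);
    cudaFree(dy);
    return hy;
}
```

In practice the grid size would be tuned against the occupancy analysis mentioned in the first hint. The tiny grid here is for illustration only and would not saturate a Tesla C2070.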

Factors that may affect analysis

If a display is attached to the GPU that is being profiled, the DRAM reads, DRAM writes, L2 read hit ratio and L2 write hit ratio may include data for the display in addition to the data for the kernel that is being profiled.

The thresholds that are used to provide the hints may not be accurate in all cases. It is recommended to analyze all derived statistics and signals and correlate them with your algorithm before arriving at any conclusion.

The value of a particular derived statistic provided in the analysis window is the average value of the derived statistic for all calls of that kernel. To know the value of the derived statistic corresponding to a particular call, please refer to the kernel profiler table.

The counters of type SM are collected for only one multiprocessor in the chip, and the values are extrapolated to get the behavior of the entire GPU, assuming equal work distribution. This may result in some inaccuracy in the analysis in some cases.

The counters for some derived statistics are collected in different runs of the application. This may cause some inaccuracy in the derived statistics, as the blocks scheduled on each multiprocessor may be different for each run, and for some applications the behavior changes from run to run.