According to this, are there any example resources for running inference on this model with the DeepStream SDK?

Please refer to deepstream_tao_apps/apps/tao_others/deepstream-mdx-perception-app at master · NVIDIA-AI-IOT/deepstream_tao_apps (github.com)

How can I modify the pipeline to get the re-identification embeddings of the detections out?

See this line: deepstream_tao_apps/apps/tao_others/deepstream-mdx-perception-app/deepstream_mdx_perception_app.c at master · NVIDIA-AI-IOT/deepstream_tao_apps (github.com). The embedding vector is copied from the tensor output to another CUDA buffer.

This architecture is provided in the notebook.

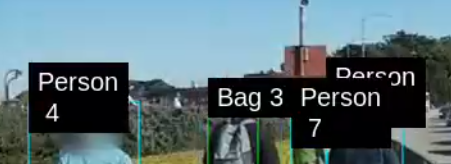

Referring to this output generated from the pipeline

Where are the tracking IDs for the objects generated: by the PeopleNet nvinfer plugin or by the Re-ID model nvinfer plugin? And what type of tracker is used there?

Please refer to NVIDIA-AI-IOT/deepstream-retail-analytics: A DeepStream sample application demonstrating end-to-end retail video analytics for brick-and-mortar retail. (github.com) and Building an End-to-End Retail Analytics Application with NVIDIA DeepStream and NVIDIA TAO Toolkit | NVIDIA Technical Blog

I also need a clarification on how the embeddings are handled.

The notebook description mentions a vector database, saying that the embedding vector can form a query to a database of embeddings and find the closest match.

Is this actually happening now inside the pipeline?

If yes, could you clarify what is happening in the background? Which database is used, and are any configuration options available?

If no, does this mean we need to build a plugin to store and retrieve the embedding from a vector database?

Please refer to NVIDIA-AI-IOT/deepstream-retail-analytics: A DeepStream sample application demonstrating end-to-end retail video analytics for brick-and-mortar retail. (github.com) and Building an End-to-End Retail Analytics Application with NVIDIA DeepStream and NVIDIA TAO Toolkit | NVIDIA Technical Blog

The tracking ID is generated by nvtracker (Gst-nvtracker — DeepStream documentation 6.4 documentation).

Please refer to the configuration file deepstream_tao_apps/configs/app/retail_object_detection_recognition.txt at master · NVIDIA-AI-IOT/deepstream_tao_apps (github.com)

This part is not implemented inside the DeepStream pipeline; it should be implemented outside. For example, in NVIDIA-AI-IOT/deepstream-retail-analytics (a DeepStream sample application demonstrating end-to-end retail video analytics for brick-and-mortar retail), the embedding vectors are sent to the Kafka cloud server and stored in the database in the Kafka server.
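To make the "query a database of embeddings and find the closest match" step concrete, here is a minimal sketch of what such a lookup outside the pipeline could look like. It is not taken from either sample application: `EmbeddingGallery`, the cosine-similarity metric, the 0.7 threshold, and the 4-dimensional vectors are all assumptions for illustration, and a real deployment would likely use an actual vector database instead of an in-memory dict.

```python
import math
from typing import Dict, List, Optional, Tuple


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine similarity of two equal-length vectors; 0.0 if either has zero norm."""
    if len(a) != len(b):
        raise ValueError("embedding dimensions differ")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


class EmbeddingGallery:
    """Hypothetical in-memory stand-in for a vector database (assumption)."""

    def __init__(self, match_threshold: float = 0.7) -> None:
        # The threshold is an arbitrary illustrative value, not a tuned one.
        self.match_threshold = match_threshold
        self._entries: Dict[str, List[float]] = {}

    def add(self, identity: str, embedding: List[float]) -> None:
        """Store (or overwrite) the embedding kept for an identity."""
        self._entries[identity] = list(embedding)

    def query(self, embedding: List[float]) -> Optional[Tuple[str, float]]:
        """Return (identity, score) of the closest entry, or None if below threshold."""
        best: Optional[Tuple[str, float]] = None
        for identity, stored in self._entries.items():
            score = cosine_similarity(embedding, stored)
            if best is None or score > best[1]:
                best = (identity, score)
        if best is not None and best[1] >= self.match_threshold:
            return best
        return None


# Toy 4-dimensional vectors; real Re-ID embeddings have many more dimensions.
gallery = EmbeddingGallery(match_threshold=0.7)
gallery.add("person-1", [1.0, 0.0, 0.0, 0.0])
gallery.add("person-2", [0.0, 1.0, 0.0, 0.0])

match = gallery.query([0.9, 0.1, 0.0, 0.0])      # close to person-1
no_match = gallery.query([0.0, 0.0, 1.0, 0.0])   # orthogonal to every entry
```

In this sketch the consumer that receives the embeddings (for example, whatever reads the messages sent out of the pipeline) would call `query` for each new embedding and `add` when no match is found.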


• Hardware Platform (Jetson / GPU) Jetson AGX

• DeepStream Version 6.4

• JetPack Version (valid for Jetson only) Jetpack 5.1.2

• TensorRT Version 8.5.2.2

• Issue Type Bugs

• How to reproduce the issue ?

I tried to run the PeopleNet re-identification pipeline on the Jetson AGX and found this issue.

The log shows there are some problems with your installation. Does deepstream-test1 work on your board?

On Jetson, DeepStream 6.4 can only run on JetPack 6.0 DP, on the Orin platform.

How could you run DeepStream 6.4 with JetPack 5.1.2?

Please check the compatibility. Quickstart Guide — DeepStream documentation 6.4 documentation
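As a quick sanity check before debugging further, the version pairing can be verified with a small lookup. This is only a sketch: the table below contains just the two Jetson pairings that come up in this thread, and both the table name and the `check_jetson_pairing` helper are hypothetical. The Quickstart Guide remains the authoritative compatibility matrix.

```python
from typing import Optional

# Jetson pairings discussed in this thread only (not a complete matrix).
JETPACK_FOR_DEEPSTREAM = {
    "6.4": "6.0 DP",
    "6.3": "5.1.2",
}


def check_jetson_pairing(deepstream: str, jetpack: str) -> Optional[bool]:
    """Return True/False if the pairing is known, None if the version is not in the table."""
    expected = JETPACK_FOR_DEEPSTREAM.get(deepstream)
    if expected is None:
        return None
    return expected == jetpack


# The setup reported in the original post versus the corrected one.
reported_ok = check_jetson_pairing("6.4", "5.1.2")
corrected_ok = check_jetson_pairing("6.3", "5.1.2")
```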

Yes, it is DeepStream 6.3, my bad.

I tried to run the pipeline inside a Docker container pulled from nvcr.io/nvidia/deepstream:6.3-triton-multiarch

I still found an issue:

Have you installed JetPack 5.1.2 on your host?

Can you install DeepStream 6.3 directly on your board and run the deepstream-test1?