Please provide complete information as applicable to your setup.

• Hardware Platform (Jetson / GPU) GPU

• DeepStream Version 6.3-docker

• JetPack Version (valid for Jetson only)

• TensorRT Version 8.5

• NVIDIA GPU Driver Version (valid for GPU only) 550.142

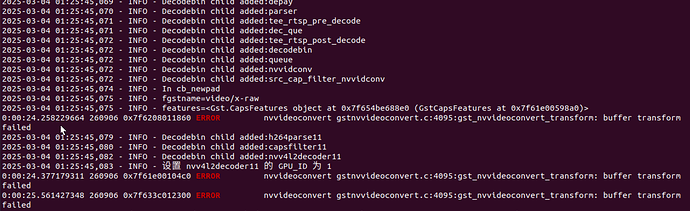

I have three GPUs, and I get the following error when I select the second one by setting gpu-id=1 in the nvinfer config:

0:00:22.544105378 257726 0x7fd778028760 WARN nvinfer gstnvinfer.cpp:1504:gst_nvinfer_process_full_frame:<primary-inference> error: Memory Compatibility Error:Input surface gpu-id doesnt match with configured gpu-id for element, please allocate input using unified memory, or use same gpu-ids OR, if same gpu-ids are used ensure appropriate Cuda memories are used

0:00:22.544159307 257726 0x7fd778028760 WARN nvinfer gstnvinfer.cpp:1504:gst_nvinfer_process_full_frame:<primary-inference> error: surface-gpu-id=0,primary-inference-gpu-id=1

2025-03-03 07:29:56,425 - ERROR - Error from primary-inference: gst-resource-error-quark: Memory Compatibility Error:Input surface gpu-id doesnt match with configured gpu-id for element, please allocate input using unified memory, or use same gpu-ids OR, if same gpu-ids are used ensure appropriate Cuda memories are used (1)

2025-03-03 07:29:56,425 - ERROR - Debugging info: gstnvinfer.cpp(1504): gst_nvinfer_process_full_frame (): /GstPipeline:pipeline0/GstNvInfer:primary-inference:

surface-gpu-id=0,primary-inference-gpu-id=1
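
The last debug line carries the two device indices that disagree: the input surface reaching the element was allocated on GPU 0, while the element itself is configured for GPU 1. As a minimal sketch, assuming the debug text always has the `surface-gpu-id=<n>,<element-name>-gpu-id=<m>` shape seen in this log (the helper name `parse_gpu_mismatch` is hypothetical), the two values can be pulled out like this:

```python
import re

# Assumed shape, copied from the log above:
#   "surface-gpu-id=0,primary-inference-gpu-id=1"
_MISMATCH_RE = re.compile(r"surface-gpu-id=(\d+),(.+?)-gpu-id=(\d+)")


def parse_gpu_mismatch(debug_text):
    """Return (surface_gpu, element_name, element_gpu), or None if absent."""
    match = _MISMATCH_RE.search(debug_text)
    if match is None:
        return None
    return int(match.group(1)), match.group(2), int(match.group(3))
```

Logging the parsed tuple from the bus error handler makes it easier to see which upstream element is still producing buffers on the other device.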

    [property]
    gpu-id=1
    net-scale-factor=0.0039215697906911373
    model-color-format=0
    onnx-file=/home/user/Downloads/TwistLock/best.pt.onnx
    model-engine-file=/home/user/Downloads/TwistLock/twistlock.engine
    #int8-calib-file=calib.table
    labelfile-path=/home/user/Downloads/TwistLock/best.names
    batch-size=1
    network-mode=0
    num-detected-classes=1
    interval=0
    gie-unique-id=1
    process-mode=1
    network-type=0
    cluster-mode=2
    maintain-aspect-ratio=1
    symmetric-padding=1
    #workspace-size=2000
    parse-bbox-func-name=NvDsInferParseYolo
    #parse-bbox-func-name=NvDsInferParseYoloCuda
    custom-lib-path=/home/user/Work/DeepStream-Yolo/nvdsinfer_custom_impl_Yolo/libnvdsinfer_custom_impl_Yolo.so
    engine-create-func-name=NvDsInferYoloCudaEngineGet

    [class-attrs-all]
    nms-iou-threshold=0.45
    pre-cluster-threshold=0.25
    topk=300
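
Because `gpu-id` lives in this file, the application could read it back and reuse the same value wherever else a device index is needed, instead of hard-coding it twice. A minimal sketch using Python's standard `configparser`; the helper name `read_gpu_id` is hypothetical, and falling back to 0 when the key is missing is an assumption of this sketch:

```python
import configparser


def read_gpu_id(config_text, default=0):
    """Return the integer gpu-id from the [property] group of the config text."""
    # interpolation=None keeps any '%' in values from being treated specially.
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(config_text)
    # Lines starting with '#' are skipped as comments by configparser.
    return parser.getint("property", "gpu-id", fallback=default)
```

For the file above, `read_gpu_id(open(path).read())` would yield `1`, where `path` stands for wherever the config is stored.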

Here’s my code:

    def cb_newpad(self, decodebin, decoder_src_pad, data):
        MyLogger.info("In cb_newpad")
        caps = decoder_src_pad.get_current_caps()
        if not caps:
            caps = decoder_src_pad.query_caps()
        gststruct = caps.get_structure(0)
        gstname = gststruct.get_name()
        source_bin = data
        features = caps.get_features(0)

        # Need to check if the pad created by the decodebin is for video and not
        # audio.
        MyLogger.info(f"gstname={gstname}")
        if gstname.find("video") != -1:
            MyLogger.info(f"features={features}")
            if features.contains("memory:NVMM"):
                # Get the source bin ghost pad
                bin_ghost_pad = source_bin.get_static_pad("src")
                if not bin_ghost_pad.set_target(decoder_src_pad):
                    MyLogger.error("Failed to link decoder src pad to source bin ghost pad")
            else:
                MyLogger.error(" Error: Decodebin did not pick nvidia decoder plugin.")

    def decodebin_child_added(self, child_proxy, Object, name, user_data):
        MyLogger.info(f"Decodebin child added:{name}")
        if name.find("decodebin") != -1:
            Object.connect("child-added", self.decodebin_child_added, user_data)

        if "source" in name:
            source_element = child_proxy.get_by_name("source")
            if source_element.find_property('drop-on-latency') is not None:
                Object.set_property("drop-on-latency", True)

    def create_pipeline(self):
        MyLogger.info("Creating Pipeline ")
        self.pipeline = Gst.Pipeline()
        self.is_live = False
        if not self.pipeline:
            MyLogger.error(" Unable to create Pipeline")

        MyLogger.info("Creating streammux ")
        # Create nvstreammux instance to form batches from one or more sources.
        self.streammux = Gst.ElementFactory.make("nvstreammux", "Stream-muxer")
        if not self.streammux:
            MyLogger.error(" Unable to create NvStreamMux ")
            return False
        self.pipeline.add(self.streammux)

        valid_sources = []
        for i in range(self.number_sources):
            self.last_frame_time_dict[i] = time.time()
            MyLogger.info(f"Creating source_bin: {i}")
            uri_name = self.stream_paths[i]
            if uri_name.find("rtsp://") == 0:
                self.is_live = True
            # todo
            self.source_bin = self.create_source_bin(i, uri_name)
            # if not self.source_bin:
            #     MyLogger.error("Unable to create source bin")
            #     return False
            # self.pipeline.add(self.source_bin)
            if self.source_bin is not None:
                # valid_sources.append(source_bin)
                # Add source_bin to the main Pipeline
                valid_sources.append(self.source_bin)
                self.pipeline.add(self.source_bin)
            else:
                MyLogger.error(f"Skipping invalid source: {uri_name}")
                continue
            padname = "sink_%u" % i
            self.sinkpad = self.streammux.get_request_pad(padname)
            if not self.sinkpad:
                MyLogger.error("Unable to create sink pad bin")
                return False
            self.srcpad = self.source_bin.get_static_pad("src")
            if not self.srcpad:
                MyLogger.error("Unable to create src pad bin")
                return False
            self.srcpad.link(self.sinkpad)

        self.number_sources = len(valid_sources)
        # self.streammux.set_property("batch-size", self.number_sources)
        # self.pgie.set_property("batch-size", self.number_sources)
        self.queue1 = Gst.ElementFactory.make("queue", "queue1")
        self.queue2 = Gst.ElementFactory.make("queue", "queue2")
        self.queue3 = Gst.ElementFactory.make("queue", "queue3")
        self.queue4 = Gst.ElementFactory.make("queue", "queue4")
        self.queue5 = Gst.ElementFactory.make("queue", "queue5")
        self.queue6 = Gst.ElementFactory.make("queue", "queue6")
        self.queue7 = Gst.ElementFactory.make("queue", "queue7")
        # self.queue8 = Gst.ElementFactory.make("queue", "queue8")
        self.pipeline.add(self.queue1)
        self.pipeline.add(self.queue2)
        self.pipeline.add(self.queue3)
        self.pipeline.add(self.queue4)
        self.pipeline.add(self.queue5)
        self.pipeline.add(self.queue6)
        self.pipeline.add(self.queue7)
        # self.pipeline.add(self.queue8)

        self.nvdslogger = None
        self.transform = None

        MyLogger.info("Creating Pgie ")
        if self.requested_pgie is not None and (
                self.requested_pgie == 'nvinferserver' or self.requested_pgie == 'nvinferserver-grpc'):
            self.pgie = Gst.ElementFactory.make("nvinferserver", "primary-inference")
        elif self.requested_pgie is not None and self.requested_pgie == 'nvinfer':
            self.pgie = Gst.ElementFactory.make("nvinfer", "primary-inference")
        else:
            self.pgie = Gst.ElementFactory.make("nvinfer", "primary-inference")
        if not self.pgie:
            MyLogger.error(f" Unable to create pgie : {self.requested_pgie}")
            return False

        if self.disable_probe:
            # Use nvdslogger for perf measurement instead of probe function
            MyLogger.info("Creating nvdslogger ")
            self.nvdslogger = Gst.ElementFactory.make("nvdslogger", "nvdslogger")

        MyLogger.info("Creating tiler ")
        self.tiler = Gst.ElementFactory.make("nvmultistreamtiler", "nvtiler")
        if not self.tiler:
            MyLogger.error(" Unable to create tiler")
            return False

        MyLogger.info("Creating nvvidconv")
        self.nvvidconv = Gst.ElementFactory.make("nvvideoconvert", "convertor")
        if not self.nvvidconv:
            MyLogger.error(" Unable to create nvvidconv")
            return False

        MyLogger.info("Creating nvosd")
        self.nvosd = Gst.ElementFactory.make("nvdsosd", "onscreendisplay")
        if not self.nvosd:
            MyLogger.error(" Unable to create nvosd")
            return False
        self.nvosd.set_property('process-mode', OSD_PROCESS_MODE)
        self.nvosd.set_property('display-text', OSD_DISPLAY_TEXT)

        if not int(MyConfigReader.cfg_dict["nvr"]["show"]):
            MyLogger.info("Creating Fakesink ")
            self.sink = Gst.ElementFactory.make("fakesink", "fakesink")
            self.sink.set_property('enable-last-sample', 0)
            self.sink.set_property('sync', 0)
        else:
            if is_aarch64():
                MyLogger.info("Creating transform")
                # self.transform = Gst.ElementFactory.make("nvegltransform", "nvegl-transform")
                # if not self.transform:
                #     MyLogger.error(" Unable to create transform")
            self.sink = Gst.ElementFactory.make("appsink", "appsink")
            MyLogger.info("Creating EGLSink \n")
            self.sink = Gst.ElementFactory.make("nveglglessink", "nvvideo-renderer")
            self.sink.set_property('sync', 0)
            # self.sink = Gst.ElementFactory.make("appsink", f"appsink1")

        self.converter = Gst.ElementFactory.make("nvvideoconvert", "converter2")
        self.capsfilter = Gst.ElementFactory.make("capsfilter", "capsfilter")
        caps = Gst.Caps.from_string("video/x-raw(memory:NVMM), format=RGBA")
        # self.converter.set_property("nvbuf-memory-type", 0)
        mem_type = int(pyds.NVBUF_MEM_CUDA_UNIFIED)
        self.converter.set_property("nvbuf-memory-type", mem_type)
        self.tiler.set_property("nvbuf-memory-type", mem_type)
        self.capsfilter.set_property("caps", caps)
        if not self.sink:
            MyLogger.error(" Unable to create sink element")
            return False

        if self.is_live:
            MyLogger.info("At least one of the sources is live")
            self.streammux.set_property('live-source', 1)
        self.streammux.set_property('width', 1920)
        self.streammux.set_property('height', 1080)
        self.streammux.set_property('batch-size', self.number_sources)
        self.streammux.set_property('batched-push-timeout', 40000)

        if self.requested_pgie == "nvinferserver" and self.config is not None:
            self.pgie.set_property('config-file-path', self.config)
        elif self.requested_pgie == "nvinferserver-grpc" and self.config is not None:
            self.pgie.set_property('config-file-path', self.config)
        elif self.requested_pgie == "nvinfer" and self.config is not None:
            self.pgie.set_property('config-file-path', self.config)
        else:
            # todo
            self.pgie.set_property('config-file-path', self.config)
        pgie_batch_size = self.pgie.get_property("batch-size")
        if pgie_batch_size != self.number_sources:
            # print( pgie_batch_size, ":", self.number_sources)
            MyLogger.warning(
                f"WARNING: Overriding infer-config batch-size {pgie_batch_size} "
                f"with number of sources {self.number_sources}")
            self.pgie.set_property("batch-size", self.number_sources)

        tiler_rows = int(math.sqrt(self.number_sources))
        tiler_columns = int(math.ceil((1.0 * self.number_sources) / tiler_rows))
        self.tiler.set_property("rows", tiler_rows)
        self.tiler.set_property("columns", tiler_columns)
        self.tiler.set_property("width", TILED_OUTPUT_WIDTH)
        self.tiler.set_property("height", TILED_OUTPUT_HEIGHT)
        # todo
        self.sink.set_property("qos", 1)
        if int(MyConfigReader.cfg_dict["nvr"]["show"]):
            # todo
            # self.sink.set_property("emit-signals", True)
            # self.sink.set_property("sync", False)
            # self.sink.set_property("max-buffers", 50)
            # self.sink.set_property("drop", True)
            # self.sink.connect("new-sample", self.on_new_sample)
            pass

        MyLogger.info("Adding elements to Pipeline")
        self.pipeline.add(self.pgie)
        # todo
        self.pipeline.add(self.converter)
        self.pipeline.add(self.capsfilter)
        if self.nvdslogger:
            self.pipeline.add(self.nvdslogger)
        self.pipeline.add(self.tiler)
        self.pipeline.add(self.nvvidconv)
        self.pipeline.add(self.nvosd)
        if self.transform:
            self.pipeline.add(self.transform)
        if int(MyConfigReader.cfg_dict["nvr"]["show"]):
            pass
            # self.pipeline.add(self.encoder)
            # self.pipeline.add(self.parser)
        self.pipeline.add(self.sink)

        MyLogger.info("Linking elements in the Pipeline \n")
        self.streammux.link(self.queue1)
        self.queue1.link(self.pgie)
        self.pgie.link(self.queue2)
        self.queue2.link(self.converter)
        self.converter.link(self.queue3)
        self.queue3.link(self.capsfilter)
        self.capsfilter.link(self.queue4)
        if self.nvdslogger:
            self.queue4.link(self.nvdslogger)
            self.nvdslogger.link(self.tiler)
        else:
            self.queue4.link(self.tiler)
        self.tiler.link(self.queue5)
        self.queue5.link(self.nvvidconv)
        self.nvvidconv.link(self.queue6)
        self.queue6.link(self.nvosd)
        if self.transform:
            self.nvosd.link(self.queue7)
            self.queue7.link(self.transform)
            self.transform.link(self.sink)
        else:
            self.nvosd.link(self.queue7)
            if int(MyConfigReader.cfg_dict["nvr"]["show"]):
                # self.queue5.link(self.converter)
                # self.converter.link(self.capsfilter)
                # self.capsfilter.link(self.sink)
                self.queue7.link(self.sink)
            else:
                self.queue7.link(self.sink)
        return True

    def create_source_bin(self, index, uri):
        MyLogger.info("Creating source bin")
        if not self.check_uri_valid(uri):
            MyLogger.error(f"URI is invalid or unreachable: {uri}")
            return None  # Return None directly to signal that creation failed

        # Create a source GstBin to abstract this bin's content from the rest of the
        # pipeline
        bin_name = "source-bin-%02d" % index
        MyLogger.info(bin_name)
        nbin = Gst.Bin.new(bin_name)
        if not nbin:
            MyLogger.error(" Unable to create source bin")

        if file_loop:
            # use nvurisrcbin to enable file-loop
            uri_decode_bin = Gst.ElementFactory.make("nvurisrcbin", "uri-decode-bin")
            uri_decode_bin.set_property("file-loop", 1)
            MyLogger.info("nvurisrcbin")
        else:
            uri_decode_bin = Gst.ElementFactory.make("uridecodebin", "uri-decode-bin")
            MyLogger.info("uridecodebin")
        if not uri_decode_bin:
            sys.stderr.write(" Unable to create uri decode bin \n")

        # We set the input uri to the source element
        uri_decode_bin.set_property("uri", uri)
        # Connect to the "pad-added" signal of the decodebin which generates a
        # callback once a new pad for raw data has been created by the decodebin
        # todo
        uri_decode_bin.connect("pad-added", self.cb_newpad, nbin)
        self.source_bins[index] = nbin
        uri_decode_bin.connect("child-added", self.decodebin_child_added, nbin)

        Gst.Bin.add(nbin, uri_decode_bin)
        bin_pad = nbin.add_pad(Gst.GhostPad.new_no_target("src", Gst.PadDirection.SRC))
        if not bin_pad:
            sys.stderr.write(" Failed to add ghost pad in source bin \n")
            return None
        return nbin
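
None of the elements created above is given a `gpu-id` in the code, so only nvinfer (through its config file) is pointed at GPU 1. One thing to try, following the "use same gpu-ids" hint in the error message, is to apply the same index to every element that exposes that property. A minimal sketch; the helper name `set_gpu_id` is hypothetical, it relies only on the `find_property` / `set_property` calls already used in the code above, and whether this clears the error in this particular pipeline is an assumption that still needs checking:

```python
def set_gpu_id(elements, gpu_id):
    """Set "gpu-id" on every element that exposes that property.

    Returns the list of elements that were actually updated.
    """
    updated = []
    for element in elements:
        if element is None:
            continue
        # find_property() returns None when the element has no such property,
        # e.g. plain queue elements.
        if element.find_property("gpu-id") is not None:
            element.set_property("gpu-id", gpu_id)
            updated.append(element)
    return updated
```

In `create_pipeline` this could be called as `set_gpu_id([self.streammux, self.pgie, self.converter, self.tiler, self.nvvidconv, self.nvosd], 1)` before the pipeline is started. The decoder is created inside the decode bin, so it would have to be handled separately, for example from `decodebin_child_added` with the same `find_property` check.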