Description

I am trying to optimise my Mask R-CNN model with TensorRT using the ONNX parser. For this, I first converted my model from .h5 to a frozen graph (.pb): resnet50-coco-epoch1.pb

Next, I converted this .pb file to an ONNX model: resnet50-coco-epoch1.onnx.

In this step, I had to specify the input layer dimensions explicitly, but not the output dimensions:

```shell
$ python -m tf2onnx.convert --input '/content/resnet50-coco-epoch1.pb' --inputs input_image:0[2,480,480,3] --outputs mrcnn_class/Reshape:0 --output resnet50-imagenet-epoch5.onnx --opset 12
```

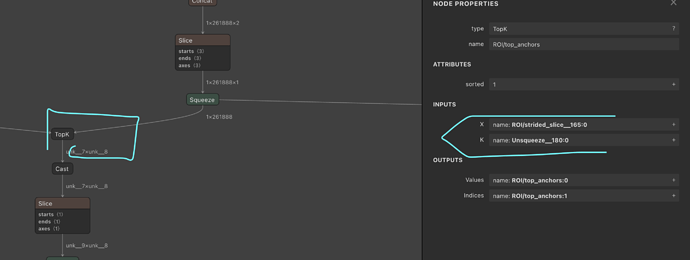

The conversion produced a model, but its outputs still have unknown dimensions.

Next, I tried to build a TensorRT engine with:

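```python
from onnx import ModelProto

import engine as eng  # local helper module providing build_engine() and save_engine()

onnx_path = "resnet50-coco-epoch1.onnx"
engine_name = "resnet50-coco-epoch1.plan"  # hypothetical output file name
batch_size = 1

# Read the ONNX graph and take H, W, C from its first input (N, H, W, C)
model = ModelProto()
with open(onnx_path, "rb") as f:
    model.ParseFromString(f.read())

d0 = model.graph.input[0].type.tensor_type.shape.dim[1].dim_value
d1 = model.graph.input[0].type.tensor_type.shape.dim[2].dim_value
d2 = model.graph.input[0].type.tensor_type.shape.dim[3].dim_value
shape = [batch_size, d0, d1, d2]

engine = eng.build_engine(onnx_path, shape=shape)
eng.save_engine(engine, engine_name)
```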

But I get these errors:

```
[TensorRT] ERROR: Parameter check failed at: ../builder/Network.cpp::addInput::671, condition: isValidDims(dims, hasImplicitBatchDimension())
[TensorRT] ERROR: Network must have at least one output
```

Going through past discussions, I noticed that I had to mark the network output manually. After adding

```python
network.mark_output(network.get_layer(network.num_layers - 1).get_output(0))
```

I get:

```
[TensorRT] ERROR: Parameter check failed at: ../builder/Network.cpp::addInput::671, condition: isValidDims(dims, hasImplicitBatchDimension())
False
python3: ../builder/Network.cpp:863: virtual nvinfer1::ILayer* nvinfer1::Network::getLayer(int) const: Assertion `layerIndex >= 0' failed.
Aborted
```
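The assertion `layerIndex >= 0` means `network.num_layers - 1` was negative, so the network had no layers at all when `get_layer` was called. This suggests the manual `mark_output` call is hitting a symptom, and the ONNX parse itself is what failed. Below is a sketch of a build function that reports the parser's own error messages before touching any layers. It assumes the TensorRT 6/7 Python API (`max_workspace_size`, `build_cuda_engine`, both removed in later releases), and the function name `build_engine_checked` is hypothetical, not part of the `eng` helper:

```python
try:
    import tensorrt as trt
except ImportError:  # allows this module to be imported where TensorRT is absent
    trt = None


def build_engine_checked(onnx_path, shape, workspace_bytes=256 << 20):
    """Parse an ONNX file into an explicit-batch network and build an engine."""
    if trt is None:
        raise RuntimeError("TensorRT is not installed")
    logger = trt.Logger(trt.Logger.WARNING)
    explicit_batch = 1 << int(trt.NetworkDefinitionCreationFlag.EXPLICIT_BATCH)
    with trt.Builder(logger) as builder, \
            builder.create_network(explicit_batch) as network, \
            trt.OnnxParser(network, logger) as parser:
        builder.max_workspace_size = workspace_bytes
        with open(onnx_path, "rb") as f:
            if not parser.parse(f.read()):
                # parse() returns False on failure; collect the parser's reasons
                errors = [str(parser.get_error(i)) for i in range(parser.num_errors)]
                raise RuntimeError("ONNX parse failed:\n" + "\n".join(errors))
        if network.num_layers == 0:
            raise RuntimeError("parsed network has no layers")
        if network.num_outputs == 0:
            # Mark the last layer's output only once layers are known to exist
            last = network.get_layer(network.num_layers - 1)
            network.mark_output(last.get_output(0))
        network.get_input(0).shape = shape
        engine = builder.build_cuda_engine(network)
        if engine is None:
            raise RuntimeError("engine build failed (see TensorRT log)")
        return engine
```

With this version, the underlying parser errors are printed in place of the `getLayer` abort. Those errors presumably explain the `addInput` failure.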

Any help is highly appreciated 🤠.

Environment

TensorRT Version: 6.0.1.10

GPU Type: NVIDIA Jetson AGX Xavier (integrated GPU)

L4T info: # R32 (release), REVISION: 3.1, GCID: 18186506, BOARD: t186ref, EABI: aarch64, DATE: Tue Dec 10 07:03:07 UTC 2019

Nvidia Driver Version:

CUDA Version: 10.0

CUDNN Version: 7.6.3

Operating System + Version: Ubuntu 18.04.5 LTS

Python Version (if applicable): 3.6.9

TensorFlow Version (if applicable): 1.15-gpu

Baremetal or Container (if container which image + tag): Baremetal

Steps To Reproduce

Obtain the ONNX model using the Google Colaboratory notebook HERE