Triton Inference Server Image: nvcr.io/nvidia/tritonserver:20.03.1-py3

GPU: T4

OS: Ubuntu 18.04

Driver: 440.33.01

CUDA version: 10.1, cuDNN 7.6.5

TensorFlow: 2.2

Hi, I am using Triton Inference Server to run inference with dynamic batching.

Here is my config.pbtxt file:

    name: "effdet"
    platform: "tensorflow_savedmodel"
    max_batch_size: 64
    input {
      name: "image_arrays:0"
      data_type: TYPE_UINT8
      dims: -1
      dims: -1
      dims: -1
    }
    output {
      name: "detections:0"
      data_type: TYPE_FP32
      dims: 100
      dims: 7
    }
    instance_group [
      {
        count: 1
        kind: KIND_GPU
      }
    ]
    dynamic_batching {
      preferred_batch_size: [8, 64]
      max_queue_delay_microseconds: 100
    }
    default_model_filename: "model.savedmodel"
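Before digging into the CUDA error itself, a quick lint of the config can rule out two easy mistakes. First, typographic quotes (“ ”), which often appear when a config is pasted through a forum or word processor, are not valid string delimiters in protobuf text format. Second, Triton's config validation rejects a `preferred_batch_size` larger than `max_batch_size`. The `check_config` function below is a hypothetical helper, not part of Triton. It uses simple regexes rather than a real protobuf parser, so treat it as a rough sanity check only. Passing it does not explain the illegal-address error.

```python
import re
from typing import List


def check_config(text: str) -> List[str]:
    """Hypothetical lint helper for a Triton config.pbtxt string.

    Regex-based sketch, not a real protobuf text-format parser: it only
    catches two simple mistakes and returns a list of problem descriptions.
    """
    problems: List[str] = []

    # Typographic ("smart") quotes are not string delimiters in protobuf
    # text format, so a config containing them will not parse.
    if any(ch in text for ch in "\u201c\u201d\u2018\u2019"):
        problems.append("typographic quotes found; use straight ASCII quotes")

    # Read max_batch_size (treated as 0 if the field is absent).
    m = re.search(r"max_batch_size\s*:\s*(\d+)", text)
    max_bs = int(m.group(1)) if m else 0

    # Every preferred batch size must be <= max_batch_size.
    p = re.search(r"preferred_batch_size\s*:\s*\[([^\]]*)\]", text)
    if p:
        for size in (int(s) for s in p.group(1).split(",") if s.strip()):
            if size > max_bs:
                problems.append(
                    f"preferred_batch_size {size} exceeds max_batch_size {max_bs}"
                )
    return problems


config = """
name: "effdet"
max_batch_size: 64
dynamic_batching {
  preferred_batch_size: [8, 64]
}
"""
print(check_config(config))  # → []
```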

I can successfully run inference with a single instance of my effdet model.

However, setting the number of model instances to anything other than 1, i.e.

    instance_group [
      {
        count: 2  # [or 3, 4, etc.]
        kind: KIND_GPU
      }
    ]

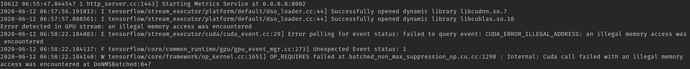

leads to an error CUDA_ERROR_ILLEGAL_ADDRESS: an illegal memory access was encountered.

Refering to the screenshot, can someone please assist me with a solution.
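One way to narrow the failure down is to keep every other field fixed and vary only `count`, restarting the server and rerunning the same client load for each value. This shows whether the crash starts at 2 instances or only at higher counts. The `with_instance_count` helper below is a hypothetical sketch, not a Triton tool. It rewrites the count with a regex and assumes a single `instance_group` entry shaped like the one above.

```python
import re

# Matches the "count: N" field inside the first instance_group [ { ... } ]
# block. Sketch only: assumes the count field sits inside the first group's
# braces, as in the config above.
_COUNT_RE = re.compile(r"(instance_group\s*\[\s*\{[^}]*?\bcount\s*:\s*)\d+")


def with_instance_count(config_text: str, instances: int) -> str:
    """Return a copy of config_text with the instance_group count replaced."""
    new_text, n = _COUNT_RE.subn(
        lambda m: m.group(1) + str(instances), config_text, count=1
    )
    if n == 0:
        raise ValueError("no instance_group count field found")
    return new_text


base = """instance_group [
  {
    count: 1
    kind: KIND_GPU
  }
]
"""

# Generate one variant per instance count; each would be written to the
# model's config.pbtxt before restarting the server and rerunning the client.
for n in (1, 2, 3, 4):
    print(with_instance_count(base, n))
```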

Thank you,

Shubham