Trying to use DLA and GPU concurrently on Jetson NX

**• Hardware Platform = Jetson Xavier NX**

• DeepStream Version = DS-6.0.1

**• JetPack Version = JP-4.2**

• TensorRT Version = 8.2.1.8

**• Issue Type: unclear whether we can use GPU + DLA0 + DLA1 from the same process**

• Requirement details

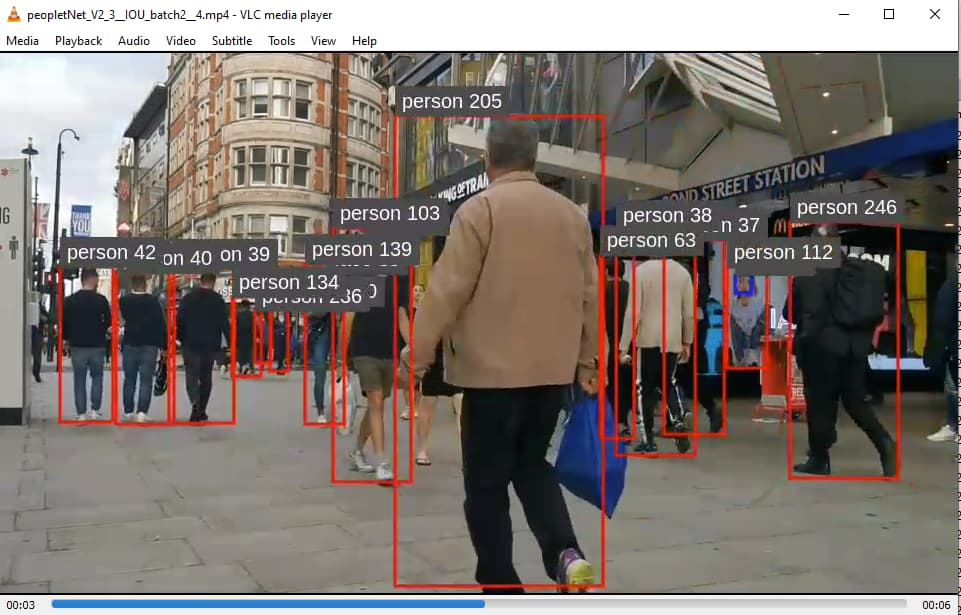

We are trying to run the standard NVIDIA PeopleNet ver. 2.3.2 on an NVIDIA Jetson Xavier NX dev board using the GPU and DLA concurrently.

For this we use deepstream-app with the usual deepstream_Config_file.txt + config_infer_file.txt.

Note that we already use PeopleNet ver. 2.3.2 on the NX in our application, and it works well and correctly on the GPU. However, when we try to activate DLA we see strange behaviour: performance drops by at least a factor of 6 and we get no video output.

In detail: as shown below, we enabled DLA in the [property] group of config_infer_file (the DeepStream documentation does not make clear where DLA should be enabled), and we see that a new DLA engine file is created, completely separate from the GPU engine file.

Question: is it correct to enable DLA in the [property] group?

```
[property]
gpu-id=0
net-scale-factor=0.0039215697906911373
tlt-model-key=tlt_encode
# DLA activation
enable-dla=1
use-dla-core=0
#
tlt-encoded-model=../../models/tao_pretrained_models/peopleNet/V2.3.2/resnet34_peoplenet_pruned_int8_v2_3_2_quantized.etlt
labelfile-path=../../models/tao_pretrained_models/peopleNet/V2.3.2/labels.txt
model-engine-file=../../models/tao_pretrained_models/peopleNet/V2.3.2/resnet34_peoplenet_pruned_int8_v2_3_2_quantized.etlt_b2_dla0_int8.engine
int8-calib-file=../../models/tao_pretrained_models/peopleNet/V2.3.2/resnet34_peoplenet_pruned_int8_v2_3_2_quantized.txt
infer-dims=3;544;960
uff-input-blob-name=input_1
batch-size=2
process-mode=1
model-color-format=0
network-mode=1
num-detected-classes=3
cluster-mode=2
interval=0
gie-unique-id=1
output-blob-names=output_bbox/BiasAdd;output_cov/Sigmoid
```
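A quick way to see why a separate DLA engine file appears: the model-engine-file name above seems to encode the batch size, the target device and the precision (`..._b2_dla0_int8.engine`). The sketch below rebuilds that name from the [property] keys. It assumes the pattern `<model>_b<batch-size>_<gpuN|dlaN>_<precision>.engine`, inferred only from the file name in this post, and the nvinfer `network-mode` mapping 0=FP32, 1=INT8, 2=FP16; `expected_engine_name` is a hypothetical helper, not part of DeepStream.

```python
import configparser
from collections.abc import Mapping

# DLA/engine-related keys copied from the [property] group above.
CONFIG_TEXT = """\
[property]
gpu-id=0
enable-dla=1
use-dla-core=0
tlt-encoded-model=../../models/tao_pretrained_models/peopleNet/V2.3.2/resnet34_peoplenet_pruned_int8_v2_3_2_quantized.etlt
model-engine-file=../../models/tao_pretrained_models/peopleNet/V2.3.2/resnet34_peoplenet_pruned_int8_v2_3_2_quantized.etlt_b2_dla0_int8.engine
batch-size=2
network-mode=1
"""

# nvinfer network-mode values: 0=FP32, 1=INT8, 2=FP16.
PRECISION = {"0": "fp32", "1": "int8", "2": "fp16"}


def expected_engine_name(prop: Mapping[str, str]) -> str:
    """Rebuild the engine file name (assumed pattern, inferred from this post)."""
    if prop.get("enable-dla", "0") == "1":
        device = "dla" + prop.get("use-dla-core", "0")
    else:
        device = "gpu" + prop.get("gpu-id", "0")
    precision = PRECISION[prop.get("network-mode", "0")]
    return f"{prop['tlt-encoded-model']}_b{prop['batch-size']}_{device}_{precision}.engine"


parser = configparser.ConfigParser()
parser.read_string(CONFIG_TEXT)
dla_prop = parser["property"]

# Same config with DLA switched off, to compare the two engine names.
gpu_prop = dict(dla_prop)
gpu_prop["enable-dla"] = "0"

print(expected_engine_name(dla_prop) == dla_prop["model-engine-file"])
print(expected_engine_name(gpu_prop))
```

Under this assumption, the same config with `enable-dla=0` points to a `..._b2_gpu0_int8.engine` file, so the GPU and DLA engines are built and cached as two different files.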

When deepstream-app starts and no DLA engine file is present, it reads the PeopleNet model file resnet34_peoplenet_pruned_int8_v2_3_2_quantized.etlt.

However, while reading the tlt-encoded-model for PeopleNet, the following messages appear, so it seems that PeopleNet's network layers are not supported by DLA:

```
ERROR: Deserialize engine failed because file path: /opt/nvidia/deepstream/deepstream-6.0/samples/configs/tao_pretrained_models/../../models/tao_pretrained_models/peopleNet/V2.3.2/resnet34_peoplenet_pruned_int8_v2_3_2_quantized.etlt_b2_dla0_int8.engine open error
WARNING: [TRT]: Default DLA is enabled but layer output_bbox/bias is not supported on DLA, falling back to GPU.
WARNING: [TRT]: Default DLA is enabled but layer conv1/kernel is not supported on DLA, falling back to GPU.
WARNING: [TRT]: Default DLA is enabled but layer conv1/bias is not supported on DLA, falling back to GPU.
WARNING: [TRT]: Default DLA is enabled but layer bn_conv1/moving_variance is not supported on DLA, falling back to GPU.
WARNING: [TRT]: Default DLA is enabled but layer bn_conv1/Reshape_1/shape is not supported on DLA, falling back to GPU.
WARNING: [TRT]: Default DLA is enabled but layer bn_conv1/batchnorm/add/y is not supported on DLA, falling back to GPU.
WARNING: [TRT]: Default DLA is enabled but layer bn_conv1/gamma is not supported on DLA, falling back to GPU.
WARNING: [TRT]: Default DLA is enabled but layer bn_conv1/Reshape_3/shape is not supported on DLA, falling back to GPU.
WARNING: [TRT]: Default DLA is enabled but layer bn_conv1/beta is not supported on DLA, falling back to GPU.
WARNING: [TRT]: Default DLA is enabled but layer bn_conv1/Reshape_2/shape is not supported on DLA, falling back to GPU.
WARNING: [TRT]: Default DLA is enabled but layer bn_conv1/moving_mean is not supported on DLA, falling back to GPU.
WARNING: [TRT]: Default DLA is enabled but layer bn_conv1/Reshape/shape is not supported on DLA, falling back to GPU.
WARNING: [TRT]: Default DLA is enabled but layer block_1a_conv_1/kernel is not supported on DLA, falling back to GPU.
WARNING: [TRT]: Default DLA is enabled but layer block_1a_conv_1/bias is not supported on DLA, falling back to GPU.
```
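To see which kinds of names the fallback warnings refer to, the log can be tallied. In the excerpt above, many reported names end in /kernel, /bias, /gamma, /beta, /moving_mean or /moving_variance, which look like weight or constant tensors rather than the convolution layers themselves. The sketch below groups them so the full build log can be checked the same way; the regex is based on the exact warning text quoted above, and `fallback_layers` and `tally` are hypothetical helpers. It only summarizes the warnings; it does not show which layers TensorRT finally placed on DLA.

```python
import re
from collections import Counter

# Excerpt of the TensorRT build log quoted above.
LOG = """\
WARNING: [TRT]: Default DLA is enabled but layer output_bbox/bias is not supported on DLA, falling back to GPU.
WARNING: [TRT]: Default DLA is enabled but layer conv1/kernel is not supported on DLA, falling back to GPU.
WARNING: [TRT]: Default DLA is enabled but layer conv1/bias is not supported on DLA, falling back to GPU.
WARNING: [TRT]: Default DLA is enabled but layer bn_conv1/moving_variance is not supported on DLA, falling back to GPU.
WARNING: [TRT]: Default DLA is enabled but layer bn_conv1/Reshape_1/shape is not supported on DLA, falling back to GPU.
WARNING: [TRT]: Default DLA is enabled but layer bn_conv1/gamma is not supported on DLA, falling back to GPU.
WARNING: [TRT]: Default DLA is enabled but layer block_1a_conv_1/kernel is not supported on DLA, falling back to GPU.
"""

# Captures <name> in "... but layer <name> is not supported on DLA, falling back to GPU."
FALLBACK = re.compile(r"layer (\S+) is not supported on DLA, falling back to GPU")


def fallback_layers(log: str) -> list[str]:
    """Names of layers that TensorRT reports as falling back to GPU."""
    return FALLBACK.findall(log)


def tally(layers: list[str]) -> tuple[Counter, Counter]:
    """Count fallbacks per top-level block (e.g. 'bn_conv1') and per last name part (e.g. 'kernel')."""
    by_block = Counter(name.split("/")[0] for name in layers)
    by_leaf = Counter(name.split("/")[-1] for name in layers)
    return by_block, by_leaf


layers = fallback_layers(LOG)
by_block, by_leaf = tally(layers)
print(len(layers), "fallback warnings")
print("per block:", dict(by_block))
print("per name suffix:", dict(by_leaf))
```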

So, my questions:

-) Is it correct that PeopleNet can only run on the GPU and will not work on DLA, because ResNet34 layers such as bn_conv1/xxxxx are not supported on DLA?

In any case:

-) Can a single network model such as PeopleNet ver. 2.3.2 run concurrently on the GPU and on DLA0 and/or DLA1?

If so, how do we declare concurrent use of the GPU and DLA?

-) Or can we only run one network model, such as PeopleNet, on the GPU and a separate network model, such as DashCamNet, on DLA?

If so, do we need two completely separate deepstream_Config_file.txt + config_infer_file.txt pairs, one for PeopleNet and one for DashCamNet?
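For the last option, the layout being asked about is one infer config per target device. The sketch below derives a GPU, DLA0 or DLA1 variant from a single base [property] group, touching only keys already used in the config above (`enable-dla`, `use-dla-core`, `model-engine-file`), so that each variant points to its own engine file. It assumes the engine-name pattern seen in this post; `make_variant`, `BASE`, `model.etlt` and the `config_infer_<device>.txt` names are hypothetical. Whether such per-device nvinfer instances can share one pipeline is exactly the open question here.

```python
import configparser
import io

# Hypothetical base config; the keys mirror the [property] group in this post.
BASE = """\
[property]
gpu-id=0
tlt-encoded-model=model.etlt
batch-size=2
network-mode=1
"""

PRECISION = {"0": "fp32", "1": "int8", "2": "fp16"}  # network-mode values


def make_variant(base_text: str, device: str) -> configparser.ConfigParser:
    """Copy the base config and retarget it to 'gpu', 'dla0' or 'dla1'."""
    cfg = configparser.ConfigParser()
    cfg.optionxform = str  # keep keys exactly as written
    cfg.read_string(base_text)
    prop = cfg["property"]
    if device == "gpu":
        prop["enable-dla"] = "0"
        prop.pop("use-dla-core", None)
        tag = "gpu" + prop["gpu-id"]
    elif device in ("dla0", "dla1"):
        prop["enable-dla"] = "1"
        prop["use-dla-core"] = device[-1]
        tag = device
    else:
        raise ValueError(f"unknown device: {device!r}")
    precision = PRECISION[prop["network-mode"]]
    # Each device gets its own engine file (assumed naming pattern).
    prop["model-engine-file"] = (
        f"{prop['tlt-encoded-model']}_b{prop['batch-size']}_{tag}_{precision}.engine"
    )
    return cfg


for device in ("gpu", "dla0", "dla1"):
    buf = io.StringIO()
    make_variant(BASE, device).write(buf, space_around_delimiters=False)
    print(f"# hypothetical config_infer_{device}.txt")
    print(buf.getvalue())
```

Writing with `space_around_delimiters=False` keeps the `key=value` style used in the original config file.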

Last thing: when deepstream-app runs with the DLA engine, the total throughput is about 25 FPS, as shown below, whereas with the GPU engine it is about 145 FPS.

```
**PERF: 4.68 (3.17) 4.68 (3.18) 4.68 (3.18) 4.68 (3.14) 4.67 (3.15)
**PERF: 4.68 (3.22) 4.68 (3.24) 4.68 (3.30) 4.68 (3.26) 4.68 (3.27)
**PERF: 4.68 (3.33) 4.68 (3.35) 4.68 (3.40) 4.68 (3.37) 4.68 (3.32)
**PERF: 4.68 (3.43) 4.68 (3.44) 4.68 (3.44) 4.68 (3.40) 4.68 (3.42)
**PERF: 4.68 (3.52) 4.68 (3.47) 4.68 (3.52) 4.68 (3.49) 4.68 (3.50)
**PERF: 4.68 (3.54) 4.68 (3.55) 4.68 (3.60) 4.68 (3.57) 4.68 (3.53)
**PERF: 4.67 (3.61) 4.67 (3.63) 4.67 (3.62) 4.67 (3.59) 4.67 (3.60)
```
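The "about 25 FPS" figure can be checked from the PERF lines. Assuming each entry is `current (average)` FPS for one of five streams, the total is the sum of the first numbers: roughly 5 × 4.68 ≈ 23.4 FPS, and 145 / 23.4 ≈ 6.2, which matches the "at least 6 times" drop mentioned earlier. A small sketch of that arithmetic (`total_fps` is a hypothetical helper):

```python
import re

# Two of the PERF lines quoted above (first and last).
PERF_LINES = [
    "**PERF: 4.68 (3.17) 4.68 (3.18) 4.68 (3.18) 4.68 (3.14) 4.67 (3.15)",
    "**PERF: 4.67 (3.61) 4.67 (3.63) 4.67 (3.62) 4.67 (3.59) 4.67 (3.60)",
]

# One "current (average)" pair per stream (assumed meaning of the two numbers).
ENTRY = re.compile(r"([\d.]+) \(([\d.]+)\)")

GPU_TOTAL_FPS = 145.0  # total FPS quoted in the post for the GPU engine


def total_fps(line: str) -> float:
    """Sum the current FPS of every stream in one PERF line."""
    return sum(float(current) for current, _average in ENTRY.findall(line))


for line in PERF_LINES:
    total = total_fps(line)
    print(f"DLA total {total:.2f} FPS, GPU/DLA ratio {GPU_TOTAL_FPS / total:.1f}x")
```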

Question:

-) Is it expected that DLA performance (FPS) is much lower than GPU performance (FPS)?

Thanks for your support,

M.