Seed-Omni-8B was released recently. It is multimodal on both input and output (text/image/audio → text/image/audio), and it autoregressively generates tokens for both audio and image outputs.

I haven’t seen anyone successfully run that model because it requires what seems to be a custom fork of vLLM called OmniServe, and it also requires quite a bit of VRAM. Most people don’t want to go through the hassle, despite how interesting true Omni models can be.

I’ve spent probably 15 hours since yesterday afternoon working on the problem, and I am happy to present an easy-to-use repo: coder543/seed-omni-spark on GitHub.

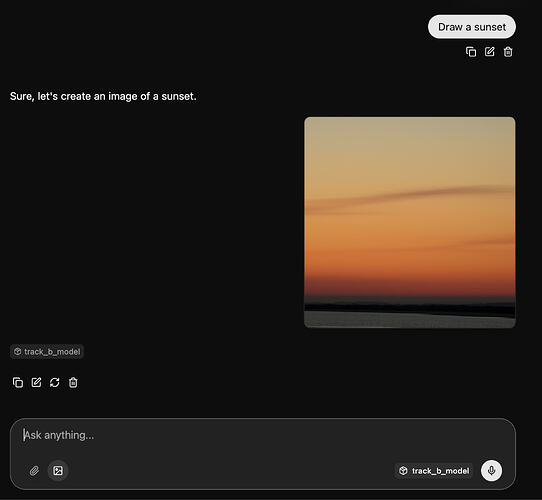

Besides making the model server easy to launch with seed-omni-spark, I have created a fork of llama.cpp’s webui that talks to OmniServe. It lets you upload images and MP3s as inputs and shows you the images and sounds that the model sends back. Without an easy-to-use interface, it would be very difficult to use this model in any capacity. My webui fork uses a proxy to translate requests and responses to and from the format OmniServe expects. The proxy also decodes Seed-Omni-8B’s image and audio tokens into actual images and audio and sends those to the browser.
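To give a feel for what the webui hands to a backend, here is a minimal sketch of packing an uploaded image into an OpenAI-style chat message with an inline data URL. Whether the proxy in this repo accepts exactly this shape is an assumption on my part, and `build_image_message` is a hypothetical helper, not something taken from the repo.

```python
import base64


def build_image_message(image_bytes: bytes, prompt: str, mime: str = "image/png") -> dict:
    """Build an OpenAI-style user message holding text plus one inline image.

    Hypothetical helper: the exact payload the seed-omni-spark proxy expects
    is an assumption, not something confirmed by the repo.
    """
    # Encode the raw bytes so they can travel inside a JSON string.
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": prompt},
            {
                "type": "image_url",
                "image_url": {"url": f"data:{mime};base64,{encoded}"},
            },
        ],
    }
```

In practice the webui does this for you when you use the attachment button, so a helper like this only matters if you want to script requests yourself.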

Clone the repo and run ./start.sh. It will download the necessary models and Docker containers, build OmniServe for DGX Spark, and wait for the containers to become healthy. Once everything is running, visit port 3000 to load the webui and begin chatting with Seed-Omni-8B.
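If you would rather script the "wait, then open port 3000" step than watch the terminal, something like the following could work. It is a minimal sketch, not part of the repo: `wait_for_port` is a hypothetical helper, and it only checks that something accepts TCP connections on the port, which is a weaker signal than the container health checks that start.sh already waits on.

```python
import socket
import time


def wait_for_port(host: str, port: int, timeout: float = 600.0, interval: float = 2.0) -> bool:
    """Return True once a TCP connection to host:port succeeds, False on timeout."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            # A successful connect means something is listening on the port.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            remaining = deadline - time.monotonic()
            if remaining <= 0:
                return False
            # Sleep between attempts, but never past the deadline.
            time.sleep(min(interval, remaining))
```

Calling `wait_for_port("localhost", 3000)` after launching start.sh would block until the webui port opens or ten minutes pass. The hostname assumes you are on the same machine as the containers.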

I am sure there are missing optimizations that could make this go faster, but it runs at 13 tokens per second as-is, which is sufficient for demo purposes.

I hope this project is fun for some other people! If you run into any issues, let me know, but I have already spent hours testing to make sure a fresh clone should start up correctly and easily.

There is one known issue: system prompts. Seed-Omni-8B appears to depend heavily on the system prompt when image generation is required. The webui automatically injects the correct system prompt, but if you then open a new chat, the injected prompt sometimes sticks around and interferes with non-image-generation tasks until you go into webui’s settings and manually delete it. Similarly, image→image requires a different system prompt. The webui is supposed to substitute that one in at the right time, but I never got image→image to work. It probably requires more debugging, but I’m out of energy on this project for today.

Note: to generate an image, you need to turn on the image generation mode, which is controlled by the picture button next to the attachment paperclip. This adjusts the system prompt and attaches the necessary tool to the request.
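For anyone who wants to poke at the stale system prompt issue, here is a minimal sketch of one way the mode switch could be made stateless: rebuild the system prompt and tool list from the current mode on every request instead of relying on what the chat settings still hold. This is not how the repo currently does it. The prompt text, the tool definition, and `prepare_request` are all placeholders I made up for illustration.

```python
# Placeholders: the real prompt and tool definition live in the repo and are
# NOT reproduced here. Both values below are hypothetical.
IMAGE_GEN_PROMPT = "<image-generation system prompt goes here>"
IMAGE_GEN_TOOL = {"type": "function", "function": {"name": "generate_image"}}


def prepare_request(messages: list, image_mode: bool) -> dict:
    """Return a request body whose system prompt and tools match the current mode."""
    # Drop any image prompt injected by an earlier request, but keep
    # system prompts that the user wrote themselves.
    cleaned = [
        m for m in messages
        if not (m.get("role") == "system" and m.get("content") == IMAGE_GEN_PROMPT)
    ]
    body = {"messages": cleaned}
    if image_mode:
        # Image mode: put the image prompt first and attach the tool.
        body["messages"] = [{"role": "system", "content": IMAGE_GEN_PROMPT}] + cleaned
        body["tools"] = [IMAGE_GEN_TOOL]
    return body
```

Because the injected prompt is stripped and re-added on every call, turning image mode off yields a request with no leftover image prompt and no tool attached. An image→image mode could be handled the same way with its own placeholder prompt.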