I created a class wrapping the VPI pyramidal Lucas-Kanade (PLK) optical flow, and I am disappointed by the tracking results. When I track a sparse set of points from an image to itself (zero motion, with `useInitialFlow` set to 0), every single feature point is tracked successfully. However, if I warp the target image ever so slightly (small rotations, translations, shear, etc.), many of the feature points end up far from their starting positions and give garbage solutions. This happens with both the CPU and CUDA backends.
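One way to make "far from their starting positions" measurable is to use the synthetic warp itself as ground truth: map each source point through the same affine transform used to create the second image, then compare that with the tracked position. The sketch below is plain C++ with no VPI calls; the `Pt` and `Affine2D` types and the helper names are hypothetical, and it assumes the transform is the forward mapping from image0 pixel coordinates to image1 pixel coordinates (if your warp routine takes the inverse map, invert it first).

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical 2D point in pixel coordinates (not a VPI type).
struct Pt {
    float x;
    float y;
};

// 2x3 affine transform: [x'; y'] = [a b; c d] * [x; y] + [tx; ty].
struct Affine2D {
    float a, b, tx;
    float c, d, ty;

    Pt apply(const Pt& p) const {
        return {a * p.x + b * p.y + tx, c * p.x + d * p.y + ty};
    }
};

// Rotation by `radians` about (cx, cy), followed by a translation (dx, dy).
// p' = R (p - c) + c + t, so the offset is c - R c + t.
Affine2D make_rotation_translation(float radians, float cx, float cy,
                                   float dx, float dy) {
    const float cs = std::cos(radians);
    const float sn = std::sin(radians);
    return {cs, -sn, cx - (cs * cx - sn * cy) + dx,
            sn,  cs, cy - (sn * cx + cs * cy) + dy};
}

// Per-point endpoint error: distance between each tracked position and the
// position the known warp says the corresponding source point should reach.
std::vector<float> endpoint_errors(const std::vector<Pt>& src,
                                   const std::vector<Pt>& tracked,
                                   const Affine2D& warp) {
    assert(src.size() == tracked.size());
    std::vector<float> err(src.size());
    for (std::size_t i = 0; i < src.size(); ++i) {
        const Pt expected = warp.apply(src[i]);
        err[i] = std::hypot(tracked[i].x - expected.x,
                            tracked[i].y - expected.y);
    }
    return err;
}
```

With a small rotation about the image center, points with an endpoint error well above a pixel or so are genuine tracking failures rather than the expected motion, which separates "the warp moved the point" from "the tracker lost the point".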

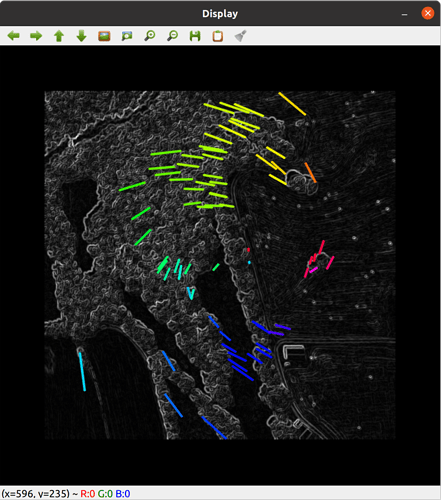

This first image shows the tracked points color coded by angle.

This second image shows the inlier set from an affine transform.

This third image shows the two input images and the diff after warping:

Does anyone else experience this? Are there known bugs in this library? Or, more likely, any thoughts on what I am doing wrong?

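```cpp
#include <exception>

#include <glog/logging.h>  // LOG(ERROR)
#include <vpi/Array.h>
#include <vpi/Image.h>
#include <vpi/Pyramid.h>
#include <vpi/Stream.h>
#include <vpi/algo/ConvertImageFormat.h>
#include <vpi/algo/GaussianPyramid.h>
#include <vpi/algo/OpticalFlowPyrLK.h>

// vpi_check_status() is a helper defined elsewhere in my code; it throws if a
// VPI call returns anything other than VPI_SUCCESS.
static void vpi_track(const VPIImage& i0_wrapper, const VPIImage& i1_wrapper,
                      VPIImage& gray0, VPIImage& gray1, VPIPyramid& pyr0,
                      VPIPyramid& pyr1, VPIArray& pts0, VPIArray& pts1,
                      VPIArray& status, VPIPayload& plk,
                      VPIOpticalFlowPyrLKParams* plk_params, VPIStream& stream,
                      VPIBackend backend) {
  try {
    // Convert both input frames to grayscale
    vpi_check_status(vpiSubmitConvertImageFormat(stream, backend, i0_wrapper,
                                                 gray0, nullptr));
    vpi_check_status(vpiSubmitConvertImageFormat(stream, backend, i1_wrapper,
                                                 gray1, nullptr));

    // Fill a Gaussian pyramid for each frame
    vpi_check_status(vpiSubmitGaussianPyramidGenerator(stream, backend, gray0,
                                                       pyr0, VPI_BORDER_CLAMP));
    vpi_check_status(vpiSubmitGaussianPyramidGenerator(stream, backend, gray1,
                                                       pyr1, VPI_BORDER_CLAMP));

    // Run pyramidal LK: track pts0 (in pyr0) into pts1 (in pyr1) and write
    // a per-point tracking status
    vpi_check_status(vpiSubmitOpticalFlowPyrLK(stream, 0, plk, pyr0, pyr1, pts0,
                                               pts1, status, plk_params));

    // Wait for processing to finish
    vpi_check_status(vpiStreamSync(stream));
  } catch (const std::exception& e) {
    LOG(ERROR) << e.what();
  }
}
```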
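It may also help to split the failures into points the tracker itself flags as lost and points it reports as tracked but places far from the expected position, since the two cases are worth reporting separately. A minimal sketch, assuming the status array and both point arrays have already been copied to host memory (not shown), with one `uint8_t` status per point where 0 means "tracked" (check that convention against the VPI documentation for your version); `summarize_tracking`, `TrackSummary`, and `Pt` are hypothetical names.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical 2D point in pixel coordinates (not a VPI type).
struct Pt {
    float x;
    float y;
};

// Counts for the three outcomes worth distinguishing when debugging a tracker.
struct TrackSummary {
    std::size_t lost = 0;           // status flags the point as not tracked
    std::size_t tracked_ok = 0;     // flagged as tracked, close to expected
    std::size_t tracked_wrong = 0;  // flagged as tracked, but far from expected
};

// ASSUMPTION: status[i] == 0 means "tracked", any nonzero value means "lost".
TrackSummary summarize_tracking(const std::vector<std::uint8_t>& status,
                                const std::vector<Pt>& tracked,
                                const std::vector<Pt>& expected,
                                float max_error_px) {
    assert(status.size() == tracked.size() &&
           tracked.size() == expected.size());
    TrackSummary s;
    for (std::size_t i = 0; i < status.size(); ++i) {
        if (status[i] != 0) {
            ++s.lost;
            continue;
        }
        const float err = std::hypot(tracked[i].x - expected[i].x,
                                     tracked[i].y - expected[i].y);
        if (err <= max_error_px) {
            ++s.tracked_ok;
        } else {
            ++s.tracked_wrong;
        }
    }
    return s;
}
```

A large `tracked_wrong` count means the tracker converged to the wrong place without noticing, while a large `lost` count means it gave up; the expected positions can come from the known synthetic warp.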

I can paste more code if needed, but again, everything is “great” when there is zero motion between image0 and image1.