#include<stdio.h>

#include<gst/gst.h>

#include<gst/base/gstbasesrc.h>

#include<gst/app/gstappsrc.h>

#include<gst/app/gstappsink.h>

#include<gst/gstallocator.h>

#include <math.h>

#include <stdbool.h>

#include <pthread.h>

#include <unistd.h>

#include <time.h>

#include <string.h>

#include <stdlib.h>

/*******************************************************************************

* Const and Macro Defines

******************************************************************************/

#ifdef DEBUG_VID_SER

#define DBG_VGST(fmt, args...) printf("Vid Gst Debug::%s:"fmt"\n", __FUNCTION__, ##args)

#else

#define DBG_VGST(fmt, args...)

#endif

#define ERR_VGST(fmt, args...) printf("Vid Gst ERROR::%s:"fmt"\n", __FUNCTION__, ##args)

#define INFO_VGST(fmt, args...) printf("Vid Gst INFO::%s:"fmt"\n", __FUNCTION__, ##args)

/* Day camera configs */

#define DAYCAM_RTSP_URL "rtsp://192.168.10.13:8554/day"

/* Paths */

#define GALLERY "/log/ftp/"

#define VID_STORE_DIR GALLERY"/VIDEOS/"

#define IMG_STORE_DIR GALLERY"/PHOTOS/"

#define IMG_EXTENTION ".jpeg"

#define VID_EXTENTION ".mp4"

#define DAYCAM_WIDTH 1280

#define DAYCAM_HEIGHT 1024

#define DAYCAM_FRAMERATE 30

/*******************************************************************************

* Typedefs and Enum Declarations

******************************************************************************/

enum errorrr_t{

SUCCESS,

FAILURE,

ERR_GST_PIPELINE_CREATION,

ERR_ALREADY_IN_PROGRESS,

};

/* media type enum */

typedef enum MEDIA_TYPE {

PHOTO = 0,

VIDEO,

} media_type_t;

typedef struct GST_CAPTURE_PIPELINE {

int reference;

GstElement *pipeline;

GstElement *src;

GstElement *rtpdepay;

GstElement *decoder;

GstElement *scale;

GstElement *sink;

} gst_capture_pipeline_t;

typedef struct GST_SNAPSHOT_PIPELINE {

int need_data;

GstClockTime timestamp;

GstElement *pipeline;

GstElement *src;

GstElement *encoder;

GstElement *sink;

} gst_snapshot_pipeline_t;

typedef struct GST_RECORDING_PIPELINE {

int need_data;

int status;

GstClockTime timestamp;

GstElement *pipeline;

GstElement *src;

GstElement *encoder;

GstElement *container;

GstElement *sink;

} gst_rec_pipeline_t;

typedef struct GST_PIPELINE {

gst_capture_pipeline_t capture;

gst_rec_pipeline_t recording;

gst_snapshot_pipeline_t snapshot;

} gst_pipeline_t;

typedef enum RECORDING_STATUS {

RECORDING_STOPPED = 0,

RECORDING_STOP_INITIATED,

RECORDING_STOP_INPROGRESS,

RECORDING_RUNNING,

} recording_status_t;

/*******************************************************************************

* Public Variables

******************************************************************************/

gst_pipeline_t day_pipeline = {0}; /* day camera pipeline */

/*******************************************************************************

* Private Variables

******************************************************************************/

static pthread_t rec_mon_thread_t;

/*******************************************************************************

* Private Prototypes

******************************************************************************/

static int create_gst_capture_pipeline(gst_capture_pipeline_t *capture_pipeline);

static GstFlowReturn capture_new_sample_cb(GstAppSink *capture_sink, gpointer *data);

static int start_capture_pipeline(gst_capture_pipeline_t *capture);

static int stop_capture_pipeline(gst_capture_pipeline_t *capture);

static int take_snapshot(gst_pipeline_t *source);

static int init_snap_pipeline(gst_snapshot_pipeline_t *snapshot);

static int start_recording(gst_pipeline_t *pipeline);

static int init_rec_pipeline(gst_rec_pipeline_t *recording);

static void *rec_mon_loop(void *p);

static int stop_recording(gst_rec_pipeline_t *rec);

/*******************************************************************************

* Public Functions

******************************************************************************/

void gst_video_init(int *argc, char ***argv)

{

gst_init(argc,argv);

}

/*******************************************************************************

* @brief start or stop recording video

*

* This function will start/stop the recording of input video.

*

* @param[in] enable enable or disable the recording.

*

* @return SUCCESS/FAILURE

******************************************************************************/

int set_video_recording(int enable)

{

int ret;

if (enable) {

ret = start_recording(&day_pipeline);

} else {

ret = stop_recording(&(day_pipeline.recording));

}

return ret;

}

/*******************************************************************************

* @brief take snapshot

*

* This function will take snapshot of the day camera pipeline.

*

* @return SUCCESS/FAILURE

******************************************************************************/

int take_pipeline_snapshot(void)

{

int ret;

ret = take_snapshot(&day_pipeline);

return ret;

}

/*******************************************************************************

* Private Functions

******************************************************************************/

/*******************************************************************************

* @brief create video capture pipeline

*

* This function creates a pipeline that captures video from the RTSP stream

* and delivers it to an appsink, so that the frames can be forwarded to the

* next pipeline (recording or snapshot).

*

* @param[out] capture_pipeline pointer to the capture pipeline instance

* whose gst elements are to be initialised.

*

* @return SUCCESS/FAILURE

******************************************************************************/

static int create_gst_capture_pipeline(gst_capture_pipeline_t *capture_pipeline)

{

char pipeline_str[4096]={0};

GError *error = NULL;

sprintf(pipeline_str, "rtspsrc latency=0 name=capture_src is-live=true ! "

"rtph264depay name=rtpdecoder ! queue ! nvv4l2decoder name=nvdecoder ! "

"video/x-raw(memory:NVMM) ! nvvideoconvert name=scale ! appsink name=capture_sink");

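capture_pipeline->pipeline = gst_parse_launch(pipeline_str, &error);

if (capture_pipeline->pipeline == NULL) {

ERR_VGST("Pipeline Creation Failed : %s", error ? error->message : "unknown error");

g_clear_error(&error);

return FAILURE;

} else if (error != NULL) {

/* gst_parse_launch can return a pipeline and still report a recoverable error */

INFO_VGST("Pipeline Creation Warning : %s", error->message);

g_clear_error(&error);

}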

/* Store Pipeline confs */

capture_pipeline->src = gst_bin_get_by_name(GST_BIN(capture_pipeline->pipeline),"capture_src");

capture_pipeline->rtpdepay = gst_bin_get_by_name(GST_BIN(capture_pipeline->pipeline),"rtpdecoder");

capture_pipeline->decoder = gst_bin_get_by_name(GST_BIN(capture_pipeline->pipeline),"nvdecoder");

capture_pipeline->scale = gst_bin_get_by_name(GST_BIN(capture_pipeline->pipeline), "scale");

capture_pipeline->sink = gst_bin_get_by_name(GST_BIN(capture_pipeline->pipeline),"capture_sink");

/* configure rtsp source */

g_object_set(G_OBJECT(capture_pipeline->src), "location", DAYCAM_RTSP_URL, NULL);

/* configure nv decoder */

g_object_set(G_OBJECT(capture_pipeline->decoder),

"num-extra-surfaces", 0,

"enable-max-performance", 1,

"discard-corrupted-frames", 1,

NULL);

/* configure appsink */

g_object_set(G_OBJECT(capture_pipeline->sink),

"sync", 0,

"emit-signals", 1,

"max-buffers", 1,

"drop", 1,

NULL);

/* connect new-sample callback so gst buffer can be pushed to next

* demanding source */

g_signal_connect(capture_pipeline->sink, "new-sample", G_CALLBACK(capture_new_sample_cb), &day_pipeline);

/* release the element references that are no longer needed; scale is kept
 * and released in stop_capture_pipeline() */

gst_object_unref(capture_pipeline->src);

gst_object_unref(capture_pipeline->rtpdepay);

gst_object_unref(capture_pipeline->decoder);

gst_object_unref(capture_pipeline->sink);

DBG_VGST("Day Camera Capture Pipeline Created");

return SUCCESS;

}

/* GStreamer appsink callback invoked for every new sample */

static GstFlowReturn capture_new_sample_cb(GstAppSink *capture_sink, gpointer *data)

{

GstSample *sample;

GstFlowReturn ret;

GstBuffer *video_buffer;

gst_pipeline_t *pipeline = (gst_pipeline_t *)data;

/* Retrieve the buffer */

sample = gst_app_sink_pull_sample(capture_sink);

if (sample) {

video_buffer = gst_sample_get_buffer(sample);

GST_BUFFER_DURATION(video_buffer) = gst_util_uint64_scale_int(1, GST_SECOND, DAYCAM_FRAMERATE);

/* Create copy of input buffer for pushing it to recording and

* snapshot pipeline */

if (pipeline->snapshot.need_data) {

GstBuffer *buffer_temp;

GstSample *sample_temp;

/* deep copy input buffer to output */

buffer_temp = gst_buffer_copy_deep(video_buffer);

GST_BUFFER_PTS(buffer_temp) = pipeline->snapshot.timestamp;

GST_BUFFER_DURATION(buffer_temp) = gst_util_uint64_scale_int(1, GST_SECOND, DAYCAM_FRAMERATE);

/* Create gst sample with incoming gst buffer's caps */

sample_temp = gst_sample_new(buffer_temp, gst_sample_get_caps(sample), NULL, NULL);

g_signal_emit_by_name(pipeline->snapshot.src, "push-sample", sample_temp, &ret);

pipeline->snapshot.timestamp += GST_BUFFER_DURATION(buffer_temp);

gst_sample_unref(sample_temp);

gst_buffer_unref(buffer_temp);

}

if (pipeline->recording.need_data) {

GstBuffer *buffer_temp;

GstSample *sample_temp;

/* deep copy input buffer to output */

buffer_temp = gst_buffer_copy_deep(video_buffer);

GST_BUFFER_PTS(buffer_temp) = pipeline->recording.timestamp;

GST_BUFFER_DURATION(buffer_temp) = gst_util_uint64_scale_int(1, GST_SECOND, DAYCAM_FRAMERATE);

/* Create gst sample with incoming gst buffer's caps */

sample_temp = gst_sample_new(buffer_temp, gst_sample_get_caps(sample), NULL, NULL);

g_signal_emit_by_name(pipeline->recording.src, "push-sample", sample_temp, &ret);

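pipeline->recording.timestamp += GST_BUFFER_DURATION(buffer_temp);

/* push-sample does not take ownership, so release the sample as the snapshot branch does */

gst_sample_unref(sample_temp);

gst_buffer_unref(buffer_temp);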

}

gst_sample_unref (sample);

ret = GST_FLOW_OK;

return ret;

}

return GST_FLOW_ERROR;

}

static int start_capture_pipeline(gst_capture_pipeline_t *capture)

{

GstStateChangeReturn ret = 0;

/* if refcount is zero the pipeline should be started */

if (capture->reference == 0) {

/* Create pipeline before making it into playing state */

if (create_gst_capture_pipeline(capture) != SUCCESS) {

ERR_VGST("Failed to create day camera pipeline");

return FAILURE;

}

ret = gst_element_set_state(capture->pipeline, GST_STATE_PLAYING);

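if (ret == GST_STATE_CHANGE_FAILURE) {

ERR_VGST("Failed to set capture pipeline to playing state");

/* release the pipeline created above so a later start can retry cleanly */

gst_element_set_state(capture->pipeline, GST_STATE_NULL);

gst_object_unref(capture->scale);

gst_object_unref(capture->pipeline);

capture->scale = NULL;

capture->pipeline = NULL;

return FAILURE;

}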

capture->reference++;

/* In case of pipeline already running increase the refcount */

} else if (capture->reference > 0) {

capture->reference++;

}

return SUCCESS;

}

static int stop_capture_pipeline(gst_capture_pipeline_t *capture)

{

GstStateChangeReturn ret = 0;

if (capture->reference == 1) {

DBG_VGST("Killing Capture Pipeline");

ret = gst_element_set_state(capture->pipeline, GST_STATE_NULL);

if (ret == GST_STATE_CHANGE_FAILURE) {

ERR_VGST("Failed to set capture pipeline to stopped state");

return FAILURE;

}

gst_object_unref(capture->pipeline);

gst_object_unref(capture->scale);

capture->pipeline = NULL;

capture->scale = NULL;

capture->reference--;

} else if (capture->reference > 1) {

capture->reference--;

}

return SUCCESS;

}

/*******************************************************************************

* Recording And Snapshot Related Apis

******************************************************************************/

void get_formatted_filename(int media_file_type, char *buff)

{

static int photo_counter = 0;

static int video_counter = 0;

if (media_file_type == PHOTO) {

photo_counter++;

/* GALLERY already ends with '/', so no extra separator is needed */

sprintf(buff, "%s%d%s", GALLERY, photo_counter, IMG_EXTENTION);

} else {

video_counter++;

sprintf(buff, "%s%d%s", GALLERY, video_counter, VID_EXTENTION);

}

return;

}

/*******************************************************************************

* @brief take snapshot

*

* This function will take a snapshot of the video.

*

* @param[in] source pipeline to be used for snapshot.

*

* @return SUCCESS/FAILURE

******************************************************************************/

static int take_snapshot(gst_pipeline_t *source)

{

GstBus *bus;

GstMessage *msg;

int ret = SUCCESS;

if (init_snap_pipeline(&(source->snapshot)) == SUCCESS) {

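if (start_capture_pipeline(&(source->capture)) != SUCCESS) {

/* without a running capture pipeline no frame will ever arrive */

gst_object_unref(source->snapshot.src);

gst_object_unref(source->snapshot.pipeline);

return FAILURE;

}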

gst_element_set_state(source->snapshot.pipeline, GST_STATE_PLAYING);

source->snapshot.need_data = 1;

/* Wait for the end of stream or an error */

bus = gst_element_get_bus(source->snapshot.pipeline);

msg = gst_bus_timed_pop_filtered(bus, GST_CLOCK_TIME_NONE, GST_MESSAGE_ERROR | GST_MESSAGE_EOS);

source->snapshot.need_data = 0;

if (msg) {

if (GST_MESSAGE_TYPE(msg) == GST_MESSAGE_ERROR) {

GError *err;

gchar *debug_info;

gst_message_parse_error(msg, &err, &debug_info);

g_printerr("Error received from element %s: %s\n", GST_OBJECT_NAME(msg->src), err->message);

g_printerr("Debugging information: %s\n", debug_info ? debug_info : "none");

g_error_free(err);

g_free(debug_info);

ret = FAILURE;

}

gst_message_unref(msg);

}

gst_object_unref(bus);

/* deinit the snapshot pipeline */

gst_element_set_state(source->snapshot.pipeline, GST_STATE_NULL);

gst_object_unref(source->snapshot.pipeline);

gst_object_unref(source->snapshot.src);

INFO_VGST("Snapshot Done!");

stop_capture_pipeline(&(source->capture));

return ret;

}

return FAILURE;

}

/*******************************************************************************

* @brief create snapshot pipeline

*

* This function will create snapshot pipeline for day camera.

*

* @param[in] snapshot gst_snapshot_pipeline_t to which the element references

* is to be saved.

*

* @return SUCCESS/FAILURE

******************************************************************************/

static int init_snap_pipeline(gst_snapshot_pipeline_t *snapshot)

{

char snap_pipeline_str[300] = {0};

char jpeg_file_name[300] = {0};

snapshot->timestamp = 0;

sprintf(snap_pipeline_str, "appsrc name=snapsrc is-live=true num-buffers=1 caps=\"video/x-raw(memory:NVMM)\" ! nvjpegenc ! filesink name=snapsink sync=false");

snapshot->pipeline = gst_parse_launch(snap_pipeline_str, NULL);

if (!snapshot->pipeline) {

ERR_VGST("snapshot pipeline creation failed");

return ERR_GST_PIPELINE_CREATION;

}

snapshot->src = gst_bin_get_by_name(GST_BIN(snapshot->pipeline), "snapsrc");

gst_util_set_object_arg(G_OBJECT(snapshot->src), "format", "time");

/* File to which snapshot is to be saved */

get_formatted_filename(PHOTO, jpeg_file_name);

snapshot->sink = gst_bin_get_by_name(GST_BIN(snapshot->pipeline), "snapsink");

g_object_set(snapshot->sink, "location", jpeg_file_name, NULL);

gst_object_unref(snapshot->sink);

return SUCCESS;

}

/*******************************************************************************

* @brief start recording

*

* This function will start recording the file in mp4 file format.

*

* @param[in] pipeline gst_pipeline_t containing recording pipeline

*

* @return SUCCESS/FAILURE

******************************************************************************/

static int start_recording(gst_pipeline_t *pipeline)

{

DBG_VGST("Recording Starting");

if (pipeline->recording.status != RECORDING_STOPPED) {

ERR_VGST("Recording Already Running, Please Stop to Continue");

return ERR_ALREADY_IN_PROGRESS;

}

if (init_rec_pipeline(&(pipeline->recording)) != SUCCESS) {

ERR_VGST("Failed to Init Recording Pipeline");

return FAILURE;

}

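int capture_started = (start_capture_pipeline(&(pipeline->capture)) == SUCCESS);

if (!capture_started ||

pthread_create(&rec_mon_thread_t, NULL, rec_mon_loop, (void *)pipeline) != 0) {

ERR_VGST("Failed to start capture pipeline or recording monitor thread");

/* undo init_rec_pipeline() so a later start is not rejected as already running */

pipeline->recording.need_data = 0;

if (capture_started) {

stop_capture_pipeline(&(pipeline->capture));

}

gst_element_set_state(pipeline->recording.pipeline, GST_STATE_NULL);

gst_object_unref(pipeline->recording.src);

gst_object_unref(pipeline->recording.pipeline);

pipeline->recording.status = RECORDING_STOPPED;

return FAILURE;

}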

DBG_VGST("Recording Started");

return SUCCESS;

}

/* callback for need-data signal */

static void rec_need_data(GstElement * appsrc, guint length, gpointer udata)

{

gst_rec_pipeline_t *rec_pipeline = (gst_rec_pipeline_t *)udata;

rec_pipeline->need_data = 1;

}

/* callback for enough-data signal */

static void rec_enough_data(GstElement * appsrc, gpointer udata)

{

gst_rec_pipeline_t *rec_pipeline = (gst_rec_pipeline_t *)udata;

rec_pipeline->need_data = 0;

}

/*******************************************************************************

* @brief create recording pipeline

*

* This function will create recording pipeline for day camera.

*

* @param[in] recording gst_rec_pipeline_t to which the element references

* is to be saved.

*

* @return SUCCESS/FAILURE

******************************************************************************/

static int init_rec_pipeline(gst_rec_pipeline_t *recording)

{

char rec_pipeline_str[300] = {0};

char vid_file_name[300] = {0};

recording->timestamp = 0;

sprintf(rec_pipeline_str, "appsrc name=recsrc is-live=true ! queue ! nvvidconv ! nvv4l2h264enc name=recenc ! h264parse ! queue ! qtmux ! filesink name=recsink location=/tmp/rec.mp4 sync=false");

recording->pipeline = gst_parse_launch(rec_pipeline_str, NULL);

if (!recording->pipeline) {

ERR_VGST("recording pipeline creation failed");

return ERR_GST_PIPELINE_CREATION;

}

recording->src = gst_bin_get_by_name(GST_BIN(recording->pipeline), "recsrc");

gst_util_set_object_arg(G_OBJECT(recording->src), "format", "time");

/* install the callback that will be called when a buffer is needed */

g_signal_connect (recording->src, "need-data", (GCallback) rec_need_data, recording);

/* install the callback that will be called when a buffer has enough data */

g_signal_connect (recording->src, "enough-data", (GCallback) rec_enough_data, recording);

/* File to which recording is to be saved */

get_formatted_filename(VIDEO, vid_file_name);

recording->sink = gst_bin_get_by_name(GST_BIN(recording->pipeline), "recsink");

g_object_set(recording->sink, "location", vid_file_name, NULL);

gst_object_unref(recording->sink);

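if (gst_element_set_state(recording->pipeline, GST_STATE_PLAYING) == GST_STATE_CHANGE_FAILURE) {

ERR_VGST("Failed to set recording pipeline to playing state");

/* drop the references taken above; nothing else frees them on this path */

gst_element_set_state(recording->pipeline, GST_STATE_NULL);

gst_object_unref(recording->src);

gst_object_unref(recording->pipeline);

recording->src = NULL;

recording->pipeline = NULL;

return FAILURE;

}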

recording->status = RECORDING_RUNNING;

return SUCCESS;

}

static int stop_recording(gst_rec_pipeline_t *rec)

{

if (rec->status == RECORDING_RUNNING) {

rec->status = RECORDING_STOP_INITIATED;

} else {

ERR_VGST("Unable to stop recording");

return FAILURE;

}

return SUCCESS;

}

static void send_eos_wait_for_completion(GstElement *pipeline)

{

GstClockTime timeout = 10*GST_SECOND;

GstMessage *msg = NULL;

gst_element_send_event(pipeline, gst_event_new_eos());

/* wait for EOS (blocks) */

msg = gst_bus_timed_pop_filtered (GST_ELEMENT_BUS (pipeline),

timeout, GST_MESSAGE_EOS | GST_MESSAGE_ERROR);

if (msg == NULL) {

g_warning ("No EOS received within 10 seconds!");

} else if (GST_MESSAGE_TYPE (msg) == GST_MESSAGE_ERROR) {

GError *err;

gchar *debug_info;

gst_message_parse_error(msg, &err, &debug_info);

g_printerr("Error received from element %s: %s\n", GST_OBJECT_NAME(msg->src), err->message);

g_printerr("Debugging information: %s\n", debug_info ? debug_info : "none");

g_error_free(err);

g_free(debug_info);

}

if (msg)

gst_message_unref (msg);

/* shutdown pipeline and free pipeline */

gst_element_set_state (pipeline, GST_STATE_NULL);

}

static int kill_recording_pipeline(gst_rec_pipeline_t *recording)

{

DBG_VGST("Killing Recording Pipeline");

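/* ask the capture callback to stop pushing into recsrc before it is torn down */

recording->need_data = 0;

send_eos_wait_for_completion(recording->pipeline);

gst_object_unref(recording->src);

gst_object_unref(recording->pipeline);

recording->src = NULL;

recording->pipeline = NULL;

recording->status = RECORDING_STOPPED;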

return SUCCESS;

}

static void *rec_mon_loop(void *p)

{

pthread_detach(pthread_self());

gst_pipeline_t *pipeline = (gst_pipeline_t *)p;

while (1) {

if (pipeline->recording.status == RECORDING_STOP_INITIATED) {

pipeline->recording.status = RECORDING_STOP_INPROGRESS;

kill_recording_pipeline(&(pipeline->recording));

stop_capture_pipeline(&(pipeline->capture));

INFO_VGST("Recording stopped");

break;

}

usleep(200000);

}

return NULL;

}

int main(int argc, char *argv[])

{

char cmd;

int recording = 0;

gst_video_init(&argc, &argv);

printf("Day Camera Video Capture System\n");

printf("Commands:\n");

printf(" r - Toggle recording\n");

printf(" s - Take snapshot\n");

printf(" q - Quit\n");

while (1) {

printf("Enter command: ");

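int ch = getchar();

/* treat end of input as a quit request instead of spinning on EOF */

cmd = (ch == EOF) ? 'q' : (char)ch;

/* discard the rest of the line, if any */

while (ch != '\n' && ch != EOF) {

ch = getchar();

}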

if (cmd == 'r') {

if (!recording) {

if (set_video_recording(1) == SUCCESS) {

printf("Recording started.\n");

recording = 1;

} else {

printf("Failed to start recording.\n");

}

} else {

if (set_video_recording(0) == SUCCESS) {

printf("Recording stopped.\n");

recording = 0;

} else {

printf("Failed to stop recording.\n");

}

}

} else if (cmd == 's') {

if (take_pipeline_snapshot() == SUCCESS) {

printf("Snapshot taken.\n");

} else {

printf("Failed to take snapshot.\n");

}

} else if (cmd == 'q') {

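if (recording) {

if (set_video_recording(0) == SUCCESS) {

/* the monitor thread finalises the MP4; wait for it before the process exits */

while (day_pipeline.recording.status != RECORDING_STOPPED) {

usleep(100000);

}

}

}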

printf("Exiting.\n");

break;

} else {

printf("Unknown command.\n");

}

}

return 0;

}

This is the full source code.
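
One thing I am unsure about in the code above: `recording.status` is shared between the command thread and the monitor thread, and `need_data` is set from the appsrc signal callbacks, all as plain `int` fields. One possible way to make the stop handshake well defined is a C11 atomic with compare-and-exchange. The following is only a sketch under that assumption, not a drop-in patch; `rec_state_t`, `rec_transition`, and the `REC_*` constants are hypothetical names that merely mirror `recording_status_t`.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical constants mirroring recording_status_t from the listing. */
enum { REC_STOPPED, REC_STOP_INITIATED, REC_STOP_INPROGRESS, REC_RUNNING };

/* Hypothetical replacement for the plain `int status` field. */
typedef struct {
    atomic_int status;
} rec_state_t;

/* Move from `from` to `to` only if the state is still `from`.
 * Returns true when this caller performed the transition, so two
 * threads can never both believe they initiated the same stop. */
static bool rec_transition(rec_state_t *s, int from, int to)
{
    assert(s != NULL);
    int expected = from;
    return atomic_compare_exchange_strong(&s->status, &expected, to);
}
```

With something like this, `stop_recording` would call `rec_transition(&state, REC_RUNNING, REC_STOP_INITIATED)` and the monitor loop would call `rec_transition(&state, REC_STOP_INITIATED, REC_STOP_INPROGRESS)` instead of comparing and assigning in two separate steps.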

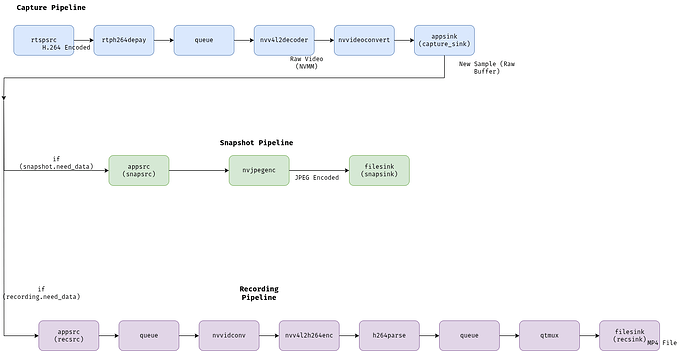

I am attaching the GStreamer pipeline flow diagram for the application.
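
For the hand-off from the capture pipeline to the recording pipeline, the callback stamps each copied buffer by adding a fixed 1/30 s duration to a running timestamp. Since one second is not a whole multiple of 30 in nanoseconds, the truncated duration accumulates a small error. A minimal sketch of deriving the PTS from a frame counter instead, in plain C without GStreamer; `frame_pts_ns`, `accumulated_pts_ns`, and `NSEC_PER_SEC` are hypothetical names and not part of the code above.

```c
#include <assert.h>
#include <stdint.h>

/* Nanoseconds per second; same value as GStreamer's GST_SECOND. */
#define NSEC_PER_SEC UINT64_C(1000000000)

/* Hypothetical helper: PTS of frame number `index` at `fps` frames per
 * second. Multiplying before dividing keeps the truncation error below
 * 1 ns in total instead of letting it add up frame by frame.
 * (The product overflows only after roughly 1.8e10 frames.) */
static uint64_t frame_pts_ns(uint64_t index, unsigned int fps)
{
    assert(fps > 0);
    return index * NSEC_PER_SEC / fps;
}

/* What the running-sum approach yields after `count` frames, for comparison. */
static uint64_t accumulated_pts_ns(uint64_t count, unsigned int fps)
{
    assert(fps > 0);
    return count * (NSEC_PER_SEC / fps);
}
```

At 30 fps the running sum is 10 ns short after one second of video, which is tiny, so this is probably not the cause of any visible problem. In the callback the same idea could be expressed as `gst_util_uint64_scale_int(frame_index, GST_SECOND, DAYCAM_FRAMERATE)` with a per-pipeline frame counter, which is an assumption about how one might restructure it rather than something the current code does.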