As the original discoverer/reporter of this issue, I figured I should chime in since it’s been a while since I’ve commented:

I’m on driver 530.43.01 and the issue actually IS still fixed, albeit in my opinion only partially. What I mean is that the GPU should not force itself to the highest power state at all times just because “Prefer Maximum Performance” is the active PowerMizer mode. On my system with 530.43.01 (and 525), power draw is pegged at 110W or more 100% of the time if I have “Prefer Maximum Performance” set as the active mode.

To refresh the memory of anyone who needs it, I’m running an RTX 3090 with 2x 2560x1440 165Hz monitors that are the exact same model (so no “one model is running at 165.00Hz while the other is at 164.80Hz” or anything like that).

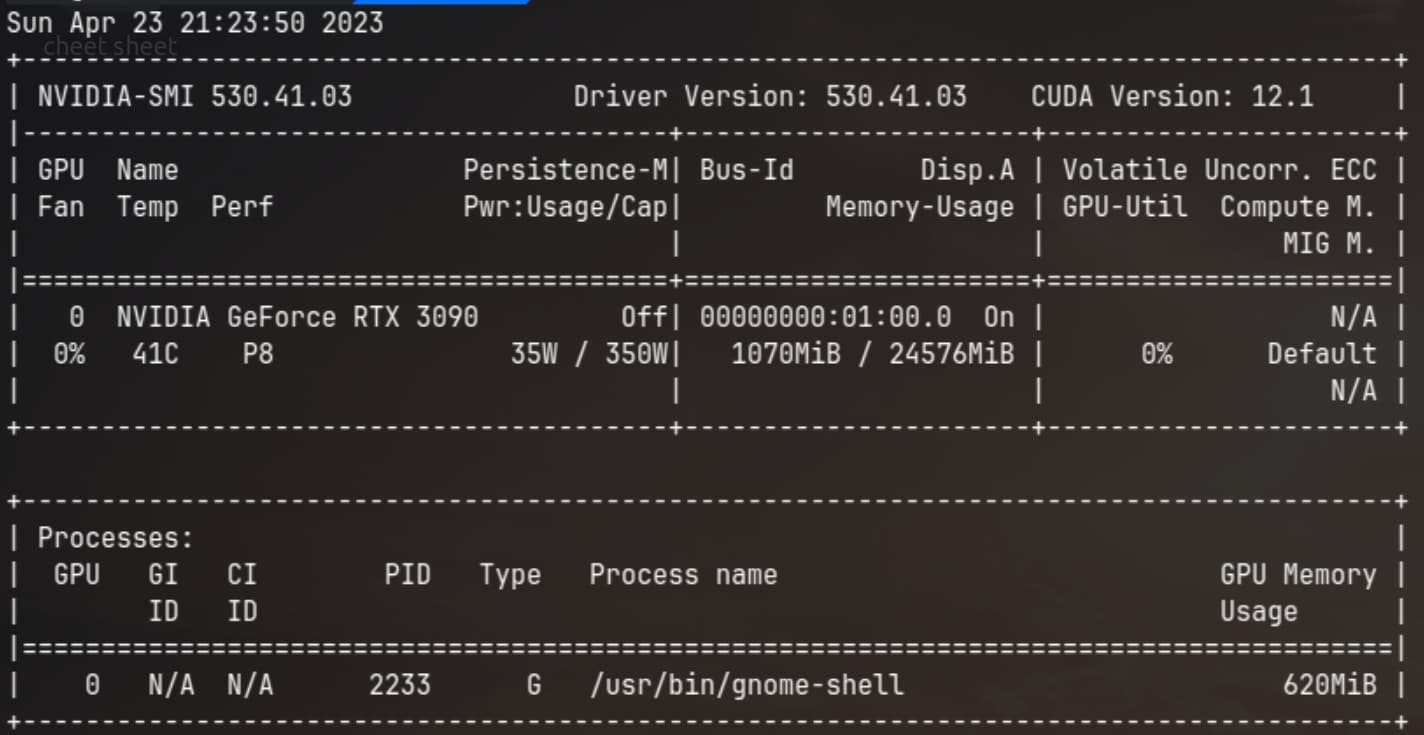

BUT, when I go into the X Server Settings and change the PowerMizer Mode to Adaptive or Auto, the bug is gone and I’m back down to power usage in the 40W range (assuming I’m not using anything with GPU acceleration like a browser, but even then it “idles” in the 70W range):

I’m not sure why no one else seems to be able to achieve this result on the 530 drivers, but I have reproduced it several times, across multiple kernels, and it is now 100% correlated with the PowerMizer mode (back when I reported this and until very recently, power usage was over 100W at all times regardless of PM mode).

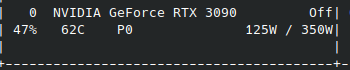
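For anyone who wants to check the same correlation on their own system, here is a rough sketch (not something from my actual setup) that samples power draw through `nvidia-smi` and summarizes it. It assumes `nvidia-smi` is on your PATH and that your card reports `power.draw` as a plain number. The function names (`parse_power_watts`, `summarize`, `sample_power`) are made up for illustration.

```python
import statistics
import subprocess
import time

# nvidia-smi query that prints one bare number (watts) per GPU, one per line.
QUERY = ["nvidia-smi", "--query-gpu=power.draw", "--format=csv,noheader,nounits"]


def parse_power_watts(output: str) -> list[float]:
    """Turn nvidia-smi CSV output (one line per GPU) into a list of watts."""
    return [float(line.strip()) for line in output.splitlines() if line.strip()]


def summarize(samples: list[float]) -> tuple[float, float, float]:
    """Return (min, mean, max) of the collected samples."""
    return min(samples), statistics.mean(samples), max(samples)


def sample_power(n: int = 30, interval: float = 1.0, gpu_index: int = 0) -> list[float]:
    """Poll nvidia-smi n times, interval seconds apart, for a single GPU.

    Assumption: the driver reports a numeric power.draw; a value such as
    "[N/A]" would raise ValueError here.
    """
    samples = []
    for _ in range(n):
        out = subprocess.run(QUERY, capture_output=True, text=True, check=True).stdout
        samples.append(parse_power_watts(out)[gpu_index])
        time.sleep(interval)
    return samples
```

Run `summarize(sample_power())` once with “Prefer Maximum Performance” active and once with Adaptive or Auto, with the desktop otherwise idle, and compare the two results. If your numbers look like mine, the first should sit above 100W and the second should be much lower.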

On a side note, @kodatarule I’ve known you from the various forums and spoken to you periodically for years now, but for the life of me I can’t recall you ever telling me your exact HW setup. The reason I’m asking is that your screenshots are alarming when it comes to temperatures. We both have 3090s, and my GPU runs at around 38-44C with the fans running at 44%. I’ve had this same fan curve for years (I got my card in person at Micro Center on launch day, if you remember me mentioning that), and it’s not like I have one of those INSANELY huge-cooler cards or an AIO. I have an EVGA XC3 Ultra, and honestly it’s only slightly bigger than my Gigabyte Gaming OC RX 5700 XT. So it’s not like I have some amazing cooling solution, I just have a quite good case with good airflow (Phanteks Eclipse P500A).

And that temperature range I gave you was the same even when idle power was always over 100W. And I don’t live in some frigid region, I live in Appalachia and my PC is in a bedroom that is usually slightly above room temperature (because of the PC).

So like, do you have a small form factor build, or a glass-fronted case or something? Because if we have the same GPU (a 3090) and our fans are running at the same load (45%-ish), why are your temperatures 10-15C higher than mine? I’m just concerned there might be a problem with your GPU’s heatsink contact/thermal solution or something.

What are your max temps in games? I only hit 70C when gaming for extended periods during the warm seasons; usually I max out around 64C, even in games like Cyberpunk.

Anyway, if you wanna talk more about that issue and compare notes, you can DM me on reddit or discord or something so we don’t clutter this thread (you know my reddit username is also gardotd426, I’m sure).