Sorry if this is the wrong forum; it seemed like the most applicable one.

While optimizing a compute shader, I wanted to replace some texture sampling calls (previously textureGrad/SampleLevel) with gather4, since I didn't need any actual filtering and had read recommendations to use it a couple of times. At worst I expected it to be a no-op, but in practice, for a compute shader running at 4K (3840 x 2160), it adds almost 200 ms to a runtime that was previously ~1.2 ms.
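A quick back-of-the-envelope check of the numbers above. It assumes one compute-shader invocation per pixel (the dispatch layout isn't stated in the post) and treats "almost 200 ms" as a round 200 ms. The per-invocation figure is amortized wall-clock time, not the latency of any single thread, since the GPU runs many invocations in parallel.

```python
# Rough scale of the regression, assuming one invocation per 4K pixel.
WIDTH, HEIGHT = 3840, 2160
BASELINE_MS = 1.2   # runtime before switching to gather4
EXTRA_MS = 200.0    # "almost 200 ms" added, rounded up

invocations = WIDTH * HEIGHT
# Amortized extra wall-clock time per invocation (not per-thread latency).
extra_ns_per_invocation = EXTRA_MS * 1e6 / invocations
slowdown = (BASELINE_MS + EXTRA_MS) / BASELINE_MS

print(f"{invocations:,} invocations")
print(f"~{extra_ns_per_invocation:.1f} ns extra per invocation (amortized)")
print(f"~{slowdown:.0f}x slower overall")
```

This prints 8,294,400 invocations, ~24.1 ns of amortized extra time per invocation, and a ~168x overall slowdown.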

I tried both a version that actually uses all four samples and averages them and one that just hard-picks one of the samples; it makes no difference.

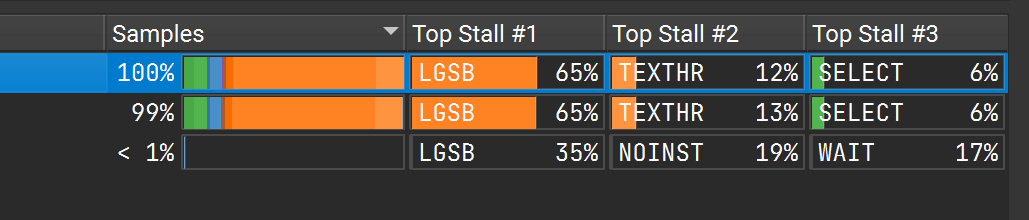

I can't share code, at least publicly, but here's what the shader profiler's sample sections show:

Without gather:

With gather:

Also, some metrics from the event itself:

(It appears I can only put three links in a post, so here's the Imgur album. The very last image is without gather; the second one from the start is with gather.)

So there is a small shift toward more long-scoreboard stalls and a small improvement in other metrics, but it makes a big difference in absolute terms.

I should perhaps add that the texture accesses in question use noise-based texture coordinates for a filter kernel, so they aren't particularly coherent. However, the metrics suggest that cache hit rates are already pretty good (perhaps because the filter sizes are small enough).

Other parts of the code fetch texture memory too, but most of those accesses are highly coherent, and about 90% of the stalls come from the small part of the code with noise-based texture access. These are also dependent texture reads (their coordinates rely on the results of an earlier texture fetch), if that makes any difference.

What could be going on here? I thought gather4 was mostly free if you don't need the filtering that the texture sample functions provide. Could it cause fetches from cache lines that were previously untouched?
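To make the cache-line question concrete, here is a toy Python model. It is not a description of how NVIDIA hardware actually lays out or caches textures. It assumes the old SampleLevel path used point filtering, clamp addressing, a single mip level, and a hypothetical 4x4-texel cache tile (`TILE`). It then compares the texels and tiles touched by point-sampled taps with the 2x2 footprint a Gather returns for the same noisy coordinates. The kernel radius, tap count, and seed are made up for illustration.

```python
import math
import random

TILE = 4                  # hypothetical: one cache line covers a 4x4 texel block
TEX_W, TEX_H = 3840, 2160 # assumed texture size (same as the 4K target)

def clamp(v, lo, hi):
    return max(lo, min(v, hi))

def point_texel(u, v, w, h):
    """Nearest texel for normalized (u, v) with clamp addressing."""
    return (clamp(math.floor(u * w), 0, w - 1),
            clamp(math.floor(v * h), 0, h - 1))

def gather_texels(u, v, w, h):
    """The 2x2 bilinear footprint a Gather returns, with clamp addressing."""
    x0 = math.floor(u * w - 0.5)
    y0 = math.floor(v * h - 0.5)
    return {(clamp(x, 0, w - 1), clamp(y, 0, h - 1))
            for x in (x0, x0 + 1) for y in (y0, y0 + 1)}

def tiles(texels):
    """Map texels to the hypothetical cache tiles that contain them."""
    return {(x // TILE, y // TILE) for x, y in texels}

# Hypothetical noisy filter kernel: 16 taps within +/-6 texels of one pixel.
rng = random.Random(1234)
cx, cy, radius = 1920.5, 1080.5, 6.0
taps = [((cx + rng.uniform(-radius, radius)) / TEX_W,
         (cy + rng.uniform(-radius, radius)) / TEX_H) for _ in range(16)]

point_set = {point_texel(u, v, TEX_W, TEX_H) for u, v in taps}
gather_set = set().union(*(gather_texels(u, v, TEX_W, TEX_H) for u, v in taps))

print("texels  point:", len(point_set), " gather:", len(gather_set))
print("tiles   point:", len(tiles(point_set)), " gather:", len(tiles(gather_set)))
```

The 2x2 footprint always contains the nearest texel, so in this model Gather can only touch the same tiles or more. If the original SampleLevel call already used a bilinear sampler, it read the same 2x2 footprint, and the model would predict no extra tiles at all.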

Edit: This is on Windows 10 with DX12 (shader compiled with DXC), an RTX 3070, and driver version 511.65.