Please provide complete information as applicable to your setup.

**• Hardware Platform (Jetson / GPU)** Jetson

• DeepStream Version 6.0.1

• JetPack Version (valid for Jetson only) 4.6

• TensorRT Version 8.0.1

• NVIDIA GPU Driver Version (valid for GPU only)

**• Issue Type (questions, new requirements, bugs)** PyTorch ResNet50 classification in DeepStream

**• Requirement details:**

Hello, I used PyTorch to train a ResNet50 image classifier and converted it to a .engine file with trtexec. I deployed it as the SGIE (secondary inference) model in DeepStream; the primary detection model is PeopleNet. The output image does not show the classifier's label information, only the detections from the primary model.

These are my config files:

config_infer_secondary_test.txt (3.3 KB)

deepstream_app_config_people.txt (3.6 KB)

display result:

Looking forward to your reply

We don’t know anything about the ResNet50 model you deployed. Can you tell us why “net-scale-factor=0” is set in config_infer_secondary_test.txt?

All the parameters for nvinfer are explained in Gst-nvinfer — DeepStream 6.1.1 Release documentation.

You need to debug the model output problem yourself. The tips in the DeepStream SDK FAQ (Intelligent Video Analytics / DeepStream SDK, NVIDIA Developer Forums) may be helpful.

When I change the model in the config file to a model trained with TAO, the SGIE label information is displayed normally, but when I switch to the model I trained myself with PyTorch, the SGIE label information is not displayed. I compared the DeepStream output logs for the two models:

I found that the TAO model's output is 2x1x1 for two classes, but the custom model's output is only 1000, for 1000 classes.

So I think it is a post-processing issue.

What are the default custom-lib-path and parse-bbox-func for the SGIE inference configuration file?

“net-scale-factor=0” in config_infer_secondary_test.txt is also very confusing.

I don’t think it’s a problem with net-scale-factor=0, because it works fine when the sgie model is the TAO model

“net-scale-factor=0” means the model receives all-zero input. Are you sure it can output any classification result?
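To make the point above concrete, here is a minimal sketch of the per-pixel normalization that the Gst-nvinfer documentation describes as y = net-scale-factor * (x - mean). The function name `nvinfer_preprocess` and the sample pixel values are hypothetical and only for illustration; this is not the plugin's code.

```python
def nvinfer_preprocess(channels, net_scale_factor, offsets):
    """Apply y = net_scale_factor * (x - offset) to every pixel.

    channels: list of per-channel pixel lists (hypothetical sample data)
    offsets:  one mean value per channel (the "offsets" config key)
    """
    return [
        [net_scale_factor * (pixel - offset) for pixel in channel]
        for channel, offset in zip(channels, offsets)
    ]


# Hypothetical 3-channel crop with two pixels per channel.
sample = [[0.0, 255.0], [128.0, 64.0], [32.0, 200.0]]
means = [123.675, 116.28, 103.53]

zeroed = nvinfer_preprocess(sample, 0.0, means)        # net-scale-factor=0
scaled = nvinfer_preprocess(sample, 1.0 / 255.0, means)

print(zeroed)  # every value is zero, whatever the input image was
print(scaled)
```

With a scale factor of 0 every input tensor is identical, so the classifier cannot distinguish one object crop from another. The value should match the normalization used when the PyTorch model was trained.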

Which TAO model are you using?

I just set net-scale-factor=1, but the SGIE inference still does not display label information.

So I think it is a model problem. The TAO model and my custom PyTorch model need different post-processing, and that is why the labels are not displayed.

Please refer to the DeepStream SDK FAQ - Intelligent Video Analytics / DeepStream SDK - NVIDIA Developer Forums for how to debug output precision issues.

If the model is a customized model, please make sure the postprocessing is customized too.

What is the default post-processing library for TAO classification?

I set custom-lib-path=/opt/nvidia/deepstream/deepstream/libs/nvdsinfer_customparser/libnvdsinfer_customparser.so and parse-bbox-func-name=NvDsInferClassiferParseCustomSoftmax.

The default classification postprocessing is ClassifyPostprocessor::parseAttributesFromSoftmaxLayers() in /opt/nvidia/deepstream/deepstream/sources/utils/nvdsinfer/nvdsinfer_context_impl_output_parsing.cpp
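For readers who want the gist before opening that file, below is a simplified Python paraphrase of what a softmax-layer classifier parser of this kind typically does, assuming a single output layer of per-class scores. The function name `parse_attribute` and its arguments are hypothetical; the C++ source cited above is the authoritative reference.

```python
def parse_attribute(scores, labels, threshold):
    """Pick the highest-scoring class that is above the threshold.

    Returns (class_index, score, label), or None when no class qualifies.
    label is None when the labels list has no entry for the winning class.
    """
    best_index = None
    best_score = 0.0
    for index, score in enumerate(scores):
        if score > threshold and score > best_score:
            best_index, best_score = index, score
    if best_index is None:
        return None  # nothing to display for this object
    label = labels[best_index] if best_index < len(labels) else None
    return best_index, best_score, label


print(parse_attribute([0.1, 0.9], ["cat", "dog"], 0.5))
print(parse_attribute([0.4, 0.45], ["cat", "dog"], 0.5))
print(parse_attribute([0.0] * 999 + [0.9], ["cat", "dog"], 0.5))
```

Under these assumptions there are two ways to end up with no label on screen: no score passes the classifier threshold, or the winning class index has no entry in the labels file (for example a 1000-class output paired with a two-entry labels file).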

You should set “parse-classifier-func-name”, not “parse-bbox-func-name”. Please refer to NvDsInferClassiferParseCustomFunc() in /opt/nvidia/deepstream/deepstream/sources/includes/nvdsinfer_custom_impl.h
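As a rough illustration of the logic such a custom classifier parser might need, here is a Python sketch that assumes the PyTorch export ends in a plain linear layer and therefore emits raw logits rather than probabilities (the thread does not confirm this). The names `softmax` and `classify_from_logits` are hypothetical; a real parser would be a C++ function with the NvDsInferClassiferParseCustomFunc signature.

```python
import math


def softmax(logits):
    """Convert raw scores to probabilities that sum to 1."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(value - peak) for value in logits]
    total = sum(exps)
    return [value / total for value in exps]


def classify_from_logits(logits, labels, threshold):
    """Return (label, probability) for the top class, or None below threshold."""
    probabilities = softmax(logits)
    best = max(range(len(probabilities)), key=probabilities.__getitem__)
    if probabilities[best] < threshold:
        return None
    return labels[best], probabilities[best]


print(classify_from_logits([2.0, 0.0], ["standing", "sitting"], 0.5))
print(classify_from_logits([0.0, 0.0, 0.0], ["a", "b", "c"], 0.5))
```

The labels list here must have one entry per model output, so a 1000-output model needs a 1000-entry labels file in the matching order.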

Thank you very much, I have another question for you:

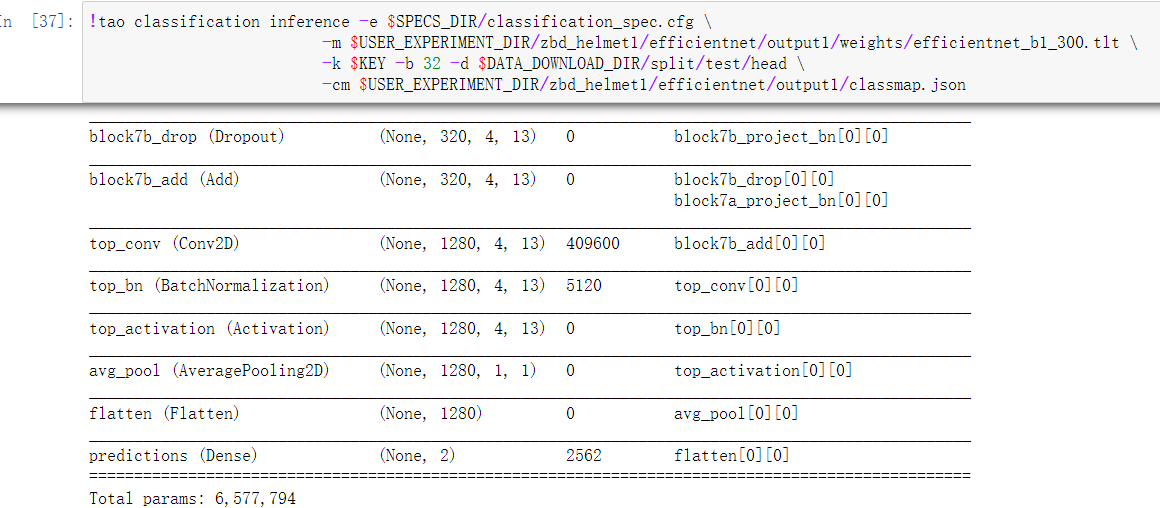

I found that the output structure of the TAO model is as follows:

But why is the DeepStream output 2x1x1? Shouldn’t it be 2?

There has been no update from you for a while, so we assume this is no longer an issue.

We are therefore closing this topic. If you need further support, please open a new one.

Thanks

We don’t know what you have done to your model. gst-nvinfer just shows what it reads from the model. Please check with whoever provided and generated the model.