• Hardware Platform (GPU) - NVIDIA GeForce RTX 3080

• DeepStream Version - 6.2

• TensorRT Version - 8.5.2-1+cuda11.8

• NVIDIA GPU Driver Version - 525.105.17

• DeepStream runs inside the deepstream:6.2-triton Docker container, which was started with the command: docker run --net=host --ipc=host -it --gpus all -e DISPLAY=$DISPLAY --name deepstream_trition deepstream_triton_image

**• The issue**

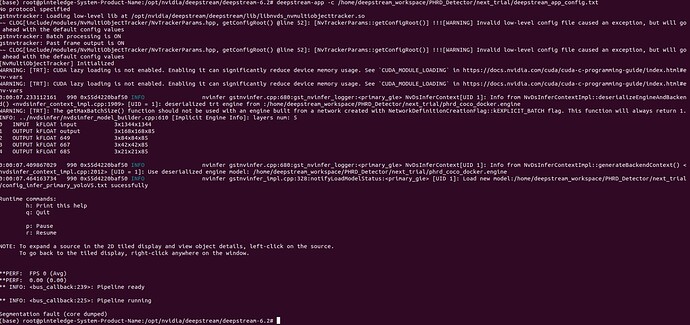

- I am trying to use my custom detector model, which uses YOLOv5 as its baseline, but the app fails with a **Segmentation fault (core dumped)** message.

**• How to reproduce the issue?**

To use my custom detector, I referred to the DeepStream-Yolo GitHub repo. I generated the cfg and wts files as described in the repository, and I converted my pretrained custom model to a TensorRT engine using “trtexec”.

Before that, I successfully ran the original YOLO sample provided with DeepStream, using /opt/nvidia/deepstream/deepstream-6.2/sources/objectDetector_Yolo/deepstream_app_config_yoloV3.txt. However, I noticed one difference between my custom detector and the original DeepStream sample: I don’t have “kernel.cu” and “kernel.o”.


If the two missing files (kernel.cu and kernel.o) are the actual cause of the issue, please help me understand how to create them.
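In case it helps narrow things down, here is a minimal sketch of a sanity check that could be run on the nvinfer config file before launching the app. It only verifies that the files referenced in the [property] section exist, on the assumption that a missing engine or custom parser library is one possible cause of a crash at startup. The key names (model-engine-file, custom-lib-path, labelfile-path) are the standard Gst-nvinfer ones; the function name `missing_files` is made up for illustration, and resolving relative paths against the config file's directory is an assumption about how nvinfer treats them.

```python
import configparser
from pathlib import Path

# Gst-nvinfer [property] keys whose values are file paths.
PATH_KEYS = ("model-engine-file", "custom-lib-path", "labelfile-path")


def missing_files(config_path):
    """Return {key: resolved_path} for each path key that points at a missing file.

    Hypothetical helper: it does not validate the engine or the library,
    it only checks that the referenced files are present on disk.
    """
    config_path = Path(config_path)
    # strict=False tolerates duplicated keys; interpolation=None keeps '%' literal.
    parser = configparser.ConfigParser(strict=False, interpolation=None)
    parser.read(config_path)
    if not parser.has_section("property"):
        raise ValueError(f"no [property] section found in {config_path}")

    missing = {}
    for key in PATH_KEYS:
        value = parser.get("property", key, fallback="").strip()
        if not value:
            continue  # key not set in this config, nothing to check
        target = Path(value)
        # Assumption: relative paths are relative to the config file's directory.
        if not target.is_absolute():
            target = config_path.parent / target
        if not target.is_file():
            missing[key] = str(target)
    return missing
```

Calling `missing_files(...)` with the path of the inference config used by the app returns an empty dict when every referenced file is present. An empty result does not rule out other causes, such as an engine built with a different TensorRT version or a parser function that does not match the model's output layout.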

I’ve been working on the issue for a week.

Any kind of help is sincerely appreciated.

Thanks in advance.