I want to implement ROI through the preprocess group in a deepstream-app config. How should I write config_preprocess.txt? I don't know which configuration items are available.

You can refer to our latest version. We provide a preprocess demo in samples\configs\deepstream-app\source4_1080p_dec_preprocess_infer-resnet_preprocess_sgie_tiled_display_int8.txt.

[property]

enable=1

# list of component gie-id for which tensor is prepared

target-unique-ids=1

# 0=NCHW, 1=NHWC, 2=CUSTOM

network-input-order=0

# 0=process on objects 1=process on frames

process-on-frame=1

# uniquely identify the metadata generated by this element

unique-id=0

# gpu-id to be used

gpu-id=0

# if enabled maintain the aspect ratio while scaling

maintain-aspect-ratio=1

# if enabled pad symmetrically with maintain-aspect-ratio enabled

symmetric-padding=1

# processing width/height at which image is scaled

processing-width=640

processing-height=368

# max buffer in scaling buffer pool

scaling-buf-pool-size=6

# max buffer in tensor buffer pool

tensor-buf-pool-size=6

# tensor shape based on network-input-order

network-input-shape= 60;3;368;640

# 0=RGB, 1=BGR, 2=GRAY

network-color-format=0

# 0=FP32, 1=UINT8, 2=INT8, 3=UINT32, 4=INT32, 5=FP16

tensor-data-type=0

# tensor name same as input layer name

tensor-name=input_1

# 0=NVBUF_MEM_DEFAULT 1=NVBUF_MEM_CUDA_PINNED 2=NVBUF_MEM_CUDA_DEVICE 3=NVBUF_MEM_CUDA_UNIFIED

scaling-pool-memory-type=0

# 0=NvBufSurfTransformCompute_Default 1=NvBufSurfTransformCompute_GPU 2=NvBufSurfTransformCompute_VIC

scaling-pool-compute-hw=0

# Scaling Interpolation method

# 0=NvBufSurfTransformInter_Nearest 1=NvBufSurfTransformInter_Bilinear 2=NvBufSurfTransformInter_Algo1

# 3=NvBufSurfTransformInter_Algo2 4=NvBufSurfTransformInter_Algo3 5=NvBufSurfTransformInter_Algo4

# 6=NvBufSurfTransformInter_Default

scaling-filter=0

# custom library .so path having custom functionality

custom-lib-path=/opt/nvidia/deepstream/deepstream/lib/gst-plugins/libcustom2d_preprocess.so

# custom tensor preparation function name having predefined input/outputs

# check the default custom library nvdspreprocess_lib for more info

custom-tensor-preparation-function=CustomTensorPreparation

[user-configs]

# parameters below are used by the default custom library nvdspreprocess_lib

pixel-normalization-factor=0.003921568

[group-0]

src-ids=-1

custom-input-transformation-function=CustomAsyncTransformation

process-on-roi=1

roi-params-src-0=300;200;1000;800;
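For reference, here is a minimal Python sketch of how the roi-params-src-0 value can be read, assuming the documented layout of left;top;width;height per ROI, repeated for each additional ROI. The helper names (Roi, parse_roi_params, fits_in_frame) and the 1920x1080 frame size are illustrative assumptions and not part of DeepStream; substitute the resolution of the frames that reach the preprocess element.

```python
from typing import List, NamedTuple


class Roi(NamedTuple):
    left: int
    top: int
    width: int
    height: int


def parse_roi_params(value: str) -> List[Roi]:
    """Split 'l;t;w;h;l;t;w;h;...' into Roi tuples (a trailing ';' is allowed)."""
    numbers = [int(tok) for tok in value.split(";") if tok.strip()]
    if len(numbers) % 4 != 0:
        raise ValueError(f"expected groups of 4 integers, got {len(numbers)} values")
    return [Roi(*numbers[i:i + 4]) for i in range(0, len(numbers), 4)]


def fits_in_frame(roi: Roi, frame_width: int, frame_height: int) -> bool:
    """True when the ROI rectangle lies fully inside the frame."""
    return (
        roi.left >= 0
        and roi.top >= 0
        and roi.left + roi.width <= frame_width
        and roi.top + roi.height <= frame_height
    )


# Value taken from the config above; the frame size is an assumption.
rois = parse_roi_params("300;200;1000;800;")
for roi in rois:
    print(roi, "fits in 1920x1080:", fits_in_frame(roi, 1920, 1080))
```

A check like this is only a sanity test on the numbers. It does not tell you whether the inference element actually consumes the preprocessed tensor.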

Why is the detected target not inside the ROI area?

Did you add the input-tensor-meta=1 parameter to the nvinfer configuration? Please check the demo file I pointed to earlier first.
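As a rough illustration of that check, the Python sketch below reads a deepstream-app config and reports whether preprocessing is wired to the primary inference engine. It assumes the group and key names used in the sample config mentioned above ([pre-process] with enable, [primary-gie] with input-tensor-meta). The embedded config text and the function name are made up for the example.

```python
import configparser
from typing import List

# Hypothetical excerpt of a deepstream-app config, for illustration only.
APP_CONFIG = """
[pre-process]
enable=1
config-file=config_preprocess.txt

[primary-gie]
enable=1
input-tensor-meta=1
config-file=config_infer_primary_yoloV5.txt
"""


def preprocess_wiring_problems(config_text: str) -> List[str]:
    """Return a list of human-readable problems; an empty list means both flags are set."""
    parser = configparser.ConfigParser(strict=False, interpolation=None)
    parser.read_string(config_text)

    problems = []
    # fallback="0" also covers a missing section or a missing key.
    if parser.get("pre-process", "enable", fallback="0") != "1":
        problems.append("[pre-process] is missing or not enabled")
    if parser.get("primary-gie", "input-tensor-meta", fallback="0") != "1":
        problems.append("[primary-gie] does not set input-tensor-meta=1")
    return problems


print(preprocess_wiring_problems(APP_CONFIG) or "preprocess wiring looks consistent")
```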

I use a YOLOv5 model. How can I set “tensor-name” to YOLOv5’s input layer name?

Please set it according to your own model. You can refer to our Guide to set it yourself.

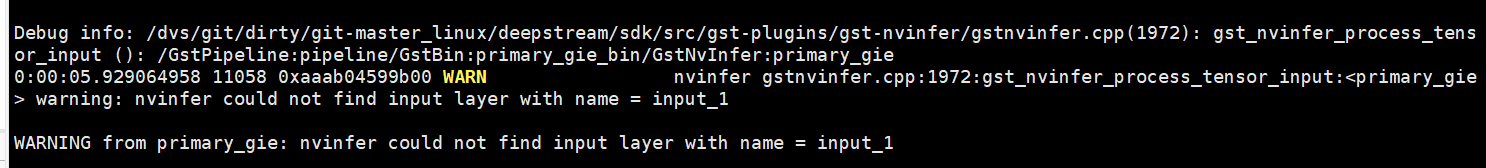

I followed your guide, but it doesn't work. Please help. These are my configurations:

config_preprocess.txt (3.6 KB)

deepstream_app_config.txt (1.3 KB)

config_infer_primary_yoloV5.txt (681 bytes)

Setting the tensor-name parameter to the input layer name of your TensorRT engine may do the job (unless I have misunderstood the parameter).

When you launch your pipeline, the names of the input and output layers are printed in the terminal at startup.

It would look something like this:

0 INPUT <input layer name> dimensions

1 OUTPUT <output layer name> dimensions & so on.
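If you save that startup output to a file, a small Python sketch like the one below can pull out the input layer name for you. The exact log layout is an assumption based on the shape quoted above (index, INPUT/OUTPUT, an optional data type such as kFLOAT, layer name, dimensions), and the sample log text, layer names, and dimensions are invented for the example.

```python
import re
from typing import List

# index, direction, optional data type (e.g. kFLOAT), layer name, dimensions
LAYER_LINE = re.compile(
    r"^\s*(\d+)\s+(INPUT|OUTPUT)\s+(?:k[A-Z0-9]+\s+)?(\S+)\s+(\S+)\s*$"
)

# Hypothetical startup log, for illustration only.
SAMPLE_LOG = """\
INFO: layers num: 2
0   INPUT  kFLOAT input           3x640x640
1   OUTPUT kFLOAT boxes           25200x4
"""


def input_layer_names(log_text: str) -> List[str]:
    """Collect the names of all layers reported as INPUT in the log text."""
    names = []
    for line in log_text.splitlines():
        match = LAYER_LINE.match(line)
        if match and match.group(2) == "INPUT":
            names.append(match.group(3))
    return names


for name in input_layer_names(SAMPLE_LOG):
    print(f"tensor-name={name}")
```

The printed value is what would go into tensor-name in config_preprocess.txt, provided the log format matches the assumption.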

Yes, so I think your setting should be tensor-name=input rather than input_1.

Thank you very much. It works.
