Please provide the following information when requesting support.

• Network Type : Detectnet_v2

• TLT Version: v5.5.0

We are seeing strange behavior with a DetectNet_v2 model. The model was trained for 300 epochs and evaluated with NMS and with DBSCAN+NMS post-processing. The hdf5 models give acceptable mAP values.

After converting the model to ONNX and evaluating it again, we see a huge drop in mAP. The accuracy values for each scenario are listed below:

1) mAP for NMS hdf5 model

Car AP: 77.16%

Pedestrian AP: 51.01%

Cyclist AP: 74.26%

mAP (mean AP): 67.48%

2) mAP for DBSCAN hdf5 model

Car AP: 78.22%

Pedestrian AP: 52.11%

Cyclist AP: 75.49%

mAP (mean AP): 68.60%

3) mAP for DBSCAN ONNX model

Car AP: 6.28%

Pedestrian AP: 1.79%

Cyclist AP: 0.97%

mAP (mean AP): 3.01%

The mAP has dropped from 68.60% (DBSCAN hdf5) to 3.01% (DBSCAN ONNX). Why is there such a large drop for the DetectNet_v2 model?
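To make the size of the drop explicit per class, here is a minimal Python sketch that recomputes the mean AP from the per-class values quoted above and lists the drop in percentage points. The helper names `mean_ap` and `per_class_drop` are made up for this post and are not part of TAO. Because the per-class values are already rounded, the recomputed mean can differ from the reported one in the last digit.

```python
# Per-class AP values (percent) copied from the three reports above.
reports = {
    "NMS hdf5": {"Car": 77.16, "Pedestrian": 51.01, "Cyclist": 74.26},
    "DBSCAN hdf5": {"Car": 78.22, "Pedestrian": 52.11, "Cyclist": 75.49},
    "DBSCAN ONNX": {"Car": 6.28, "Pedestrian": 1.79, "Cyclist": 0.97},
}


def mean_ap(per_class):
    """Unweighted mean of the per-class AP values (hypothetical helper)."""
    return sum(per_class.values()) / len(per_class)


def per_class_drop(before, after):
    """Absolute drop in percentage points for each class (hypothetical helper)."""
    return {name: before[name] - after[name] for name in before}


for name, per_class in reports.items():
    print(f"{name}: mAP = {mean_ap(per_class):.2f}%")

drop = per_class_drop(reports["DBSCAN hdf5"], reports["DBSCAN ONNX"])
for name, points in drop.items():
    print(f"{name}: drop of {points:.2f} points")
```

All three classes collapse together, which points more toward a systematic mismatch in the ONNX evaluation path (for example input preprocessing or box decoding) than toward a problem with a single class, although this is only a guess from the numbers.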

It would be helpful if you could tell us the evaluation method for the ONNX model so that we can compare our results.
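For reference, this is roughly the kind of evaluation we have in mind: a simplified, pure-Python sketch of per-class AP with IoU matching. It is not TAO's evaluator, and the function names, the 0.5 IoU threshold, and the box format `(x1, y1, x2, y2)` are our assumptions. The idea is to run the same routine on the post-processed boxes from both the hdf5 and the ONNX model, so that any difference comes from the model outputs and not from the metric.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(detections, ground_truth, iou_threshold=0.5):
    """AP for one class (simplified sketch, not the TAO implementation).

    detections:   list of (image_id, score, box)
    ground_truth: dict mapping image_id -> list of boxes
    """
    total_gt = sum(len(boxes) for boxes in ground_truth.values())
    if total_gt == 0:
        return 0.0
    matched = {img: [False] * len(boxes) for img, boxes in ground_truth.items()}
    tp = fp = 0
    points = []  # (recall, precision) after each detection, best score first
    for img, _score, box in sorted(detections, key=lambda d: d[1], reverse=True):
        best_iou, best_idx = 0.0, -1
        for idx, gt in enumerate(ground_truth.get(img, [])):
            overlap = iou(box, gt)
            if overlap > best_iou:
                best_iou, best_idx = overlap, idx
        # A detection is a true positive only if it hits an unmatched box.
        if best_idx >= 0 and best_iou >= iou_threshold and not matched[img][best_idx]:
            matched[img][best_idx] = True
            tp += 1
        else:
            fp += 1
        points.append((tp / total_gt, tp / (tp + fp)))
    # Integrate the precision envelope over recall.
    ap, prev_recall = 0.0, 0.0
    for i, (recall, _precision) in enumerate(points):
        envelope = max(p for _r, p in points[i:])
        ap += (recall - prev_recall) * envelope
        prev_recall = recall
    return ap


# Toy data: the same detections in pixel and in normalized coordinates.
gt = {"img0": [(0, 0, 10, 10)], "img1": [(0, 0, 10, 10)]}
dets_pixels = [("img0", 0.9, (0, 0, 10, 10)), ("img1", 0.7, (1, 1, 10, 10))]
dets_scaled = [(img, s, tuple(v / 100 for v in box)) for img, s, box in dets_pixels]
print(average_precision(dets_pixels, gt), average_precision(dets_scaled, gt))
```

In the toy data, the same detections expressed in a different coordinate scale no longer overlap the ground truth, which is one way a correct model could still report a near-zero AP.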

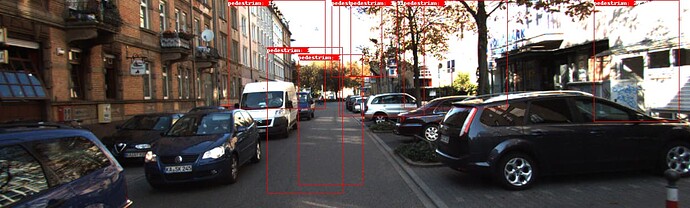

I am facing a similar issue when testing with a TensorRT engine. The inference results obtained from the engine are shown below.

I am also seeing a drop in accuracy after converting the model to ONNX.

- Network Type : Detectnet_v2

- TLT Version: v5.5.0

tao deploy detectnet_v2 gen_trt_engine -m /workspace/tao-experiments/detectnet_v2/export/tao_export_model.onnx --data_type fp32 --batches 10 --batch_size 4 --max_batch_size 64 --engine_file /workspace/tao-experiments/detectnet_v2/results_trt/resnet18_detector.trt.fp32 --cal_cache_file /workspace/tao-experiments/detectnet_v2/results_trt/calibration.bin -e /workspace/tao-experiments/detectnet_v2/specs/train_config.yaml --results_dir /workspace/tao-experiments/detectnet_v2/results_trt --verbose

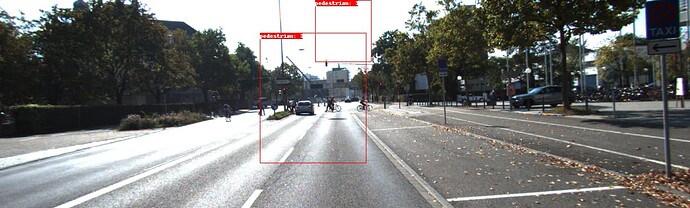

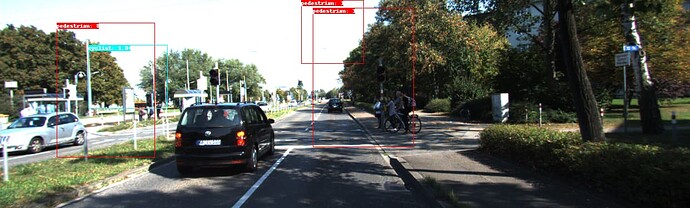

tao deploy detectnet_v2 inference -e /workspace/tao-experiments/detectnet_v2/specs/detectnet_v2_inference_kitti_etlt.txt -m /workspace/tao-experiments/detectnet_v2/results_trt/resnet18_detector.trt.fp32 -r /workspace/tao-experiments/detectnet_v2/results_trt -i /workspace/tao-experiments/detectnet_v2/test_samples -b 4

@joel.kunjachanvarghese

Could you please create a new topic? You can upload your files there as well. Thanks.

Hi,

I tried it with a TensorRT engine and also got very low accuracy. Please let me know if you are seeing a similar accuracy drop after exporting to ONNX.

I suggest running the default notebook (tao_tutorials/notebooks/tao_launcher_starter_kit/detectnet_v2 on the main branch of the NVIDIA/tao_tutorials GitHub repository) to check whether you can reproduce the accuracy issue with a TensorRT engine.