Description

Hi all,

Has anyone successfully converted a Detectron2 model to a TensorRT engine? And has anyone tried running a Detectron2 model on TensorRT?

I have been trying to run a Detectron2 model on TensorRT, but I ran into two significant problems while converting the model in two different ways.

First Issue

I used the official file, caffe2_export.py, from the Detectron2 GitHub repository to export the ONNX model. Please check this part of the code. When I tested it, I got the error below:

RuntimeError: No such operator caffe2::AliasWithName

The command is quite similar to the one in the instructions here.

The test environment was Windows with PyTorch v1.3.2.
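One way to narrow this down is to check whether the installed PyTorch build registers the operator at all. The sketch below is a hedged diagnostic, not a fix. The helper name `has_torch_op` is hypothetical, and the namespace `caffe2` is taken from the error text above, which is an assumption about where the exporter looks the operator up.

```python
def has_torch_op(namespace, name):
    """Return True if torch.ops.<namespace>.<name> resolves to an operator.

    Hypothetical helper for diagnosis only; it is not part of detectron2.
    """
    try:
        import torch
    except ImportError:
        return False  # PyTorch itself is not importable in this environment.
    try:
        getattr(getattr(torch.ops, namespace), name)
    except (RuntimeError, AttributeError):
        # Depending on the PyTorch release, a missing operator surfaces
        # as RuntimeError ("No such operator ...") or as AttributeError.
        return False
    return True


# Namespace and operator name copied from the error message (assumption).
print("caffe2::AliasWithName registered:", has_torch_op("caffe2", "AliasWithName"))
```

If this prints False, the operator is not registered in that PyTorch build, so the export cannot find it no matter which command line is used. That would point at the PyTorch installation rather than at the model.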

Environment setting

GPU Type: GTX 1080 TI

Nvidia Driver Version: 431.86

CUDA Version: 10.0

CUDNN Version: 7.6.3

Operating System + Version: Win10

Python Version (if applicable): 3.6

TensorFlow Version (if applicable): 1.4

PyTorch Version (if applicable): 1.31
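Version strings are easy to mistype when copied by hand, so it can help to print them from the environment that actually ran the export. The sketch below is a minimal example. The function name `collect_env` and the default package list are assumptions for illustration, and it reports only what Python can see (not the NVIDIA driver version).

```python
import importlib
import platform


def collect_env(packages=("torch", "onnx", "onnxruntime")):
    """Return version strings for the interpreter, the OS, and optional packages."""
    info = {
        "python": platform.python_version(),
        "os": "{} {}".format(platform.system(), platform.version()),
    }
    for name in packages:
        try:
            module = importlib.import_module(name)
        except Exception:  # missing package or a broken install
            info[name] = "not installed"
            continue
        info[name] = str(getattr(module, "__version__", "unknown"))
        if name == "torch":
            # torch.version.cuda is None for CPU-only builds.
            info["cuda (torch build)"] = str(module.version.cuda)
            # cudnn.version() returns None when cuDNN is unavailable.
            info["cudnn (torch build)"] = str(module.backends.cudnn.version())
    return info


# Usage: print one "key: value" line per entry.
# for key, value in collect_env().items():
#     print("{}: {}".format(key, value))
```

Pasting this output into the post keeps the PyTorch, CUDA, and cuDNN versions consistent with what was installed at the time of the test.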

Second Issue

I also tried to use the torch.onnx.export function to export the model.

However, I ran into a significant problem with Python classes, for example in post-processing and anywhere else the model relies on them. In Detectron2, parts such as ROI Align and post-processing are written as Python classes, and ONNX export seems unable to handle them. I think this is a serious issue for anyone who wants to use the model with TensorRT. Currently, I can successfully export an ONNX model that includes only the backbone and FPN. (The detailed steps to reproduce this are below.) Is there any way to export an entire Detectron2 model to ONNX?
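To illustrate why the backbone + FPN part can be exported while the rest cannot, here is a minimal sketch of the usual pattern: wrap the module so that only tensors cross its boundary, then pass the wrapper to torch.onnx.export. `ToyBackbone`, `TensorOnlyWrapper`, and `export_backbone` are hypothetical names. The toy backbone is a stand-in that returns a dict of feature maps; it is not Detectron2's actual backbone, and this is not the code in test_detect.py.

```python
from collections import OrderedDict

import torch
import torch.nn as nn


class ToyBackbone(nn.Module):
    """Hypothetical stand-in for backbone + FPN: returns named feature maps."""

    def __init__(self):
        super().__init__()
        self.stem = nn.Conv2d(3, 8, kernel_size=3, stride=2, padding=1)
        self.down = nn.Conv2d(8, 8, kernel_size=3, stride=2, padding=1)

    def forward(self, x):
        p2 = torch.relu(self.stem(x))
        p3 = torch.relu(self.down(p2))
        return OrderedDict([("p2", p2), ("p3", p3)])


class TensorOnlyWrapper(nn.Module):
    """Returns a plain tuple of tensors so the exporter sees no custom classes."""

    def __init__(self, backbone, names):
        super().__init__()
        self.backbone = backbone
        self.names = list(names)

    def forward(self, x):
        feats = self.backbone(x)
        return tuple(feats[n] for n in self.names)


def export_backbone(wrapper, dummy, target):
    """Export the wrapper; target is a file path or a file-like object."""
    wrapper.eval()
    with torch.no_grad():
        torch.onnx.export(
            wrapper,
            (dummy,),
            target,
            input_names=["image"],
            output_names=list(wrapper.names),
        )
```

A call such as `export_backbone(TensorOnlyWrapper(ToyBackbone(), ["p2", "p3"]), torch.zeros(1, 3, 64, 64), "backbone_fpn.onnx")` would write the graph to a file. The same wrapping does not by itself make the class-based heads and post-processing exportable; it only shows why a tensor-in, tensor-out sub-module is the easy case.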

Environment setting

GPU Type: GTX1080

Nvidia Driver Version: 445.74

CUDA Version: 10.0.130

CUDNN Version: 7.6.0

Operating System + Version: Windows 10 10.0.18363

Python Version (if applicable): 3.6.5

PyTorch Version (if applicable): 1.3.1

Reproduce

To reproduce my first issue:

- Clone the detectron2 repository with git.

- Run tools/deploy/caffe2_converter.py to export the ONNX model.

(Instructions: Deployment — detectron2 0.6 documentation)

To reproduce my second issue:

Here are the steps for the part that succeeds, which includes only the backbone + FPN.

- Complete Part A (install Detectron2 and the additional requirements).

- Go to the detectron/tools folder (called "this folder" below).

- Download test_detect.py (the document is here) and put it in this folder.

- Open a command line/terminal in this folder and type

python3 test_detect.py

NOTE:

Check this: For detectron2 issue · GitHub

Please check lines 165 and 166, shown below:

dummy_convert(cfg, only_backbone = True) # only backbone + FPN

dummy_convert(cfg, only_backbone = False) # all

If only_backbone = True, the conversion succeeds, but the exported model contains only the backbone + FPN.

However, if only_backbone = False, the whole model is included and the conversion fails.

- Done

Part A

Requirements:

- See DETECTRON2 requirements here

- Additional requirements that have been tested:

- onnxruntime-gpu 1.1.2

- onnx-simplifier 0.2.9

- pytorch 1.3.1

- Python 3.6.5

Log Success

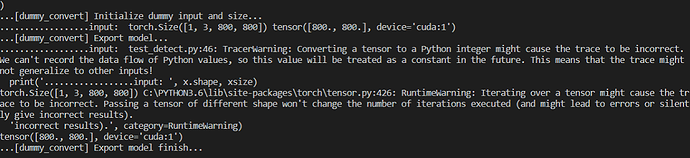

Log test convert:

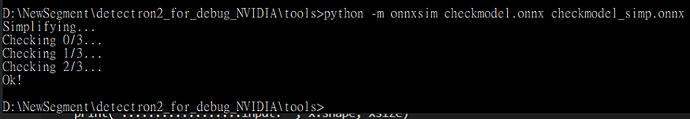

Log of successful ONNX simplification:

The logs above show the successful case, in which the model is converted to ONNX without the class-based parts of Detectron2.

For Detectron2, I think the second issue is the more serious and important one.

What I Expect

My goal is to convert the Detectron2 model to an ONNX model, and then convert that ONNX model to a TensorRT engine.