Please provide complete information as applicable to your setup.

• Hardware Platform (Jetson / GPU) Jetson

• DeepStream Version 6.0

• JetPack Version (valid for Jetson only) JetPack 4.6.3

• TensorRT Version 8.2.1

• NVIDIA GPU Driver Version (valid for GPU only) cuda 10.2

• Issue Type (questions, new requirements, bugs): questions

Hello, I trained a model in Docker (`nvcr.io/nvidia/tlt-streamanalytics:v3.0-dp-py3`) and got the `.etlt` file of the model yolov4_resnet50. When I try to deploy the file to the AGX, I get the following error:

```
(gst-plugin-scanner:9088): GStreamer-WARNING **: 22:24:24.232: Failed to load plugin '/usr/lib/aarch64-linux-gnu/gstreamer-1.0/deepstream/libnvdsgst_inferserver.so': libtritonserver.so: cannot open shared object file: No such file or directory
(gst-plugin-scanner:9088): GStreamer-WARNING **: 22:24:24.234: Failed to load plugin '/usr/lib/aarch64-linux-gnu/gstreamer-1.0/deepstream/libnvdsgst_udp.so': librivermax.so.0: cannot open shared object file: No such file or directory
*** DeepStream: Launched RTSP Streaming at rtsp://localhost:8554/ds-test ***
Opening in BLOCKING MODE
gstnvtracker: Loading low-level lib at /opt/nvidia/deepstream/deepstream-6.0/lib/libnvds_nvmultiobjecttracker.so
gstnvtracker: Batch processing is ON
gstnvtracker: Past frame output is ON
[NvMultiObjectTracker] Initialized
0:00:03.203432639 9087 0x7f0c0022d0 WARN nvinfer gstnvinfer.cpp:635:gst_nvinfer_logger:<primary_gie> NvDsInferContext[UID 1]: Warning from NvDsInferContextImpl::initialize() <nvdsinfer_context_impl.cpp:1161> [UID = 1]: Warning, OpenCV has been deprecated. Using NMS for clustering instead of cv::groupRectangles with topK = 20 and NMS Threshold = 0.5
ERROR: Deserialize engine failed because file path: /home/py/Downloads/facenet/resnet18_detector.etlt_b1_gpu0_fp32.engine open error
0:00:05.079530093 9087 0x7f0c0022d0 WARN nvinfer gstnvinfer.cpp:635:gst_nvinfer_logger:<primary_gie> NvDsInferContext[UID 1]: Warning from NvDsInferContextImpl::deserializeEngineAndBackend() <nvdsinfer_context_impl.cpp:1889> [UID = 1]: deserialize engine from file :/home/py/Downloads/facenet/resnet18_detector.etlt_b1_gpu0_fp32.engine failed
0:00:05.099664027 9087 0x7f0c0022d0 WARN nvinfer gstnvinfer.cpp:635:gst_nvinfer_logger:<primary_gie> NvDsInferContext[UID 1]: Warning from NvDsInferContextImpl::generateBackendContext() <nvdsinfer_context_impl.cpp:1996> [UID = 1]: deserialize backend context from engine from file :/home/py/Downloads/facenet/resnet18_detector.etlt_b1_gpu0_fp32.engine failed, try rebuild
0:00:05.099816356 9087 0x7f0c0022d0 INFO nvinfer gstnvinfer.cpp:638:gst_nvinfer_logger:<primary_gie> NvDsInferContext[UID 1]: Info from NvDsInferContextImpl::buildModel() <nvdsinfer_context_impl.cpp:1914> [UID = 1]: Trying to create engine from model files
ERROR: [TRT]: UffParser: Validator error: FirstDimTile_1: Unsupported operation _BatchTilePlugin_TRT
parseModel: Failed to parse UFF model
ERROR: Failed to build network, error in model parsing.
ERROR: Failed to create network using custom network creation function
ERROR: Failed to get cuda engine from custom library API
0:00:05.855356847 9087 0x7f0c0022d0 ERROR nvinfer gstnvinfer.cpp:632:gst_nvinfer_logger:<primary_gie> NvDsInferContext[UID 1]: Error in NvDsInferContextImpl::buildModel() <nvdsinfer_context_impl.cpp:1934> [UID = 1]: build engine file failed
Segmentation fault (core dumped)
```

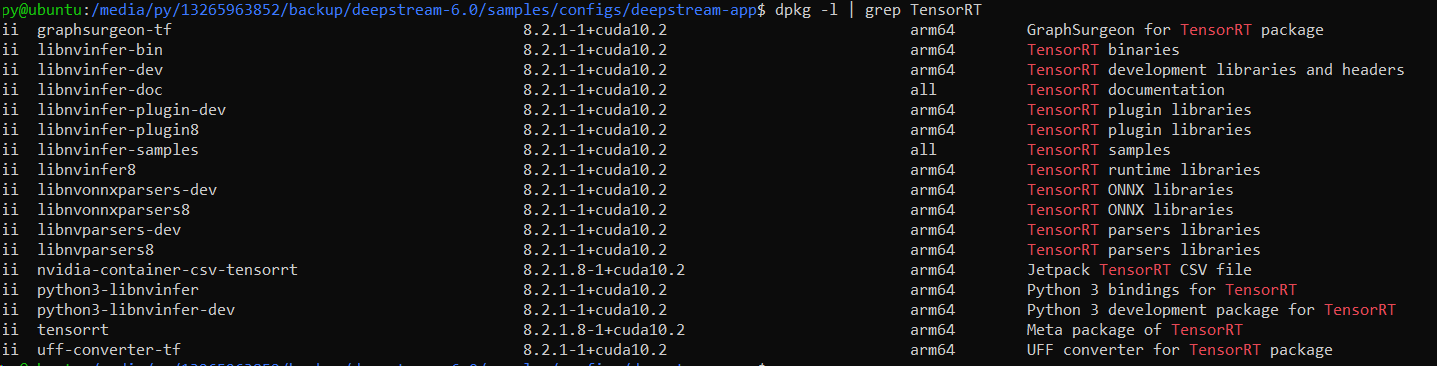

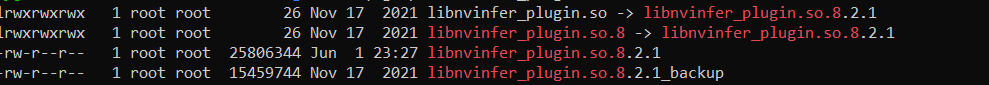

Then I checked the TensorRT versions in the TLT Docker container and on the AGX.

TLT Docker:

AGX:
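For anyone reproducing this comparison, here is a minimal sketch that prints the TensorRT version in an environment and checks whether a plugin named `BatchTilePlugin_TRT` (the operation the UFF parser rejects in the log above) is registered. It assumes the TensorRT Python bindings are installed in both the TLT container and on the AGX. The helper name `trt_report` is hypothetical. If the plugin is missing from the registry, that would be consistent with the `Unsupported operation _BatchTilePlugin_TRT` error, but this check alone does not prove the cause.

```python
import importlib


def trt_report(plugin_name="BatchTilePlugin_TRT"):
    """Return (TensorRT version, whether plugin_name is registered).

    Hypothetical helper. Returns (None, None) when the TensorRT Python
    bindings cannot be imported in the current environment.
    """
    try:
        trt = importlib.import_module("tensorrt")
    except ImportError:
        return None, None

    # Register the plugins shipped in libnvinfer_plugin with the global registry.
    logger = trt.Logger(trt.Logger.WARNING)
    trt.init_libnvinfer_plugins(logger, "")

    # Collect the names of every registered plugin creator.
    registry = trt.get_plugin_registry()
    names = {creator.name for creator in registry.plugin_creator_list}
    return trt.__version__, plugin_name in names


if __name__ == "__main__":
    version, found = trt_report()
    if version is None:
        print("TensorRT Python bindings not found")
    else:
        print(f"TensorRT {version}, BatchTilePlugin_TRT registered: {found}")
```

Running it once inside the container and once on the AGX gives two directly comparable lines of output.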

What should I do?

Looking forward to your reply.

Thank you