Please provide the following information when requesting support.

• Hardware (T4/V100/Xavier/Nano/etc)

V100

• Network Type (Detectnet_v2/Faster_rcnn/Yolo_v4/LPRnet/Mask_rcnn/Classification/etc)

Classification

• TLT Version (Please run "tlt info --verbose" and share "docker_tag" here)

dockers: ['nvidia/tao/tao-toolkit-tf', 'nvidia/tao/tao-toolkit-pyt', 'nvidia/tao/tao-toolkit-lm']

format_version: 2.0

toolkit_version: 3.22.05

published_date: 05/25/2022

• Training spec file(If have, please share here)

• How to reproduce the issue ? (This is for errors. Please share the command line and the detailed log here.)

When I use TAO to train a classification model, I get the following error:

```
Traceback (most recent call last):
  File "/usr/local/bin/classification", line 8, in <module>
    sys.exit(main())
  File "/root/.cache/bazel/_bazel_root/ed34e6d125608f91724fda23656f1726/execroot/ai_infra/bazel-out/k8-fastbuild/bin/magnet/packages/iva/build_wheel.runfiles/ai_infra/iva/makenet/entrypoint/makenet.py", line 12, in main
  File "/root/.cache/bazel/_bazel_root/ed34e6d125608f91724fda23656f1726/execroot/ai_infra/bazel-out/k8-fastbuild/bin/magnet/packages/iva/build_wheel.runfiles/ai_infra/iva/common/entrypoint/entrypoint.py", line 263, in launch_job
  File "/root/.cache/bazel/_bazel_root/ed34e6d125608f91724fda23656f1726/execroot/ai_infra/bazel-out/k8-fastbuild/bin/magnet/packages/iva/build_wheel.runfiles/ai_infra/iva/common/entrypoint/entrypoint.py", line 47, in get_modules
  File "/usr/lib/python3.6/importlib/__init__.py", line 126, in import_module
    return _bootstrap._gcd_import(name[level:], package, level)
  File "<frozen importlib._bootstrap>", line 994, in _gcd_import
  File "<frozen importlib._bootstrap>", line 971, in _find_and_load
  File "<frozen importlib._bootstrap>", line 955, in _find_and_load_unlocked
  File "<frozen importlib._bootstrap>", line 665, in _load_unlocked
  File "<frozen importlib._bootstrap_external>", line 678, in exec_module
  File "<frozen importlib._bootstrap>", line 219, in _call_with_frames_removed
  File "/root/.cache/bazel/_bazel_root/ed34e6d125608f91724fda23656f1726/execroot/ai_infra/bazel-out/k8-fastbuild/bin/magnet/packages/iva/build_wheel.runfiles/ai_infra/iva/makenet/scripts/evaluate.py", line 34, in <module>
  File "/usr/local/lib/python3.6/dist-packages/tensorflow_core/python/client/session.py", line 1585, in __init__
    super(Session, self).__init__(target, graph, config=config)
  File "/usr/local/lib/python3.6/dist-packages/tensorflow_core/python/client/session.py", line 699, in __init__
    self._session = tf_session.TF_NewSessionRef(self._graph._c_graph, opts)
tensorflow.python.framework.errors_impl.InternalError: cudaGetDevice() failed. Status: CUDA driver version is insufficient for CUDA runtime version
2022-09-09 15:06:34,933 [INFO] tlt.components.docker_handler.docker_handler: Stopping container.
```

I first tried to uninstall the old version of the driver:

./NVIDIA-Linux-x86_64-515.65.01.run --uninstall

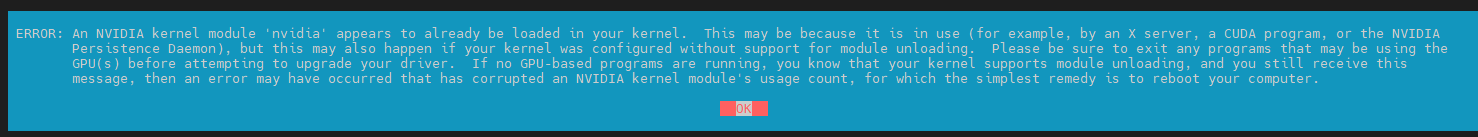

I then tried to upgrade the driver by running the installer, but an error occurred:

./NVIDIA-Linux-x86_64-515.65.01.run

Training still fails with the same error after a reboot.
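To confirm which driver is actually loaded after the reboot, a check along these lines might help. It is only a rough sketch: it asks `nvidia-smi` for the driver version and compares it with a minimum. The helper names are made up for illustration, and the `MIN_DRIVER` value of 510 is a placeholder assumption, not a confirmed requirement; the real minimum should come from the TAO Toolkit release notes.

```python
import subprocess

# ASSUMPTION: placeholder threshold, not taken from the TAO documentation.
MIN_DRIVER = "510"


def parse_version(text):
    """Turn a dotted version such as '515.65.01' into a tuple of three ints."""
    parts = [int(p) for p in text.strip().split(".")]
    # Pad short versions ('510' -> 510.0.0) so tuples compare consistently.
    return tuple(parts + [0] * (3 - len(parts)))


def driver_is_sufficient(installed, required):
    """Return True if the installed driver version is at least the required one."""
    return parse_version(installed) >= parse_version(required)


def installed_driver_version():
    """Ask nvidia-smi for the loaded driver version; None if it cannot be read."""
    try:
        out = subprocess.run(
            ["nvidia-smi", "--query-gpu=driver_version", "--format=csv,noheader"],
            capture_output=True, text=True, check=True,
        ).stdout
    except (OSError, subprocess.CalledProcessError):
        return None
    lines = out.strip().splitlines()
    return lines[0].strip() if lines else None


if __name__ == "__main__":
    version = installed_driver_version()
    if version is None:
        print("nvidia-smi could not report a driver version (driver not loaded?)")
    elif driver_is_sufficient(version, MIN_DRIVER):
        print(f"driver {version} meets the assumed minimum {MIN_DRIVER}")
    else:
        print(f"driver {version} is older than the assumed minimum {MIN_DRIVER}")
```

If `nvidia-smi` itself fails on the host, the installer probably did not finish, and the problem is with the driver installation rather than with TAO.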